Increasingly, Computer Systems May Harness Us and Our Data to Machines, Often Without Our Knowledge. How Should We Regulate That?

Back in 1999, Tim Berners-Lee, inventor of the World Wide Web, envisioned a time when computers would be used “to create abstract social machines on the Web: processes in which the people do the creative work and the machine does the administration.” More than 15 years later, the idea of social machines remains both arcane and relatively unexamined. Yet such machines are all around us. Many are built on social networks such as Facebook, in which human interactions, from organizing a birthday party to protesting terrorist attacks, are underpinned by an engineered computing environment. Others are found in massively multiplayer online games, where a persistent online environment facilitates interactions between real people over virtual resources.

Most computer users regularly, and unknowingly, become part of another type of social machine. A CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart), co-invented by Luis von Ahn, is the distorted sequence of letters that users must type into a box to prove that they are human (e.g., when commenting on a blog). Computers cannot reliably read such distorted text, so the test keeps bots from posting spam as comments.

A CAPTCHA involves no social computing. But von Ahn extended the idea to create the social machine reCAPTCHA. Google (which bought reCAPTCHA in 2009) uses it to help digitize older books. reCAPTCHA presents the user with two words, not one. The first is a normal CAPTCHA; the second is a word from an old book that optical character recognition (OCR) software cannot identify. If the person gets the first word right, he or she is presumed to be human. And because humans are good at recognizing words, the response to the second word is likely to be a plausible reading of it. Presenting the same unknown word to multiple users allows a consensus to emerge. The goal of the social machine is to digitize books; the need for users to prove themselves human supplies the mechanism. But reCAPTCHA also illustrates one of the challenges of social machines: it is purely exploitative, as the goal of the machine is independent of the needs of its human “components.”
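The verification-plus-transcription loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Google's implementation: the function names `submit` and `consensus`, the vote thresholds, and the sample words are all hypothetical. An answer to the unknown word counts only if the same user got the control word right, and a transcription is accepted once enough verified humans agree.

```python
from collections import Counter
from typing import Optional

# Illustrative thresholds (assumptions, not reCAPTCHA's real values).
MIN_VOTES = 3    # verified answers needed before deciding
MIN_SHARE = 0.7  # fraction of those answers that must agree


def submit(control_answer: str, control_truth: str,
           unknown_answer: str, votes: Counter) -> bool:
    """Check the known (control) word; only if it is right, record the
    user's reading of the unknown word as one vote."""
    if control_answer.strip().lower() != control_truth.strip().lower():
        return False  # failed the ordinary CAPTCHA, so the guess is discarded
    votes[unknown_answer.strip().lower()] += 1
    return True


def consensus(votes: Counter) -> Optional[str]:
    """Return the agreed transcription once enough humans concur, else None."""
    total = sum(votes.values())
    if total < MIN_VOTES:
        return None
    word, count = votes.most_common(1)[0]
    return word if count / total >= MIN_SHARE else None


# Hypothetical example: the control word is "morning"; the unknown word
# comes from a scanned page that OCR could not read.
votes: Counter = Counter()
answers = [
    ("morning", "overlooks"),
    ("morning", "overlooks"),
    ("mornin", "0verlooks"),  # control word wrong: answer ignored
    ("morning", "overlooks"),
]
for control, unknown in answers:
    submit(control, "morning", unknown, votes)

print(consensus(votes))  # overlooks
```

Requiring both a minimum number of votes and a minimum share of agreement keeps a single mistaken (or mischievous) user from settling the transcription alone; the particular values chosen here are arbitrary.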

Social Machines: Promise and Hazard

In the past year we have seen exploitation by social machines taken to more troubling extremes. Research published in June 2014 by academics from Cornell University, working with Facebook, induced and measured changes in the emotions of 689,000 Facebook users without their explicit consent, using the fine print of the company’s data-use policy as an ethical fig leaf. Despite the echoes of Stanley Milgram’s notorious obedience experiments of the 1960s, nobody seemed very ashamed. Shortly afterwards, the dating site OkCupid boasted that it too had experimented on its customers without their consent, matching up apparently ill-suited pairs to see how they got on. Since those pairs got on quite well, OkCupid’s customers might reasonably question its compatibility algorithms.

Used responsibly, however, social machines can be powerful tools for achieving what neither computers nor humans can accomplish by themselves. Take the DARPA balloon challenge of 2009, in which the aim was to find ten weather balloons placed at undisclosed locations around the US. The rules of the challenge were designed to encourage the growth of a network of people taking part in the search, favoring a crowd-sourced solution. The winning team, from the Massachusetts Institute of Technology, did this by creating a social machine in which people were financially rewarded both for finding balloons and for recruiting others to the network. The MIT team began with four people and, using social media, had recruited over 5,000 by the time it finished, less than ten hours later. The task itself may have been trivial, but similar social machines can address far more serious problems, for example by enabling people with a particular health condition to pool resources and offer support and advice to fellow sufferers (e.g., CureTogether).
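Why would people recruit others rather than simply search themselves? As publicly described by the MIT team, each balloon carried $4,000: $2,000 went to the finder, $1,000 to whoever had invited the finder, $500 to that person's inviter, and so on up the chain, with any remainder donated to charity. The sketch below, using a hypothetical `payouts` function and made-up names, illustrates that halving rule; because the rewards form a geometric series, the total paid per balloon always stays below $4,000, however long the chain grows.

```python
from typing import Dict, List

FINDER_REWARD = 2000.0  # dollars to the person who spots the balloon


def payouts(chain: List[str], finder_reward: float = FINDER_REWARD) -> Dict[str, float]:
    """chain[0] found the balloon, chain[1] invited chain[0],
    chain[2] invited chain[1], and so on. Each step up halves the reward."""
    rewards: Dict[str, float] = {}
    amount = finder_reward
    for person in chain:
        rewards[person] = amount
        amount /= 2
    return rewards


# Hypothetical recruitment chain for one balloon.
chain = ["Dana", "Carlos", "Bea", "Ali"]
rewards = payouts(chain)
print(rewards)  # {'Dana': 2000.0, 'Carlos': 1000.0, 'Bea': 500.0, 'Ali': 250.0}

budget = 2 * FINDER_REWARD  # $4,000 per balloon
print(budget - sum(rewards.values()))  # 250.0 left over (to charity)
```

Because everyone on the path to a find is paid something, each participant stands to gain from bringing in others who might spot a balloon they would otherwise miss.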

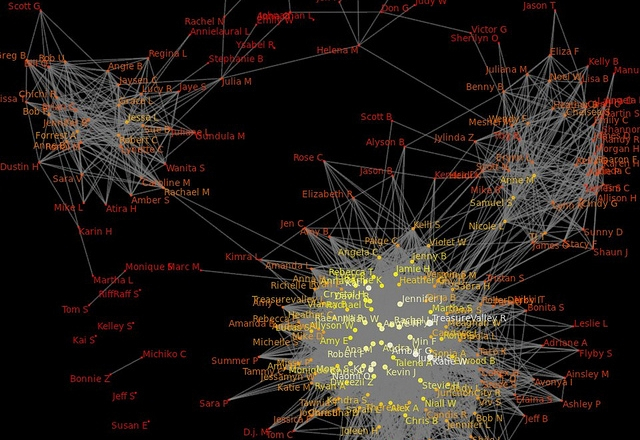

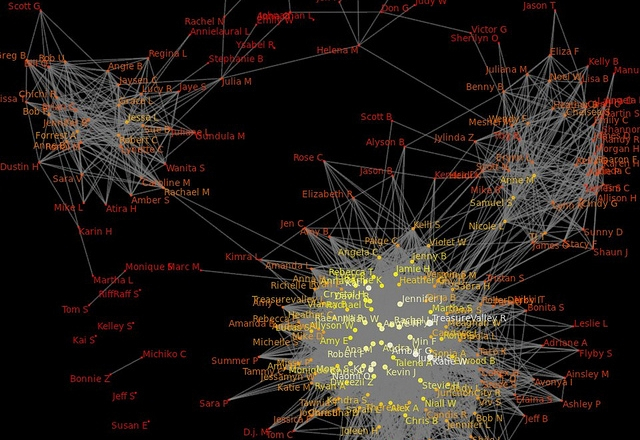

The increasing availability of data and of powerful platforms for handling it (e.g., Ushahidi and Galaxy Zoo) is enabling many individuals and communities to identify and solve their own problems, harnessing collective commitment, local knowledge and embedded skills. With the help of advanced social-networking tools, they can draw on their own social networks rather than relying on remote experts. Such social machines have great potential, but crucially they will depend on participants’ willingness to trust their peers, to keep providing data to the systems, and to trust that the systems will deliver services they consider fair and appropriate.

Broadening the Debate

As the Cornell/Facebook and OkCupid experiments show, such trust is by no means a given. So what are the guidelines for how personal and other data may be used in a social machine, and who should determine them? What are the guiding principles by which social machines should operate? And what does it mean to “program” a social machine? After all, integrating human and machine problem-solving power so that each complements the other requires abstracting away from today’s methods and algorithms. Such questions underline the need for a much broader discussion about data and its impact than we are currently seeing.

Along with Kieron O’Hara of Britain’s University of Southampton and M-H. Carolyn Nguyen of Microsoft Corporation, I co-edited a recently released book on these and related issues. The latest in the Digital Enlightenment Forum’s annual Digital Enlightenment Yearbook series, Social Networks and Social Machines, Surveillance and Empowerment considers the evolving relationships between individuals, data, networks and computers. Writers from various disciplines (technology, law, philosophy, sociology, economics and policymaking) bring their diverse opinions and perspectives to bear on this topic—one whose relevance is set to increase rapidly in the years ahead.

The book’s comprehensive introduction can be read or downloaded free of charge, and the book itself is available for purchase.

Peter Haynes is a nonresident senior fellow with the Atlantic Council’s Strategic Foresight Initiative.

Image: Social machines may use people's interactions with computers to perform tasks or generate research data without their knowledge. (Wikimedia.org/ Kenneth Freeman/ CC License BY-SA 2.0)