Data rules for machine learning: How Europe can unlock the potential while mitigating the risks

Executive summary

Artificial intelligence (AI) will increasingly shape societies and the global economy. Machine learning, which is responsible for the vast majority of AI advancements, is enhancing the way businesses and governments make decisions, develop products, and deliver services.[1: Karen Hao, “What Is Machine Learning?,” MIT Technology Review, November 17, 2018, accessed July 25, 2021, https://www.technologyreview.com/2018/11/17/103781/what-is-machine-learning-we-drew-you-another-flowchart/.] For machine learning algorithms to learn and make increasingly accurate predictions, they need large quantities of high-quality data that is relevant, representative, free of errors, and complete.[2: European Commission, Proposal for a Regulation Laying down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts, Forwarded to the European Parliament and the Council, 2021/0106/COD, April 21, 2021, https://digital-strategy.ec.europa.eu/en/library/proposal-regulation-laying-down-harmonised-rules-artificial-intelligence.] As such, data policies have a significant bearing on an economy’s capacity to take advantage of machine learning.

Supremacy in AI technologies has become a key aspect of strategic competition between China and the United States. President Xi Jinping has made achieving global leadership in AI by 2030 central to building China into a “modern socialist power.”[3: Xi Jinping, Remarks at the Nineteenth National Congress of the Communist Party of China (CCP) on October 18, 2017, full text of report via Xinhua News Agency, China Daily (website of CCP English-language news organization), accessed May 27, 2021, https://www.chinadaily.com.cn/china/19thcpcnationalcongress/2017-11/04/content_34115212.htm.] China’s rapid advances in machine learning have alarmed America’s private sector and national security establishment.[4: Graham Allison and Eric Schmidt, Is China Beating the U.S. to AI Supremacy?, Harvard Kennedy School, 2020, 34.] The Biden administration is looking to shore up America’s national footing to compete with China in an AI arms race.[5: Robert O. Work, Remarks of the Vice Chair, National Security Commission on Artificial Intelligence, Press Briefing on Artificial Intelligence, United States Department of Defense (website), April 9, 2021, https://www.defense.gov/Newsroom/Transcripts/Transcript/Article/2567848/honorable-robert-o-work-vice-chair-national-security-commission-on-artificial-i/.] This competition also extends to setting the global rules and norms that govern AI systems, given their influence on how economies and societies function.[6: Justin Sherman, Essay: Reframing the U.S.-China AI “Arms Race,” New America, March 6, 2019, https://www.newamerica.org/cybersecurity-initiative/reports/essay-reframing-the-us-china-ai-arms-race/.]

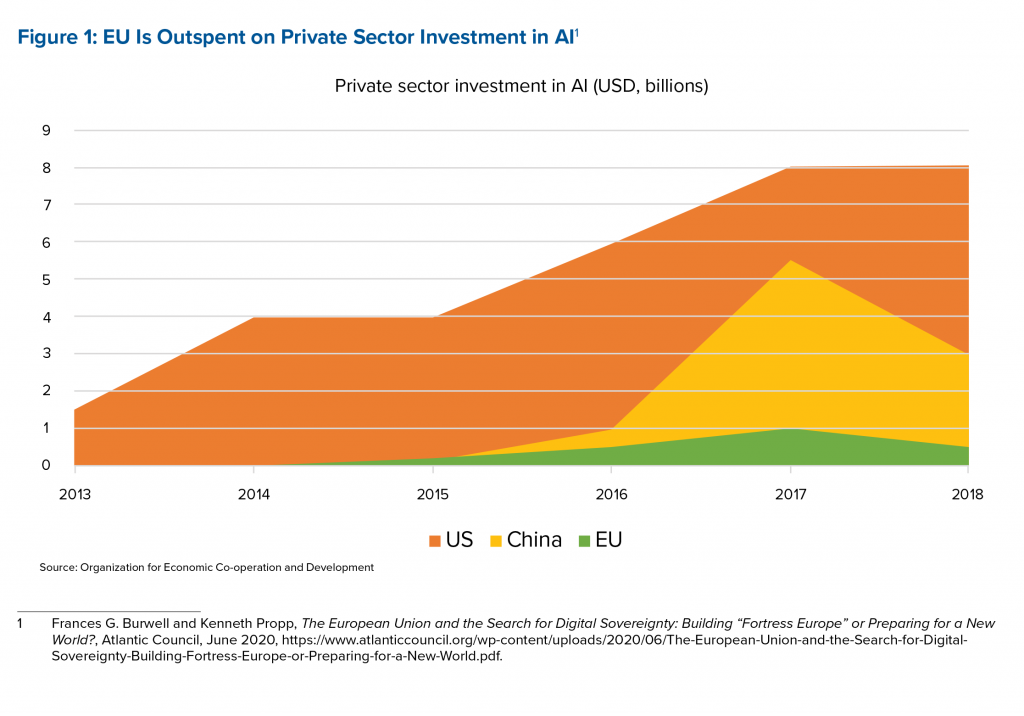

The European Union (EU) already lags behind China and the United States in key AI indicators such as private investment,[7: Frances G. Burwell and Kenneth Propp, The European Union and the Search for Digital Sovereignty: Building “Fortress Europe” or Preparing for a New World?, Atlantic Council, June 2020, https://www.atlanticcouncil.org/wp-content/uploads/2020/06/The-European-Union-and-the-Search-for-Digital-Sovereignty-Building-Fortress-Europe-or-Preparing-for-a-New-World.pdf.] patent filings,[8: World Intellectual Property Organization, “Technology Trends 2019 Executive Summary: Artificial Intelligence,” accessed May 27, 2021, https://www.wipo.int/edocs/pubdocs/en/wipo_pub_1055_exec_summary.pdf.] and data market growth.[9: “The European Data Market Study Update,” European Commission (website), accessed 2021, https://digital-strategy.ec.europa.eu/en/library/european-data-market-study-update.] As China and the United States move to position their laws, bureaucratic structures, and public resources to accelerate the development and deployment of AI technologies,[10: The White House, “Fact Sheet: The American Jobs Plan,” White House (Briefing Room website), March 31, 2021, https://www.whitehouse.gov/briefing-room/statements-releases/2021/03/31/fact-sheet-the-american-jobs-plan/; and Xi, Remarks at the Nineteenth National Congress of the CCP.] the EU faces the added challenge of coordinating the same effort across its twenty-seven member states.

Aware of this challenge, the European Commission has made “a Europe fit for the digital age” a priority to achieve by 2024.[11: European Commission, “6 Commission Priorities for 2019-24,” European Commission (website), https://ec.europa.eu/info/strategy/priorities-2019-2024_en.] It is pursuing a “third way” between China and the United States in shaping its digital future, particularly when it comes to the use of data and AI.[12: Luis Viegas Cardoso, “Panel Discussion during AI, China, and the Global Quest for Digital Sovereignty – Report Launch,” GeoTech Center, Atlantic Council, January 13, 2021, https://www.atlanticcouncil.org/blogs/geotech-cues/event-recap-the-global-quest-for-digital-sovereignty-report-launch/.] This has included a raft of legislative proposals, with more in the pipeline, informed by the commission’s European Strategy for Data and its White Paper on Artificial Intelligence, both released in 2020.[13: European Commission, European Strategy for Data, February 19, 2020, https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:52020DC0066&from=EN; and European Commission, White Paper on Artificial Intelligence: A European Approach to Excellence and Trust, 2020, https://ec.europa.eu/info/sites/default/files/commission-white-paper-artificial-intelligence-feb2020_en.pdf.] Those proposals grapple with balancing the economic benefits of those technologies against the need to minimize security threats and protect the public from harm, including by preserving individual rights such as privacy and nondiscrimination.[14: Article 2 of the Treaty on European Union states that the Union is “founded on the values of respect for human dignity, freedom, democracy, equality, rule of law and respect for human rights”; see Consolidated Version of the Treaty of the European Union, Official Journal of the European Union, 2016/C 202/01 (2016); 20, https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:12016ME/TXT&from=EN. These values were reinforced by the Treaty of Lisbon with an explicit reference to the Charter of Fundamental Rights of the European Union in Article 6(1); see European Parliament, “The Treaty of Lisbon, Fact Sheets on the European Union,” European Parliament (website), accessed August 5, 2021, https://www.europarl.europa.eu/factsheets/en/sheet/5/the-treaty-of-lisbon. The charter recognizes the right to respect for private and family life (Article 7), protection of personal data (Article 8), and nondiscrimination (Article 21) among other rights; see Charter of Fundamental Rights of the European Union, Official Journal of the European Union (June 7, 2016), https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:12016P/TXT&from=EN.]

With the digital economy predicted to be valued at $11 trillion by 2025 on the one hand,[15: James Manyika et al., “Unlocking the Potential of the Internet of Things,” Executive Summary, McKinsey Global Institute, June 1, 2015.] and the risks machine learning can pose to fundamental rights and European autonomy on the other, the cost of getting that balance wrong is high. The European Commission will need to walk a fine line between the legitimate interests of businesses, citizens, and national governments, capturing the economic benefits of machine learning while limiting its negative social impacts. Doing so will place the EU in a strong position to shape global rules at the intersection of technology and society so that they more closely reflect European systems and values.

Given the crucial role data plays in developing innovative AI systems, this report focuses on data governance in the context of machine learning. It provides European decision makers with a set of actions to unlock the potential of AI while mitigating the risks. Developed at the request of the Atlantic Council, a nonpartisan organization based in Washington, DC, it examines the geopolitical considerations of the EU in the context of competition with China and the United States; the domestic considerations of EU member states, including societal interests and the protection of individual rights; and the commercial considerations of EU-based businesses.

The report builds on the existing regulatory initiatives of the European Commission and attempts to lay out key considerations of the EU, the member states, and businesses. In doing so, we believe it captures the dominant features of the current policy debate in Europe to help navigate the complex equities at stake in developing data rules. That said, framing the issue of data governance around international political and economic competition can result in lines of reasoning that pay less consideration to important societal interests and can trivialize complex and multidimensional issues, such as social equity and individual rights. Therefore, we devoted the last section of this report to the protection of fundamental rights, with two chapters focused on these issues. We also have attempted to flag these cases in other parts of the report when they arise in our analysis.

Summary of recommendations

Leverage the scale of Europe

Addressing fragmented open data rules and procedures across Europe and harmonizing technical data standards would increase the quantity of data available for innovation and reduce the complexity and cost of preparing data for machine learning.

Therefore, the European Commission should:

1.1A Establish an EU data service under legislation creating common data spaces to facilitate access to public-sector data in consultation with national data protection authorities by:

i. Serving as a single window to grant access to data sets spanning multiple jurisdictions under a single or compatible format and license.

ii. Making recommendations to European public bodies on conditional access to sensitive data in consultation with national data authorities.

1.2A Establish an EU technical data standards registry to increase transparency and facilitate sharing of various standards used by EU-based organizations and help identify opportunities to increase interoperability.

1.2B Establish sector-specific expert committees to recommend preferred technical data standards, including:

i. Standard data models that enable the translation of differently structured data across systems and organizations.

ii. Standard application programming interfaces (APIs) that enable apps and databases to seamlessly exchange data.

iii. Free and open-source data-management software that embeds sector-specific standards.
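To make the idea of a standard data model more concrete, the sketch below translates two differently structured records of the same measurement into one shared structure. It is purely illustrative: the `Observation` fields, the two source layouts (`from_org_a`, `from_org_b`), and the unit label are hypothetical assumptions, not an existing sector standard, which would be far richer and agreed by expert committees.

```python
"""Illustrative sketch: a shared data model lets differently structured records interoperate.

Assumptions (all hypothetical): two organizations store the same patient
observation in different layouts; both are translated into one common
dataclass. Real sector standards define far richer models.
"""
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Observation:
    """Hypothetical common data model agreed across organizations."""
    patient_id: str
    measurement: str
    value: float
    unit: str
    observed_on: date


def from_org_a(row: dict) -> Observation:
    """Org A (hypothetical): flat row, temperature in Celsius, ISO date string."""
    return Observation(
        patient_id=row["pid"],
        measurement="body_temperature",
        value=float(row["temp_c"]),
        unit="celsius",
        observed_on=date.fromisoformat(row["date"]),
    )


def from_org_b(record: dict) -> Observation:
    """Org B (hypothetical): nested record, temperature in Fahrenheit, day/month/year date."""
    day, month, year = (int(part) for part in record["visit"]["when"].split("/"))
    fahrenheit = float(record["vitals"]["temperature_f"])
    return Observation(
        patient_id=record["patient"]["id"],
        measurement="body_temperature",
        value=round((fahrenheit - 32.0) * 5.0 / 9.0, 1),  # convert to the shared unit
        unit="celsius",
        observed_on=date(year, month, day),
    )


if __name__ == "__main__":
    a = from_org_a({"pid": "A-17", "temp_c": "38.5", "date": "2021-03-01"})
    b = from_org_b({"patient": {"id": "B-04"},
                    "vitals": {"temperature_f": 101.3},
                    "visit": {"when": "01/03/2021"}})
    # Both records now share one structure and unit, so they can be pooled.
    print(a)
    print(b)
```

The design choice here is that each organization keeps its own internal format and only a small translation function per source is needed, which is roughly the interoperability benefit a common model is meant to deliver.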

Balance economic autonomy and openness

By promoting diversification in cloud data storage and processing services, the EU can mitigate vulnerabilities to disruptions and coercion. However, it also needs to maintain competitive cloud offerings for European businesses and avoid succumbing to calls for protectionist measures. Simplifying rules and minimizing legal ambiguity around data transfers can also lower the risk to European businesses of being excluded from global value chains, as can removing anticompetitive barriers to accessing data while maintaining incentives for businesses to invest in high-quality data sets.

Therefore, the European Commission should:

2.1A Enhance the implementation of the European Alliance for Industrial Data, Edge and Cloud by seeking additional investments from participating cloud computing providers and industrial cloud users.

2.1B Ensure coordination between the European Alliance for Industrial Data, Edge and Cloud and parallel initiatives and ensure that the emerging legislative proposals do not impose significant regulatory burdens on cloud service providers that could impact the competitiveness of their offerings.

2.2A Clarify legal ambiguity on liability and ownership when transferring data, including in the General Product Safety Directive, Product Liability Directive, and in planned legislation on sector-specific common data spaces.

2.2B Prioritize data protection adequacy negotiations with global innovation hubs that share European values on the protection of personal data to enable the transfer of data outside the EU, including exploring solutions with the United States following the Schrems II decision.

2.3A In planned sector-specific “common data spaces” legislation, adopt sector-specific data portability rights for customer data (personal and nonpersonal) that consider the distinct data access problems at an industry level.

2.3B In planned sector-specific common data spaces legislation, adopt sector-specific data access obligations for platforms and dominant companies to share data with other businesses that consider the business models, public interest, and economic sensitivities of each sector.

2.3C Establish a consultative mechanism involving industry, national standards organizations, the proposed European Data Innovation Board, and competition authorities to periodically review the suitability of sectoral data-access rules as technologies and market structures change.

Protect fundamental rights

The EU can protect fundamental rights, including privacy, while enabling data use for innovation. It can do so by decreasing the compliance costs and legal uncertainty of data protection and by investing in research on machine learning techniques that reduce the need for large pools of personal data. The EU can also mitigate data-driven discrimination through machine learning by addressing gaps in data protection and antidiscrimination laws and strengthening enforcement capabilities.

Therefore, the European Commission should:

3.1A Coordinate with the European Data Protection Board and national data-protection authorities to provide more detailed guidance and assistance on applying the General Data Protection Regulation (GDPR) to machine learning applications to enhance compliance and mitigate business costs.

3.1B Enhance investment in research in privacy-preserving techniques that can adequately protect personal data while enabling machine learning development, including in partnership with like-minded countries such as the United States.

3.2A Work with the European Parliament and member states on providing more specific requirements in the new EU AI legislation for data-management standards to mitigate risks of discrimination. These should focus on greater transparency in access to AI systems documentation and ensuring that data requirements also address cases where the data sets could embed and amplify discrimination.

3.2B Provide sufficient financial and human resources to national data-protection authorities and other relevant bodies that investigate antidiscrimination violations and carefully define and coordinate the establishment of new enforcement authorities with the existing ones.

3.2C Increase transatlantic cooperation to close the gap between US and EU approaches to mitigating risks of bias and discrimination enabled by data in machine learning through the EU-US Trade and Technology Council.

Introduction

Machine learning is changing the way public- and private-sector organizations operate, make decisions, develop products, and deliver services. It is already a big part of people’s daily lives, with a rising number of applications emerging across society from healthcare to transportation. As the technology develops, societies at the forefront of machine learning will become more innovative and their industries more competitive.[16: James Manyika et al., “Unlocking the Potential of the Internet of Things,” McKinsey Global Institute, June 1, 2015.] Those that fall behind risk moving down the value chain and taking a smaller slice of the global economy. However, those that do not manage data rules and machine learning applications with care risk undermining individual rights and exacerbating existing social problems.

As a major subset of artificial intelligence (AI), machine learning is a powerful tool for analyzing very large data sets and spotting patterns in that data.[17: Ben Buchanan and Taylor Miller, Machine Learning for Policymakers, White Paper, Harvard Kennedy School’s Belfer Center, 2017, https://www.belfercenter.org/sites/default/files/files/publication/MachineLearningforPolicymakers.pdf.] It has two key ingredients: algorithms that learn and data to train machine learning systems. Self-learning machines take each additional data point and incorporate the new information to make more accurate predictions. To make very precise predictions, like diagnosing a disease from an eye scan, machine learning requires very large quantities of reliable data, in this case, accurately labeled eye scans.
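The idea that a model adapts with each additional data point can be illustrated with a minimal sketch, assuming a one-feature linear model trained by stochastic gradient descent. The class name, the learning rate, and the synthetic rule y = 2x + 1 are hypothetical stand-ins chosen for illustration; an eye-scan classifier would use a far larger model trained on real labeled images.

```python
"""Illustrative sketch: a model that updates itself one data point at a time.

Assumption: a one-feature linear model trained with stochastic gradient
descent (SGD) stands in for "self-learning machines"; real systems such as
eye-scan classifiers use far larger models and labeled data sets.
"""
import random


class OnlineLinearModel:
    """Predicts y = w * x + b and adjusts w and b after every labeled example."""

    def __init__(self, learning_rate: float = 0.05) -> None:
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> float:
        """Take one gradient step on squared error for a single example.

        Returns the absolute error made *before* the update.
        """
        error = self.predict(x) - y
        # Gradient of 0.5 * error**2 is error * x for w and error for b.
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error
        return abs(error)


def make_example(rng: random.Random) -> tuple[float, float]:
    """Hypothetical ground truth y = 2x + 1 without noise, x drawn from [0, 1)."""
    x = rng.random()
    return x, 2.0 * x + 1.0


if __name__ == "__main__":
    rng = random.Random(0)
    model = OnlineLinearModel()
    errors = [model.update(*make_example(rng)) for _ in range(5000)]
    print(f"mean error, first 100 examples: {sum(errors[:100]) / 100:.3f}")
    print(f"mean error, last 100 examples:  {sum(errors[-100:]) / 100:.3f}")
    print(f"learned w={model.w:.2f}, b={model.b:.2f}")
```

Running it should show the average error over the last 100 examples well below that of the first 100, which is the narrow sense in which "more data yields more accurate predictions" here. Because the synthetic data contains no noise or labeling errors, the sketch also hides the data-quality problems that real training sets raise.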

Rules that govern data have a significant bearing on an economy’s capacity to take advantage of machine learning. Industries that can more easily exploit large quantities of high-quality data will be better able to take advantage of the technology. Autonomous vehicles are a good example of an emerging disruptive innovation that depends on gathering high-quality data to feed algorithms. When large amounts of data are available, data scientists can train autonomous vehicles to be less likely to have a collision. However, the quality of these data sets erodes when data is missing, incomplete, inaccurate, duplicated, or dated.[18: Venkat N. Gudivada, Amy Apon, and Junhua Ding, “Data Quality Considerations for Big Data and Machine Learning: Going Beyond Data Cleaning and Transformations,” International Journal on Advances in Software 10, no. 1 (2017): 20.]
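One way to make the five data-quality problems listed above operational is a simple audit that counts affected records. This is a hypothetical sketch, not a method from the cited study: the record fields, the plausible-speed bound, the treatment of an empty record as "missing," and the cutoff date for "dated" are all assumptions chosen for illustration.

```python
"""Illustrative sketch: flagging the data-quality problems named in the text.

Assumptions (all hypothetical): each record is a dict describing one labeled
driving-sensor reading with the fields below; "inaccurate" is approximated by
a plausible speed range; "dated" means older than a chosen cutoff date.
"""
from collections import Counter
from datetime import date

REQUIRED_FIELDS = ("record_id", "speed_kmh", "label", "recorded_on")
MAX_PLAUSIBLE_SPEED_KMH = 300.0  # hypothetical sanity bound


def quality_report(records: list[dict], cutoff: date) -> Counter:
    """Count records affected by each data-quality problem."""
    report: Counter = Counter()
    seen_ids: set = set()
    for record in records:
        if not record:
            # Hypothetical rule: an empty record stands in for a missing data point.
            report["missing"] += 1
            continue
        if any(record.get(field) is None for field in REQUIRED_FIELDS):
            report["incomplete"] += 1
            continue  # the remaining checks need every field
        if not 0.0 <= record["speed_kmh"] <= MAX_PLAUSIBLE_SPEED_KMH:
            report["inaccurate"] += 1
        if record["record_id"] in seen_ids:
            report["duplicated"] += 1
        seen_ids.add(record["record_id"])
        if record["recorded_on"] < cutoff:
            report["dated"] += 1
    return report


if __name__ == "__main__":
    sample = [
        {"record_id": 1, "speed_kmh": 48.0, "label": "pedestrian", "recorded_on": date(2021, 3, 1)},
        {"record_id": 1, "speed_kmh": 48.0, "label": "pedestrian", "recorded_on": date(2021, 3, 1)},
        {"record_id": 2, "speed_kmh": None, "label": "cyclist", "recorded_on": date(2021, 3, 2)},
        {"record_id": 3, "speed_kmh": 950.0, "label": "car", "recorded_on": date(2021, 3, 3)},
        {"record_id": 4, "speed_kmh": 30.0, "label": "car", "recorded_on": date(2012, 6, 1)},
        {},
    ]
    print(quality_report(sample, cutoff=date(2018, 1, 1)))
```

A record can be counted under more than one problem (for example, both duplicated and dated), while an incomplete record is counted once and skipped, since the other checks need every field.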

Importantly, data rules also have a significant bearing on society and individual rights. Rules that govern who can access, collect, use, and store data (such as who you are, where you go, what you say or do and with whom, the products you buy, or the maladies you suffer) dictate who gets to surveil your life and on what grounds.[19: Louis Menand, “Why Do We Care So Much About Privacy?,” New Yorker, June 11, 2018, https://www.newyorker.com/magazine/2018/06/18/why-do-we-care-so-much-about-privacy.] As such, data rules can have significant implications for rights widely viewed in Europe as fundamental to freedom and equality, such as the right to respect for private life, the protection of personal data, and the right to nondiscrimination.[20: European Union, Charter of Fundamental Rights of the European Union, Official Journal of the European Union.]

Given the economic, social, and ideological implications, data rules can exert geopolitical influence on how economies and societies function. Whichever rules other countries adopt most widely will determine whose economic, social, and ideological systems dominate global digital norms. For example, data rules shape how open an economy is to international trade and foreign investment,[21: Magnus Rentzhog and Henrik Jonströmer, No Transfer, No Trade: The Importance of Cross-Border Data Transfers for Companies Based in Sweden, Kommerskollegium (Sweden’s National Board of Trade), January 2014, https://www.kommerskollegium.se/globalassets/publikationer/rapporter/2016-och-aldre/no_transfer_no_trade_webb.pdf.] how free a society is from repression,[22: Adrian Shahbaz, “The Rise of Digital Authoritarianism,” Freedom House (website), 2018, https://freedomhouse.org/report/freedom-net/2018/rise-digital-authoritarianism.] and how well individuals are protected from harm.[23: European Union Agency for Fundamental Rights, Getting the Future Right: Artificial Intelligence and Fundamental Rights, Publications Office of the European Union, 2020, doi:10.2811/774118.]

Leading the development of frontier technologies has become central to the intensifying rivalry between China and the United States, and AI is a key aspect of that competition.[24: Robert O. Work, Remarks of the Vice Chair, National Security Commission on Artificial Intelligence, Press Briefing on Artificial Intelligence, United States Department of Defense (website), April 9, 2021, https://www.defense.gov/Newsroom/Transcripts/Transcript/Article/2567848/honorable-robert-o-work-vice-chair-national-security-commission-on-artificial-i/.] Chinese leaders believe that being at the forefront of AI technology is critical for China’s economic and national security.[25: Gregory C. Allen, “Understanding China’s AI Strategy,” Center for a New American Security, accessed May 27, 2021, https://www.cnas.org/publications/reports/understanding-chinas-ai-strategy.] President Xi Jinping has outlined policies for China to be the world’s leading AI power by 2030.[26: Ryan Hass and Zach Balin, “US-China Relations in the Age of Artificial Intelligence,” Brookings Institution, January 10, 2019, https://www.brookings.edu/research/us-china-relations-in-the-age-of-artificial-intelligence/.] These have included designating national champions, each focused on a different application of machine learning such as facial recognition or language processing, as well as incentives for start-up investments and patent applications in AI technologies.[27: Allison and Schmidt, Is China Beating the U.S. to AI Supremacy?, Harvard Kennedy School, 5.] China now outpaces the United States in AI start-up investment, patents, and performance in international AI competitions.[28: Allison and Schmidt, Is China Beating the U.S. to AI Supremacy?, 5–6.] In 2020, China’s share of global AI journal citations was higher than that of the United States for the first time.[29: Daniel Zhang, Saurabh Mishra, Erik Brynjolfsson, John Etchemendy, Deep Ganguli, Barbara Grosz, Terah Lyons, James Manyika, Juan Carlos Niebles, Michael Sellitto, Yoav Shoham, Jack Clark, and Raymond Perrault, The AI Index 2021 Annual Report, AI Index Steering Committee, Human-Centered AI Institute, Stanford University, March 2021, https://aiindex.stanford.edu/wp-content/uploads/2021/03/2021-AI-Index-Report_Master.pdf.]

In the United States, which has been leading the world in AI,[30: Daniel Castro and Michael McLaughlin, Who Is Winning the AI Race: China, The EU, or the United States? 2021 Update, Center for Data Innovation, January 2021, 49, https://www2.datainnovation.org/2021-china-eu-us-ai.pdf.] there is bipartisan support in Congress to approve the largest boost to R&D funding in a quarter century,[31: John D. McKinnon, “House Passes Bipartisan Bill to Boost Scientific Competitiveness, Following Senate,” Wall Street Journal, June 29, 2021, https://www.wsj.com/articles/house-passes-bipartisan-bill-to-boost-scientific-competitiveness-following-senate-11624941848.] with specific reference to maintaining leadership in AI technologies.[32: The White House, “Fact Sheet: The American Jobs Plan.”] The 2021 National Security Commission on Artificial Intelligence Final Report has also called for a new whole-of-government organizational structure to coordinate, incentivize, and resource the accelerated wide-scale adoption of AI technologies in the United States, with the goal of positioning the nation to compete with China.[33: Robert O. Work, Remarks of the Vice Chair, National Security Commission on Artificial Intelligence.] The EU is falling behind China and the United States in machine learning.[34: Castro and McLaughlin, Who Is Winning the AI Race.] One telling indicator is the level of private-sector investment in AI, which is much larger in China and the United States than in the EU, as shown in figure 1.

The key challenges the EU faces include the complexity of coordinating data rules and standards among twenty-seven member states and the fragmented implementation of those rules at the national and subnational levels. Higher public expectations around privacy and data protection also mean the EU must proceed with greater care in making data available for businesses and researchers. This may slow the development of machine learning applications in the shorter term, though it could create a more sustainable approach that places European innovation on stronger footing in the longer term.[35: Tim Hwang, Shaping the Terrain of AI Competition, Center for Security and Emerging Technology (CSET), Georgetown University’s Walsh School of Foreign Service, June 2020, 17–18, https://cset.georgetown.edu/research/shaping-the-terrain-of-ai-competition/.]

In comparison, China and the United States both have larger, more homogeneous data ecosystems that data scientists can access more easily, which better facilitates the training of machine learning algorithms. As a result, the US data market is three times the size of the EU’s[36: Data markets are where digital data is exchanged as “products” or “services” as a result of the elaboration of raw data.] and is growing at twice the rate.[37: “The European Data Market Study Update,” European Commission (website).] China’s data market is larger still and growing at three times the rate of the EU’s.[38: Long Zhang, “China’s Big Data Market to Continue Expansion,” Xinhua News Service, March 14, 2021, https://www.shine.cn/biz/economy/2103145919/.] On its current trajectory, the EU will fall even further behind China and the United States, potentially leaving it dependent on foreign supplies of AI technologies trained on data from outside Europe.

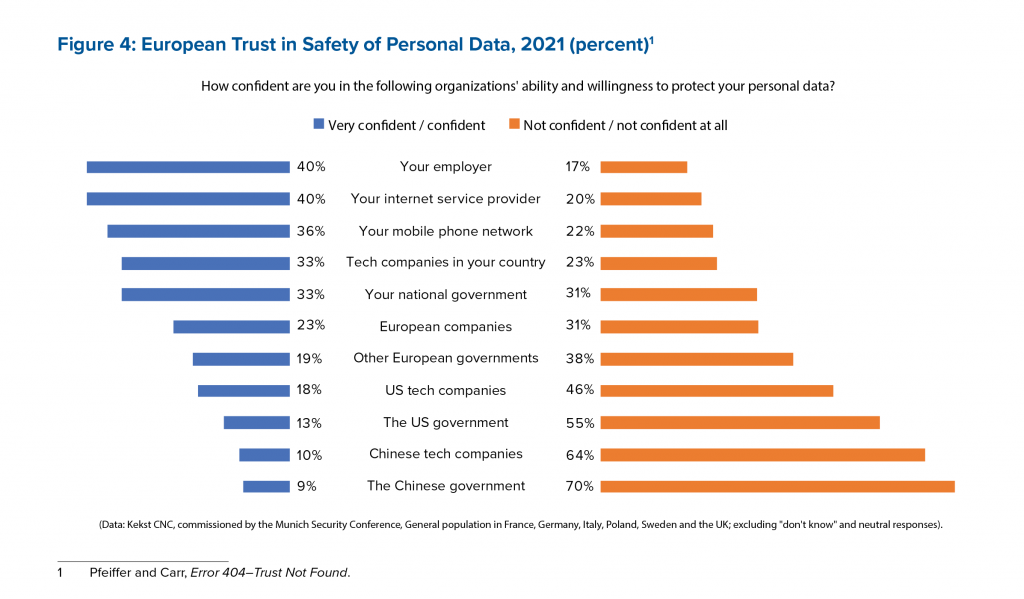

In an effort to compete globally, the EU is grappling with data policies that strike the right balance between innovation, security, and the protection of fundamental European rights. Privacy and security concerns in Europe have already undermined public trust in foreign governments and private companies. These concerns are amplified by the fact that Europe’s data infrastructure is largely controlled by a few foreign suppliers, by Washington’s ability to impose sanctions that undermine the policies of European countries,[39: Ellie Geranmayeh and Manuel Lafont Rapnouil, “Meeting the Challenge of Secondary Sanctions,” Policy Brief, European Council on Foreign Relations, June 25, 2019, https://ecfr.eu/publication/meeting_the_challenge_of_secondary_sanctions.] and by China’s increasing tendency to deploy economic coercion to assert its national interests.[40: James Laurenceson, “Will the Five Eyes Stare Down China’s Economic Coercion?,” Lowy Institute, accessed July 8, 2021, https://www.lowyinstitute.org/the-interpreter/will-five-eyes-stare-down-china-s-economic-coercion.] Many Europeans are also uneasy about the potential for machine learning to exacerbate discrimination in society.[41: Kantar, “Standard Eurobarometer 92: Report on Europeans and Artificial Intelligence,” Kantar (consulting company), November 2019, 19, https://europa.eu/eurobarometer/api/deliverable/download/file?deliverableId=72485.] This is because data may reflect existing structural inequalities and the realities of an unjust society, which can become embedded in AI systems and exacerbate discriminatory outcomes.[42: Genevieve Smith and Ishita Rustagi, Mitigating Bias in Artificial Intelligence: An Equity Fluent Leadership Playbook, Berkeley Haas Center for Equity, Gender and Leadership, July 2020, 23, https://haas.berkeley.edu/wp-content/uploads/UCB_Playbook_R10_V2_spreads2.pdf.] Another concern is that inadequate data protections at businesses outside the EU have allowed countries such as Russia to exploit those weaknesses for political purposes, targeting disinformation campaigns and influencing elections in Europe.[43: Francesca Bignami, “Schrems II: The Right to Privacy and the New Illiberalism,” Verfassungsblog, accessed April 20, 2021, https://verfassungsblog.de/schrems-ii-the-right-to-privacy-and-the-new-illiberalism/.] Reconciling these concerns with the benefits of data access and reuse for machine learning will be an ongoing challenge for the EU.[44: Hwang, Shaping the Terrain, 16–18.]

There is a risk that if the EU fails to resolve these tensions through data rules that foster innovation, businesses could face higher costs to deploy machine learning, weakening their ability to compete in a global market. The resulting lack of European innovation could reinforce the EU’s dependence on foreign digital technologies, with local industries taking a smaller slice of the global value chain. However, there is also a risk that the EU implements short-term, innovation-driven policies that embed economic systems that fail to protect fundamental European rights, exacerbate social inequalities, and threaten democratic institutions and processes.[45: Hwang, Shaping the Terrain, 13–15.] If the EU cannot get this balance right, its inability to present an effective regulatory model will undermine its ability to shape global rules at the intersection of technology and society.

Aware of these costs, the European Commission has made “a Europe fit for the digital age” a priority to achieve by 2024.[46: European Commission, “6 Commission Priorities for 2019-24,” European Commission website, https://ec.europa.eu/info/strategy/priorities-2019-2024_en.] The commission has introduced a raft of legislative proposals, with more in the pipeline, informed by its European Strategy for Data and White Paper on Artificial Intelligence, both released in 2020. These include the Data Governance Act proposal introduced in November 2020[47: European Commission, Proposal for a Regulation on European Data Governance (Data Governance Act), November 25, 2020, https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:52020PC0767&from=EN.] and the Artificial Intelligence Act proposal introduced in April 2021.[48: European Commission sources: Artificial Intelligence Act, April 21, 2021, https://eur-lex.europa.eu/resource.html?uri=cellar:e0649735-a372-11eb-9585-01aa75ed71a1.0001.02/DOC_1&format=PDF; European Strategy for Data, February 19, 2020, https://ec.europa.eu/info/sites/info/files/communication-european-strategy-data-19feb2020_en.pdf; and White Paper on Artificial Intelligence: A European Approach to Excellence and Trust, 2020, https://ec.europa.eu/info/sites/default/files/commission-white-paper-artificial-intelligence-feb2020_en.pdf.] The EU has also allocated €143.4 billion ($166.25 billion) to its “single market, innovation, and digital” budget heading for 2021 to 2027.[49: European Commission, “EU’s Next Long-Term Budget & NextGenerationEU: Key Facts and Figures,” November 11, 2020, accessed July 31, 2021, https://ec.europa.eu/info/sites/default/files/about_the_european_commission/eu_budget/mff_factsheet_agreement_en_web_20.11.pdf.] In pursuing this agenda, the commission needs to balance stimulating innovation with mitigating the risks machine learning can pose to fundamental rights, European autonomy, and society. This report proposes a path forward for the EU against the backdrop of rapid European rulemaking and intensifying competition between China and the United States in data and machine learning.

Our approach examines the geopolitical considerations of the EU; the domestic considerations of EU member states, including societal interests and the protection of individual rights; and the commercial considerations of EU-based businesses. It makes recommendations designed to navigate the complex equities at stake. While our research takes a broad view, we drew on specific examples concerning autonomous vehicles and healthcare to demonstrate the benefits and risks to society posed by the deployment of machine learning. We similarly drew on examples from four countries (Finland, France, Germany, and Poland) to highlight the diversity of priorities, concerns, and approaches among EU member states.

The report proposes three broad objectives for the EU to pursue in developing data rules for machine learning:

- leverage the scale of Europe

- balance economic autonomy and openness

- protect fundamental rights

Under these categories, we recommend fifteen specific actions for the European Commission.

It is important to note that framing the issue of data governance around EU competition with the United States and China can lend itself to lines of argument that downplay the importance of protecting the rights of individuals to control their own data and the social implications of data-driven decision making based on machine learning systems. To make the analysis and recommendations more easily digestible, they are separated into seven chapters under three themes; however, all the sections are strongly interconnected and are not designed to be read in isolation from one another. We have attempted to flag in the analysis the cases in which lines of reasoning risk downplaying important social interests, which can be complex and multidimensional, and have linked them to other parts of the report that discuss those issues in more detail.

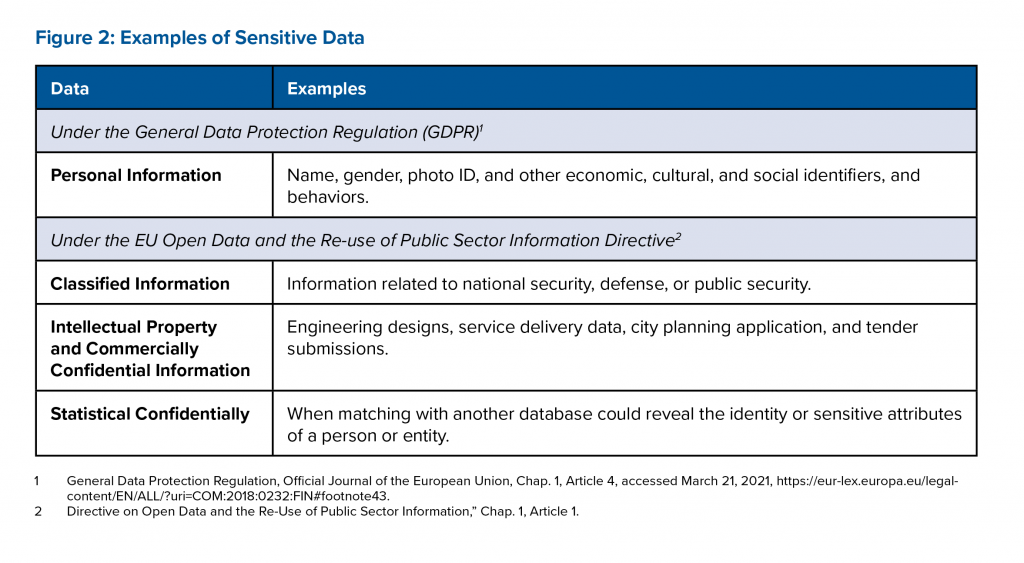

Part one: Leverage the scale of Europe

1.1 Increase access to public-sector data

Public-sector data has significant potential for commercial and research purposes, including the development of new products and services with machine learning.50“Open Data,” Shaping Europe’s Digital Future (webpages), European Commission, March 10, 2021, https://digital-strategy.ec.europa.eu/en/policies/open-data-0. The scale of the EU single market offers opportunities to pool data resources, the reuse of which could contribute up to €194 billion to the EU economy by 2030.51“From the Public Sector Information (PSI) Directive to the Open Data Directive,” Shaping Europe’s Digital Future (webpages), European Commission, March 10, 2021, https://digital-strategy.ec.europa.eu/en/policies/psi-open-data. However, if it is to innovate on par with China and the United States, the EU needs to reduce the complexity of navigating fragmented open data rules and procedures across Europe and, with appropriate privacy-preserving techniques and protections in place, enable limited access to sensitive data.52Sensitive data can include personal data described under the General Data Protection Regulation, as well as intellectual property, and information that is commercially confidential, statistically confidential, or relates to national security under the Open Data and the Reuse of Public Sector Information Directive.

To achieve this, the European Commission should establish an EU data service that facilitates access to publicly held data, helping to reduce the administrative and legal complexity for businesses seeking to reuse nonsensitive public-sector data. The service could also work with national data-protection authorities and public bodies to make sensitive data more widely available for research and development purposes under adequate security and privacy-preserving protections that meet data-protection requirements, such as the GDPR.

This chapter should be read in conjunction with chapter 2.3 on data ecosystems, which looks at the implications of data sharing among businesses, and chapter 3.1 on privacy and trust, which focuses more closely on the risks to individuals.

EU policy context

There are fragmented rules and processes on accessing and using public-sector data in Europe. The EU’s 2019 Directive on Open Data and the Re-Use of Public Sector Information updated the minimum standard for public bodies to make nonsensitive data accessible to the public.53Directive on Open Data and the Re-Use of Public Sector Information, Official Journal of the European Union 62, no. L172 (June 26, 2019): 56–83, https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=OJ:L:2019:172:FULL&from=EN.

However, while the directive strengthens language against imposing conditions on the reuse of data unless justified on public-interest grounds, it does not require the use of standard licenses that make it easier for organizations to use and combine data sets.54Directive on Open Data and the Re-Use of Public Sector Information. The directive also implies that those seeking EU-wide data sets need to approach each public-sector body individually. Furthermore, it does not clarify the conditions for accessing sensitive data (see figure 2 for examples of sensitive data). The commission’s proposed Data Governance Act seeks to address this gap by clarifying obligations for the public sector to share sensitive data that falls outside the scope of the Open Data Directive.55European Commission, Proposal for a Regulation on European Data Governance (Data Governance Act). The commission has also foreshadowed sectoral legislation to create “common data spaces” to make public and private data more widely available.56European Commission, “A European Strategy for Data,” Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee, and the Committee of the Regions, COM(2020) 66, February 19, 2020, https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:52020DC0066&from=EN. It envisages that these common data spaces will provide nondiscriminatory access to high-quality data among businesses and between businesses and government.57European Commission, Proposal for a Regulation Laying down Harmonised Rules, 29.

Geopolitical considerations

Barriers to accessing public-sector data put Europe at a disadvantage vis-à-vis China and the United States. Charles Michel, president of the European Council, has described the current unavailability and fragmentation of open data policies as the “weak point” of the European market.58Charles Michel, keynote address, Masters of Digital 2021, Digital Europe (trade association summit), February 3, 2021, https://www.youtube.com/watch?v=TR0p_9Ii0U8. Compared to their EU counterparts, Chinese organizations can extract vast amounts of data from users owing to fewer and laxer privacy laws.59Hwang, Shaping the Terrain. The US private sector has amassed troves of data drawn from its dominant position in most sectors in the United States and globally.60Matt Sheehan, “Much Ado About Data: How America and China Stack Up,” MacroPolo, July 16, 2019, https://macropolo.org/ai-data-us-china/. For example, in a conversation with us, Marc Lange and Luc Nicolas of the European Health Telematics Association (EHTEL) noted that US healthcare companies often had direct access to data given the heterogeneity and market orientation of the different health systems, giving US companies greater capacity to deploy machine learning directly in healthcare. They observed that health data in Europe was generally open only to each hospital’s research department, which, given its nonprofit legal status, sought mainly to publish scientific research rather than develop healthcare products and services.61Marc Lange and Luc Nicolas, Interview with the European Health Telematics Association (EHTEL), February 12, 2021. Given these systemic differences, to compete with China and the United States, the EU needs to better enable companies to train machine learning algorithms with data held by the public sector.

National considerations

EU member states have different levels of comfort with allowing access to sensitive data. Member states are concerned about maintaining the trust and confidence of citizens and businesses (see chapter 3.1). For instance, countries where citizens have strong concerns about privacy, like Germany, have until recently been reluctant to enable public access to data in sensitive industries such as healthcare.62Carmen Paun, “Germany Aims to Become EU Leader in Digital Health Revamp,” Politico, October 16, 2019.

On the other hand, countries like Finland and Estonia lead the way in digital health, and health providers already exchange some patient data.63Paun, “Germany Aims to Become EU Leader.” In 2019, the Finnish Act on the Secondary Use of Health and Welfare Data entered into force to facilitate access to social and health data, the first such legislation by an EU member state.64“Secondary Use of Health and Social Data,” Finland’s Ministry of Social Affairs and Health (website), April 16, 2021, https://stm.fi/en/secondary-use-of-health-and-social-data. The act establishes a central data-permit authority for the secondary use of health and welfare data, unifying access procedures and decision making.65Saara Malkamäki and Hannu Hämäläinen, “New Legislation Will Speed up the Use of Finnish Health Data,” Sitra, May 14, 2019, https://www.sitra.fi/en/blogs/new-legislation-will-speed-use-finnish-health-data/. Given these different levels of comfort, the European Commission will need to balance increased data availability against the higher data-protection expectations of some member states.

Business considerations

Low-cost access to multiple public-sector databases can enable businesses to develop new products and services. Reaching the scale and scope needed to train an algorithm may require pooling data from a variety of open data sources. However, EU member states each approach the openness of public data differently,66Directive on Open Data and the Re-Use of Public Sector Information, paragraph 15. meaning businesses need to handle data under different conditions, increasing legal complexity and operational costs.67“Report of License Proliferation Committee and Draft FAQ,” Open Source Initiative (website), accessed April 17, 2021, https://opensource.org/proliferation-report. One example is the proliferation of open data licenses: an estimated ninety different licenses are used across national, regional, and municipal governments in the EU.68Rufus Pollock and Danny Lämmerhirt, “Open Data Around the World: European Union,” State of Open Data (research project funded by the International Research Centre with the support of the Open Data for Development [OD4D] Network), accessed April 17, 2021, https://www.stateofopendata.od4d.net/chapters/regions/european-union.html. If businesses could access and use open data across the EU more simply, it would be easier for them to combine data sets to train machine learning models for new products and services.

Businesses would benefit from simpler procedures to access sensitive data under conditions that maintain data protection. Simple procedures for accessing sensitive data under the right conditions can enable the development of innovative machine learning products and services. For example, Corti is a natural language processing start-up whose software learns from emergency calls to detect sooner and more reliably whether a patient is in cardiac arrest, by listening for key words, background noises, and emotional cues.69Bernard Marr, “AI That Saves Lives: The Chatbot That Can Detect A Heart Attack Using Machine Learning,” accessed April 17, 2021, https://bernardmarr.com/default.asp?contentID=1762. Copenhagen Emergency Health Services shared audio data from hundreds of thousands of emergency calls with Corti to enable machine learning; in trials, the software reduced the number of undetected out-of-hospital cardiac arrests in Copenhagen by 43 percent.70“Corti Website: About,” accessed April 17, 2021, https://www.corti.ai/about. Corti was further piloted in Italy and France, and while meeting GDPR requirements posed significant challenges, it was able to work with emergency services to meet European data-protection requirements.71European Emergency Number Association (EENA), “Detecting Out-of-Hospital Cardiac Arrest Using Artificial Intelligence Protect Report,” January 23, 2020, 14–15, https://eena.org/document/detecting-out-of-hospital-cardiac-arrest-using-artificial-intelligence/. This demonstrates that enabling access to sensitive data with the right protections for privacy (see chapter 3.1) can lead to innovations that benefit consumers and society.

There are significant risks in allowing access to sensitive data, but privacy-preserving machine learning techniques are emerging. Once data is released, it becomes extremely difficult to control who views it and for what purpose, opening opportunities for misuse.72Ari Ezra Waldman, “Privacy Law’s False Promise,” Washington University Law Review 97, no. 3 (2020): 773–834, https://openscholarship.wustl.edu/law_lawreview/vol97/iss3/7. Data scientists are developing privacy-preserving techniques that can still produce accurate machine learning outputs. These range from anonymization, which removes identifying entries, and pseudonymization, which replaces identifiers with artificial ones (though both have been shown to be vulnerable to reidentification73Caroline Perry, SEAS Communications, “You’re Not So Anonymous,” Harvard Gazette, October 18, 2011, https://news.harvard.edu/gazette/story/2011/10/youre-not-so-anonymous/.), to more sophisticated decentralized, differential privacy, and encryption techniques.74For example, federated machine learning is a decentralized approach that distributes the algorithm to where the data is, rather than gathering the data where the algorithm is, removing the need to transfer the data. Another example is differential privacy, which adds carefully calibrated random noise to data or to the results of computations so that the presence or absence of any single individual cannot be reliably inferred, while statistical analysis of the data set as a whole remains accurate. Homomorphic encryption, which allows computation on encrypted data as if it were unencrypted, is another approach, albeit technically challenging. See Georgios A. Kaissis et al., “Secure, Privacy-Preserving and Federated Machine Learning in Medical Imaging,” Nature Machine Intelligence 2, no. 6 (June 2020): 305–11, https://doi.org/10.1038/s42256-020-0186-1.
Applying these privacy-preserving techniques as a condition to access to sensitive public data could provide opportunities for researchers to develop and deploy beneficial machine learning applications while protecting sensitive data.
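One common way to implement differential privacy is the Laplace mechanism: random noise is added to a query result, scaled to how much any one person’s record could change that result. The sketch below applies it to a simple count, such as how many patients in a data set had a given condition. It is an illustration under stated assumptions, not a production implementation; the function names, the toy records, and the choice of epsilon are all hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential draws with mean `scale`
    is Laplace-distributed with location 0 and that same scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return an epsilon-differentially private count of matching records.

    Adding or removing one person's record changes a count by at most 1
    (sensitivity = 1), so Laplace noise with scale 1/epsilon suffices
    for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical toy records: (patient_id, has_condition)
records = [(i, i % 7 == 0) for i in range(1000)]
rng = random.Random(42)  # fixed seed only so the example is reproducible
noisy = private_count(records, lambda r: r[1], epsilon=0.5, rng=rng)
print(f"noisy count: {noisy:.1f}")
```

A smaller epsilon adds more noise and gives stronger privacy; a researcher still learns the approximate total, but not whether any particular patient is in the data. Each additional query on the same data consumes part of the overall privacy budget, which is one reason access conditions for sensitive data matter.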

Recommendations for the European Commission

A. Establish an EU data service under legislation creating common data spaces to facilitate access to public-sector data in consultation with national data-protection authorities, starting with the health dataspace planned for the end of 2022.75European Commission, “European Health Data Space,” European Commission (website), accessed April 17, 2021, https://ec.europa.eu/health/ehealth/dataspace_en.

Piloting the new system in the healthcare sector would enable the service to draw lessons from Finland’s permit-granting authority (Findata) before expanding its scope to cover other sectors. The service could be an expansion of the EU’s open data portal (data.europa.eu) and be jointly overseen by the European Data Protection Board, as an independent European body focused on the consistent application of data-protection rules throughout the EU,76“Who We Are,” European Data Protection Board (website), accessed August 2, 2021, https://edpb.europa.eu/about-edpb/about-edpb/who-we-are_en. and the European Data Innovation Board in the proposed Data Governance Act, given its intended focus on data standardization.77European Commission, Proposal for a Regulation on European Data Governance (Data Governance Act). The service’s functions could include:

- Serving as a single window for data-access requests for data sets across multiple jurisdictions and coordinating access to those data sets under a single, or at least compatible, format and license

- Assessing requests for data not available for open access, including sensitive data, in consultation with national data-protection authorities, and making recommendations to relevant public bodies on whether to allow access and under what conditions, such as the use of specific privacy-preserving techniques and security precautions

The service could operate on a cost-recovery basis, passing expenses on to the businesses and research institutes using the service, which would still help reduce costs arising from administrative and legal complexity for businesses seeking to reuse public-sector data. It would also increase the availability of sensitive data for research and development while ensuring adequate protections laid out in relevant national-level data-protection legislation, for example, through privacy-preserving machine learning techniques.

1.2 Harmonize data standards for interoperability

Machine learning and the Internet of Things (IoT) will bring artificial intelligence to our devices, factories, hospitals, and homes, creating efficiencies and driving productivity.78“Generating Value at Scale with Industrial IoT,” McKinsey Digital (website), McKinsey & Company, February 5, 2021, https://www.mckinsey.com/business-functions/mckinsey-digital/our-insights/a-manufacturers-guide-to-generating-value-at-scale-with-industrial-iot. To achieve this, however, the EU needs to enable the sharing of high-quality data between distributed devices and systems.79Gudivada, Apon, and Ding, “Data Quality Considerations for Big Data.” The lack of harmonized data standards across the EU80Daniel Rubinfeld, “Data Portability,” Competition Policy International, November 26, 2020, https://www.competitionpolicyinternational.com/data-portability/. slows the deployment of machine learning compared to China and the United States.81Winston Maxwell et al., A Comparison of IoT Regulatory Uncertainty in the EU, China, and the United States, Hogan Lovells (law firm including Hogan Lovells International LLP, Hogan Lovells US LLP, and affiliated businesses), March 2019.

To address this, the European Commission should facilitate the development of sector-specific data models, application programming interfaces (APIs), and open source software that enable the seamless exchange and translation of differently structured data across systems and organizations.

EU policy context82This chapter focuses on electronic health records (EHR) as a case study on data interoperability. The siloed nature of healthcare IT systems, combined with incompatible national privacy and security rules, provides a good demonstration of the challenges the EU will face more broadly in connecting distributed data systems to leverage the benefits of machine learning.

The European Commission’s efforts to encourage the voluntary adoption of data interoperability standards have made slow progress. For example, decadelong efforts by the commission under the European Patient Smart Open Services (epSOS) project to encourage the implementation of interoperable health data standards have been stymied by reluctant healthcare IT providers and ambivalent national governments.83“EpSOS,” healthcare-in-europe.com (platform website), accessed 2021, https://healthcare-in-europe.com/en/news/epsos.html. More recently, the commission adopted the 2019 Recommendation on a European Electronic Health Record Exchange Format, but as a recommendation it is not legally binding and offers little incentive for compliance.84European Commission, Recommendation on a European Electronic Health Record Exchange Format, C(2019)800 of February 6, 2019, European Commission (website), accessed 2021, https://digital-strategy.ec.europa.eu/en/library/recommendation-european-electronic-health-record-exchange-format.

Looking forward, the EU’s 2020 Data Strategy included the development of rules for common “data spaces” intended to “set up structures that enable organizations to share data.” The European Commission’s 2020 proposal for a Data Governance Act also includes the establishment of a European Data Innovation Board that would focus on data standardization.85European Commission, Proposal for a Regulation on European Data Governance (Data Governance Act). However, it will be very difficult for member states to agree on a standard data format due to constraints imposed by existing IT infrastructure, as well as incompatible and inflexible national privacy and security regulations.86Philipp Grätzel von Grätz, “Transforming Healthcare Systems the European Way,” Healthcare IT News, May 24, 2019, https://www.healthcareitnews.com/news/emea/transforming-healthcare-systems-european-way. To facilitate widespread adoption of machine learning, the commission needs to find a way to enable data interoperability while avoiding costly and complex overhauls of member state regulations and IT systems.

Geopolitical considerations

China uses different digital standards than the EU and is internationalizing those standards through global bodies and bilateral agreements, making its digital exports more interoperable with digital systems in foreign markets. The EU has a strong voice on international data standards through bodies like the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC).87Björn Fägersten and Tim Rühlig, China’s Standard Power and Its Geopolitical Implications for Europe, Swedish Institute of International Affairs, 2019. However, China is rapidly increasing its participation in standard setting and using other strategies to influence standards in export markets.88Fägersten and Rühlig, China’s Standard Power. Björn Fägersten and Tim Rühlig at the Swedish Institute of International Affairs outline how China is rapidly developing industry standards and proposing new work items in ISO and IEC based on nascent technologies to gain a first-mover advantage in technical standardization.89Fägersten and Rühlig, China’s Standard Power. China is also exporting its domestic standards through foreign investments such as Belt and Road Initiative infrastructure projects, including by incorporating clauses on standardization in government-to-government memorandums of understanding.90Fägersten and Rühlig, China’s Standard Power. Given the scale of the EU, standards adopted EU-wide can create market power that sways smaller economies to adopt EU standards.91“The EU Wants to Set the Rules for the World of Technology,” Economist, February 20, 2020, http://www.economist.com/business/2020/02/20/the-eu-wants-to-set-the-rules-for-the-world-of-technology. However, if data standards remain fragmented in Europe, they are less appealing to other countries than Chinese or other technical standards that are already widespread.

National considerations

Capacity and existing regulations constrain some EU member states from implementing common data standards. Each EU member state has its own approach to public data infrastructure, using disparate data formats based on legacy systems, as well as national security standards tied to privacy requirements,92Philipp Grätzel von Grätz, “Transforming Healthcare Systems the European Way.” to which amendments can be controversial.93Grätzel von Grätz, “Transforming Healthcare Systems.” For example, Germany’s mandatory technical specifications for electronic health records (EHR) differ from those encouraged by the European Commission due to security requirements.94Grätzel von Grätz, “Transforming Healthcare Systems.” For other member states, regulatory constraints may be surmountable, but organizational capacity to adopt interoperability standards is lacking. For example, while Poland faces fewer legal barriers,95Milieu Ltd. and Time.lex, Overview of the National Laws on Electronic Health Records in the EU Member States: National Report for Poland, prepared for the Executive Agency for Health and Consumers, March 5, 2014, https://ec.europa.eu/health/sites/health/files/ehealth/docs/laws_poland_en.pdf. its hospitals and clinics have been slower to digitize health data,96Leontina Postelnicu, “EU Report Looks at Uptake of EHRs, EPrescribing, and Online Access to Health Information,” Healthcare IT News, December 14, 2018, https://www.healthcareitnews.com/news/emea/eu-report-looks-uptake-ehrs-eprescribing-and-online-access-health-information. resulting in a three-year delay to a Polish law requiring national adoption of EHR by 2014.97Aleksandra Czerw et al., “Implementation of Electronic Health Records in Polish Outpatient Health Care Clinics: Starting Point, Progress, Problems, and Forecasts,” Annals of Agricultural and Environmental Medicine 23, no. 2 (2016): 6.
In general, studies have found that Central and Eastern European countries continue to face difficulties operationalizing EHR due to fragmented policy frameworks and constraints on financial resources.98Marek Ćwiklicki et al., “Antecedents of Use of E-Health Services in Central Eastern Europe: A Qualitative Comparative Analysis,” BMC Health Services Research 20, no. 1 (March 4, 2020): 171, https://doi.org/10.1186/s12913-020-5034-9.

Business considerations

Reducing the time and costs involved in preparing data for machine learning will make the technology more accessible. Preparing large data sets for machine learning can be difficult, resource intensive, and costly for businesses. Currently, “data wrangling” takes up about 80 percent of the time spent on most artificial intelligence projects, as data from various sources may need to be manually labeled and structured in a coherent way.99Tom Gauld, “For AI, Data Are Harder to Come by Than You Think,” Economist, June 13, 2020, http://www.economist.com/technology-quarterly/2020/06/11/for-ai-data-are-harder-to-come-by-than-you-think. Data also needs semantic interoperability, whereby the labels or categories mean the same thing across databases.100“What Does Interoperability Mean for the Future of Machine Learning?,” Appen (blog on corporate website), September 22, 2020, https://appen.com/blog/what-does-interoperability-mean-for-the-future-of-machine-learning/. Data models such as the Observational Medical Outcomes Partnership (OMOP) Common Data Model offer a solution: they translate differently formatted data into a common structure, provided the terms used are mapped to a shared vocabulary (e.g., recording “heart attack” under the standard term “myocardial infarction”).101Observational Health Data Sciences and Informatics, “OMOP Common Data Model,” accessed April 18, 2021, https://www.ohdsi.org/data-standardization/the-common-data-model/. These kinds of data models make it easier to compile large-scale data sets. In doing so, they make large amounts of data more readily available to machine learning algorithms, which can then produce more accurate outputs, such as a higher likelihood of correctly diagnosing a disease.
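The translation step that a common data model performs can be sketched in a few lines. The example below assumes two hypothetical hospital systems that record the same diagnosis with different field names, date formats, and wording, and maps both into one simplified record loosely modeled on the OMOP `CONDITION_OCCURRENCE` table (`person_id`, `condition_concept_id`, `condition_start_date`). The source field names and concept IDs are illustrative placeholders, not real OMOP vocabulary entries.

```python
from dataclasses import dataclass
from datetime import date, datetime

# Illustrative concept IDs only; real OMOP concept IDs come from the
# OHDSI standardized vocabularies.
CONCEPT_MYOCARDIAL_INFARCTION = 1001
CONCEPT_ATRIAL_FIBRILLATION = 1002

# Hypothetical map from source terms to standard concepts (the shared vocabulary).
SOURCE_TO_CONCEPT = {
    "heart attack": CONCEPT_MYOCARDIAL_INFARCTION,
    "myocardial infarction": CONCEPT_MYOCARDIAL_INFARCTION,
    "atrial fibrillation": CONCEPT_ATRIAL_FIBRILLATION,
}


@dataclass(frozen=True)
class ConditionOccurrence:
    """Simplified record loosely modeled on OMOP's CONDITION_OCCURRENCE table."""
    person_id: int
    condition_concept_id: int
    condition_start_date: date


def to_concept(term: str) -> int:
    """Normalize a free-text diagnosis and look up its standard concept."""
    return SOURCE_TO_CONCEPT[term.strip().lower()]


def from_hospital_a(row: dict) -> ConditionOccurrence:
    # Hypothetical source A: ISO dates, diagnosis stored under "diagnosis".
    return ConditionOccurrence(
        person_id=int(row["pid"]),
        condition_concept_id=to_concept(row["diagnosis"]),
        condition_start_date=date.fromisoformat(row["date"]),
    )


def from_hospital_b(row: dict) -> ConditionOccurrence:
    # Hypothetical source B: day/month/year dates, diagnosis under "dx_text".
    return ConditionOccurrence(
        person_id=int(row["patient"]),
        condition_concept_id=to_concept(row["dx_text"]),
        condition_start_date=datetime.strptime(row["onset"], "%d/%m/%Y").date(),
    )


a_rows = [{"pid": "17", "diagnosis": "heart attack", "date": "2021-03-01"}]
b_rows = [{"patient": "42", "dx_text": "Myocardial Infarction", "onset": "01/03/2021"}]

combined = [from_hospital_a(r) for r in a_rows] + [from_hospital_b(r) for r in b_rows]
mi_cases = [c for c in combined if c.condition_concept_id == CONCEPT_MYOCARDIAL_INFARCTION]
print(len(mi_cases))  # both records now count as the same condition
```

Each source needs its own small adapter, but every downstream analysis or training pipeline only has to understand the common record. A real deployment would also need to link patient identifiers across institutions, which this sketch does not attempt.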

Interoperability, the ability of two systems to communicate effectively, is key for machine learning.102“What Does Interoperability Mean for the Future of Machine Learning?,” Appen blog. For instance, patients are increasingly tracking and generating large volumes of personal health data through wearable sensors and mobile health (mHealth) apps.103Haining Zhu et al., “Sharing Patient-Generated Data in Clinical Practices: An Interview Study,” AMIA Symposium 2016 (2016): 1303–12. One example is KardiaMobile, a personal ECG device that can monitor heart rhythms and instantly detect irregularities such as atrial fibrillation, which can lead to stroke.104Sushravya Raghunath et al., “Deep Neural Networks Can Predict Incident Atrial Fibrillation from the 12-Lead Electrocardiogram and May Help Prevent Associated Strokes,” Preprint, submitted April 27, 2020, https://doi.org/10.1101/2020.04.23.20067967. The Kardia app allows patients to track ECG data over time and share the recordings directly with their physician.105EIT Health and McKinsey & Company, Transforming Healthcare with AI, March 2020, https://thinktank.eithealth.eu/wp-content/uploads/2020/12/EIT-Health-and-McKinsey-%E2%80%93-Transforming-Healthcare-with-AI.pdf. However, a study of clinicians using mHealth apps with their patients found that they did not have the means to transfer this data to the patient’s electronic health record.106Zhu et al., “Sharing Patient-Generated Data in Clinical Practices.” This means a patient’s data from a device cannot be compiled with the patient’s other health data for further research and development purposes, which weakens the potential for machine learning to improve patient health outcomes.

Open source data-management software, characterized by open licenses, a commitment to standards, and collaboration among developers, could offer a pathway to help institutions keep pace with technological advancements and enhance interoperability.107Maria Sukhova, “Pros and Cons of Open Source Software in Healthcare,” Blog, Auriga (outsourcing software company), April 19, 2017, accessed August 6, 2021, https://auriga.com/blog/2017/pros-and-cons-of-open-source-software-in-healthcare/. Compared to proprietary software, the open architecture of open source software can more easily allow for the integration of various products, like medical devices and wearables, into a single system, and commercial versions can adapt the software to support the needs of specific users.108Sukhova, “Pros and Cons of Open Source Software in Healthcare.” Open source software can be vulnerable to hacking and data breaches when not maintained by an active community;109“Open Source Does Not Equal Secure: Schneier on Security,” Blog, accessed August 13, 2021, https://www.schneier.com/blog/archives/2020/12/open-source-does-not-equal-secure.html. however, open source projects with an active user base are generally more likely than most proprietary (i.e., closed source) software to discover bugs and security vulnerabilities and to fix them quickly.110Ron Rymon, “Why Open Source Software Is More Secure Than Commercial Software,” WhiteSource (blog), April 7, 2021, accessed August 13, 2021, https://www.whitesourcesoftware.com/resources/blog/3-reasons-why-open-source-is-safer-than-commercial-software/.

Recommendations for the European Commission

A. Mandate and fund the European Data Innovation Board (in the proposed Data Governance Act) to establish an EU technical data standards registry to facilitate the sharing of various technical data standards between national standards organizations, industry bodies, associations, and businesses.

The purpose of the registry would be to increase the transparency of the various data standards in use across the EU at the European, national, sectoral, association, and enterprise levels. This would help technical experts identify opportunities and develop ways to enhance interoperability. The registry could draw on the example of the registry maintained by the Standardization Administration of China, which includes government, industry regulator, local, association, and enterprise-level standards.111The US-China Business Council, Standards Setting in China: Challenges and Best Practices, 2020, 22. Another example is the comprehensive list of standards developed by the Canadian Data Governance Standardization Collaborative.112Standards Council of Canada, Canadian Data Governance Standardization Roadmap, Annex B, 75–155, 2021, https://www.scc.ca/en/system/files/publications/SCC_Data_Gov_Roadmap_EN.pdf.
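At its core, such a registry is a catalog of standards tagged by level and sector that experts can query to see which standards coexist in a given field. The following is a minimal in-memory sketch under that assumption. The class names, fields, and sample entries are hypothetical and do not describe any existing registry; a real service would add versioning, links to specifications, and governance metadata.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Level(Enum):
    """Levels at which a data standard may be set, broadest first."""
    EUROPEAN = 1
    NATIONAL = 2
    SECTORAL = 3
    ASSOCIATION = 4
    ENTERPRISE = 5


@dataclass(frozen=True)
class StandardEntry:
    name: str       # hypothetical standard name
    level: Level
    sector: str     # e.g., "health", "mobility"
    publisher: str  # body that maintains the standard


class StandardsRegistry:
    """Hypothetical catalog showing which standards coexist in each sector."""

    def __init__(self) -> None:
        self._entries: List[StandardEntry] = []

    def register(self, entry: StandardEntry) -> None:
        # Reject exact duplicates so each standard appears once.
        if entry in self._entries:
            raise ValueError(f"already registered: {entry.name}")
        self._entries.append(entry)

    def find(self, sector: str, level: Optional[Level] = None) -> List[StandardEntry]:
        """Return a sector's standards, optionally filtered by level, broadest level first."""
        matches = [e for e in self._entries
                   if e.sector == sector and (level is None or e.level == level)]
        return sorted(matches, key=lambda e: e.level.value)


registry = StandardsRegistry()
registry.register(StandardEntry("EHR specification B", Level.NATIONAL, "health", "Body Y"))
registry.register(StandardEntry("EHR exchange format A", Level.EUROPEAN, "health", "Body X"))
registry.register(StandardEntry("Charging data format C", Level.ASSOCIATION, "mobility", "Body Z"))

for entry in registry.find("health"):
    print(entry.level.name, entry.name)
```

Listing a national specification next to a European one for the same sector is exactly the kind of overlap the registry is meant to surface for the expert committees.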

B. Mandate and fund the European Data Innovation Board (proposed under the Data Governance Act) to establish sector-specific expert committees to recommend data interoperability standards, drawing on technical experts from standardization bodies, industry, academia, and consumer groups.

The expert committees could be established alongside each “common data space” and should specifically focus on:

i. Standard data models that enable the translation of differently structured data across systems and organizations. An example of such a data model is the OMOP model for health data mentioned above.

ii. Standard application programming interfaces (APIs) that enable apps and databases to seamlessly exchange data. For example, a 2020 US federal rule requires certain health insurers, such as Medicare Advantage organizations, to make patient health information available through APIs based on the Fast Healthcare Interoperability Resources (FHIR) standard.113United States Department of Health and Human Services, Centers for Medicare & Medicaid Services, “Interoperability and Patient Access Final Rule,” 85 Fed. Reg. 25510 (May 1, 2020), https://www.federalregister.gov/documents/2020/05/01/2020-05050/medicare-and-medicaid-programs-patient-protection-and-affordable-care-act-interoperability-and.

iii. Free and open-source data-management software that embeds sector-specific standards, encourages a community of practice, and allows for commercial adaptation to serve the needs of specific users.
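To illustrate what a standard data model (item i) does in practice, the following is a minimal sketch that translates patient records from two hypothetical hospital systems, which store the same information under different field names and date formats, into one shared schema. The systems, field names, and the `CommonPerson` schema are all hypothetical and deliberately simplified; they are only loosely inspired by the idea of a common data model such as OMOP and do not reproduce the actual OMOP specification.

```python
from dataclasses import dataclass
from datetime import date, datetime


# Hypothetical common model: a simplified "person" record that both source
# systems are translated into. This is NOT the actual OMOP CDM schema.
@dataclass(frozen=True)
class CommonPerson:
    person_id: str
    birth_date: date
    sex: str  # normalized to "F", "M", or "U" (unknown)


# Hypothetical source system A: German field names, DD.MM.YYYY dates,
# sex coded as "w" (weiblich) / "m" (männlich).
def from_system_a(record: dict) -> CommonPerson:
    return CommonPerson(
        person_id=f"A-{record['patient_nr']}",
        birth_date=datetime.strptime(record["geburtsdatum"], "%d.%m.%Y").date(),
        sex={"w": "F", "m": "M"}.get(record["geschlecht"], "U"),
    )


# Hypothetical source system B: English field names, ISO 8601 dates,
# sex written out in free text with inconsistent capitalization.
def from_system_b(record: dict) -> CommonPerson:
    return CommonPerson(
        person_id=f"B-{record['id']}",
        birth_date=date.fromisoformat(record["dob"]),
        sex={"female": "F", "male": "M"}.get(record["gender"].lower(), "U"),
    )


if __name__ == "__main__":
    a = from_system_a({"patient_nr": 17, "geburtsdatum": "03.04.1985", "geschlecht": "w"})
    b = from_system_b({"id": "x9", "dob": "1985-04-03", "gender": "Female"})
    print(a)
    print(b)
    # Once both records are in the common model, they can be compared directly.
    print(a.birth_date == b.birth_date and a.sex == b.sex)
```

In this sketch each source system needs only one mapping into the shared model rather than a separate converter for every other system it exchanges data with, which is one reason common data models can lower the cost of interoperability without replacing the underlying IT systems.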

The widespread adoption of common data models would enable EU member states to implement data interoperability standards without overhauling costly IT infrastructure or amending sensitive privacy and security regulations. Combined with common APIs and free and open-source data-management software, this would reduce costs and make data more accessible to businesses seeking to adopt machine learning. The broad adoption of these standards in the EU would also increase the chances of European data interoperability standards being adopted internationally.

Part two: Balance economic autonomy and openness

2.1 Diversify data storage and processing

Cloud service providers are increasingly becoming geopolitical actors whose influence shapes economic growth, international security competition, and the global balance of power.114Trey Herr, Four Myths about the Cloud: The Geopolitics of Cloud Computing, Atlantic Council, August 31, 2020, https://www.atlanticcouncil.org/in-depth-research-reports/report/four-myths-about-the-cloud:-the-geopolitics-of-cloud-computing/. To ensure redundancy in essential data services and reduce vulnerability to potential coercion, the European Union needs to reduce its dependence on a few non-European cloud service providers. In doing so, however, the EU needs to maintain competitive cloud offerings for European businesses and avoid succumbing to calls for protectionist measures that could harm businesses and negatively affect some EU member states.

The European Commission should consider increasing investment in the existing federated cloud project to diversify the supply of data infrastructure services. It also needs to coordinate proposed and existing initiatives in this area, such as Gaia-X, and aim to minimize the compliance costs of new regulatory measures that could disadvantage European cloud providers. Notably, the EU policy debate places a strong focus on protecting data from foreign jurisdictions and weaker data-protection regimes, often in the context of data localization efforts that require data to be hosted in the jurisdiction in which it was collected.115Herr, Four Myths about the Cloud. This chapter should therefore be read in conjunction with chapter 2.2 on simplifying data transfers and chapter 3.1 on enhancing privacy and trust.

EU policy context

The European Commission, supported by most EU member states, aims to reduce dependencies on external cloud providers by setting new regulations and funding “federated cloud infrastructure.”116Burwell and Propp, The European Union and the Search for Digital Sovereignty. Building on the data strategy proposed by the commission, twenty-five EU member states signed a joint declaration in October 2020 pledging to invest up to €10 billion in the cloud sector and to establish the “European Alliance on Industrial Data, Edge and Cloud.”117“Commission Welcomes Member States’ Declaration on EU Cloud Federation,” Press Release, European Commission (website), October 15, 2020, https://digital-strategy.ec.europa.eu/en/news/commission-welcomes-member-states-declaration-eu-cloud-federation; and Melissa Heikkila and Janosch Delcker, “EU Shoots for €10B ‘Industrial Cloud’ to Rival US,” Politico, October 15, 2020, https://www.politico.eu/article/eu-pledges-e10-billion-to-power-up-industrial-cloud-sector/. To shape these efforts, twenty-seven CEOs of European companies shared priority areas for cloud and edge ecosystem development and investment with the European Commissioner for the Internal Market, Thierry Breton, in May 2021.118European Commission, “Today the Commission Receives Industry Technology Roadmap on Cloud and Edge,” Report Announcement, Shaping Europe’s Digital Future (webpages), May 2021, https://digital-strategy.ec.europa.eu/en/library/today-commission-receives-industry-technology-roadmap-cloud-and-edge.

In July 2021, the commission officially launched the European Alliance on Industrial Data, Edge and Cloud, which aims to combine resources from existing EU programs, industry, and member states to create a European Federated Cloud in 2021.119Heikkila and Delcker, “EU Shoots for €10B ‘Industrial Cloud’ to Rival US.” The alliance plans to write a Cloud rulebook that would provide a single European framework for cloud use and interoperability requirements, and it has floated the idea of a European marketplace for cloud offerings: a single portal to cloud services that meet key EU standards and rules.120“Cloud Computing,” Shaping Europe’s Digital Future (webpages), European Commission, March 10, 2021, https://digital-strategy.ec.europa.eu/en/policies/cloud-computing. There is also a strong focus on so-called edge computing, which, instead of relying on centralized infrastructure, allows data to be shared and analyzed in real time directly between devices. In addition, the Digital Markets Act, proposed by the commission in 2020, aims to mitigate the risk of dependence on cloud providers through stricter portability and data access obligations.121European Commission, Directorate-General for Communications Networks, Content, and Technology (DG CNECT), Proposal for a Regulation of the European Parliament and of the Council on Contestable and Fair Markets in the Digital Sector (Digital Markets Act), 2020/0374/COD (2020), https://eur-lex.europa.eu/legal-content/en/TXT/?qid=1608116887159&uri=COM%3A2020%3A842%3AFIN.

It is not yet clear whether the European Alliance on Industrial Data, Edge and Cloud will be able to coordinate the efforts needed to compete with the leading non-European cloud providers. There is also a question of how exactly the alliance relates to other initiatives, such as the Franco-German Gaia-X cloud network. Gaia-X aims to serve as a platform and Europe-wide network joining up cloud service providers, high-performance computing, and edge system providers from a number of EU-based and international organizations.122“GAIA-X: A Federated Data Infrastructure for Europe,” accessed August 10, 2021, https://www.data-infrastructure.eu/GAIAX/Navigation/EN/Home/home.html; and Janosch Delcker and Melissa Heikkila, “Germany, France Launch Gaia-X Platform in Bid for ‘Tech Sovereignty,’ ” Politico, June 4, 2020, https://www.politico.eu/article/germany-france-gaia-x-cloud-platform-eu-tech-sovereignty/. It is crucial that these policy and funding initiatives are coherent and that potential overlaps are minimized, so as to enable data flows while maintaining adequate data protections.123Andrea Renda et al., The Digital Transition: Towards a Resilient and Sustainable Post-Pandemic Recovery, Centre for European Policy Studies (CEPS), 2021, https://www.ceps.eu/wp-content/uploads/2021/07/The-Digital-Transition_CEPS-TF-WGR.pdf.

Geopolitical considerations

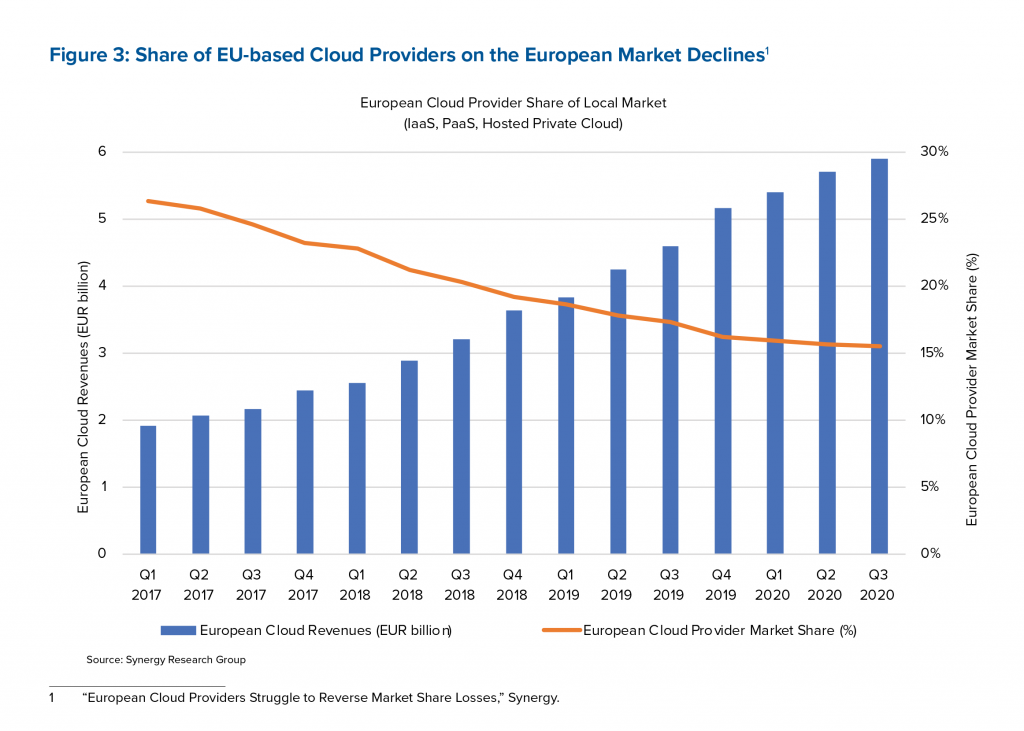

The concentration of the EU’s cloud services among a few US suppliers makes it vulnerable to external supply disruptions. According to Atlantic Council research, some 92 percent of the Western world’s data are stored in the United States.124Burwell and Propp, The European Union and the Search for Digital Sovereignty. Just three US providers (Amazon, Microsoft, and Google) account for 66 percent of the European cloud market.125“European Cloud Providers Struggle to Reverse Market Share Losses,” Synergy Research Group (website), January 14, 2021, https://www.srgresearch.com/articles/european-cloud-providers-struggle-reverse-market-share-losses. EU-based cloud providers have seen their share of the European market decline from 26 percent in 2017 to 16 percent in 2020 (see figure 3), and the largest EU-based provider, Deutsche Telekom, accounts for only 2 percent of the market.126“European Cloud Providers Struggle,” Synergy.

At the same time, cloud uptake among EU businesses has been rising, which makes the issue all the more urgent.127“Cloud Computing: Statistics on the Use by Enterprises,” Eurostat Statistics Explained (website), January 2021, https://ec.europa.eu/eurostat/statistics-explained/index.php/Cloud_computing_-_statistics_on_the_use_by_enterprises#Use_of_cloud_computing:_highlights. As cloud services become part of critical infrastructure, their vulnerability to disruption becomes a growing concern.128Ariel E. Levite and Gaurav Kalwani, Cloud Governance Challenges: A Survey of Policy and Regulatory Issues, Carnegie Endowment for International Peace, November 9, 2020, https://carnegieendowment.org/2020/11/09/cloud-governance-challenges-survey-of-policy-and-regulatory-issues-pub-83124. For example, a single cloud provider’s supply chain decisions and internal security policies can affect millions of customers.129Herr, Four Myths about the Cloud. As the United States has previously threatened to cut European companies off from critical US services for engaging in business activities permitted by European countries,130Geranmayeh and Rapnouil, “Meeting the Challenge of Secondary Sanctions.” the growing dependence on a few US providers is increasingly seen as a strategic weakness that could undermine European foreign policies and national interests.131“US ‘Cloud’ Supremacy Has Europe Worried about Data,” Euractiv (media network), August 4, 2020, https://www.euractiv.com/section/digital/news/us-cloud-supremacy-has-europe-worried-about-data/.

Europeans are also concerned about risks to data protection, including the potential for foreign authorities to access their data. The emergence of foreign entities holding large troves of data on individuals is a growing concern for Europeans, who were alarmed by Edward Snowden’s disclosure of electronic mass surveillance by the US government.132“Mass Surveillance: EU Citizens’ Rights Still in Danger, Says Parliament,” Press Release, European Parliament, October 29, 2015, https://www.europarl.europa.eu/news/en/press-room/20151022IPR98818/mass-surveillance-eu-citizens-rights-still-in-danger-says-parliament. These concerns have intensified since the adoption of the US Clarifying Lawful Overseas Use of Data Act (CLOUD Act), which can require US technology companies to hand over data to US authorities even if it is stored outside the United States.133Laurens Cerulus, “Europe’s Litmus Test over Cloud Computing Push,” Politico, July 28, 2020, https://www.politico.eu/article/shades-of-sovereignty-dent-european-cloud-dreams/. For example, in April 2019, Germany’s top data protection office told Politico that Amazon’s cloud hosting services were not suitable for storing German police data because of the risk that US authorities would access the data.134Janosch Delcker, “German Watchdog Says Amazon Cloud Vulnerable to US Snooping,” Politico, April 4, 2019, https://www.politico.eu/article/german-privacy-watchdog-says-amazon-cloud-vulnerable-to-us-snooping/. In May 2021, the EU privacy watchdog, the European Data Protection Supervisor, launched an investigation into whether EU bodies’ use of cloud computing services provided by Amazon and Microsoft complies with data protection rules, owing to concerns about the transfer of personal data to the United States.135Foo Yun Chee, “EU Bodies’ Use of Amazon, Microsoft Cloud Services Faces Privacy Probes,” Reuters, May 27, 2021, https://www.reuters.com/article/ctech-us-eu-dataprotection-amazon-com-mi-idCAKCN2D80TQ-OCATC. In addition, the EU is concerned about cloud service providers being subject to legislation that would contradict the EU’s General Data Protection Regulation and therefore weaken existing data protection enforcement, particularly given concerns about several Chinese cybersecurity and national intelligence laws (see chapter 2.2).136European Commission, European Strategy for Data.

However, the pursuit of strategic autonomy in cloud infrastructure, and the related data localization requirements, have important limitations. Some EU member states, such as France and Germany, have already introduced data localization requirements that restrict the storage or transmission of certain data outside their borders.137Herr, Four Myths about the Cloud. Yet, as Atlantic Council research by Trey Herr indicates, data localization policies, especially when they do not adequately capture the different types of data managed by cloud operators, can undermine the public cloud’s economic and technical underpinnings.138Herr, Four Myths about the Cloud. The public cloud, in which a service provider makes its resources available to the public so that organizations and individuals can leverage large volumes of data storage and cutting-edge computing services at lower cost, is made possible by large numbers of global users accessing international networks of storage and computing resources.139Herr, Four Myths about the Cloud. Therefore, when data cannot cross borders, much of the flexibility at the core of the public cloud model can be lost.140Herr, Four Myths about the Cloud. Data localization requirements can force cloud service providers to build infrastructure to serve specific markets, potentially leading to costly changes in core infrastructure design.141Herr, Four Myths about the Cloud. For example, the push for data localization led Microsoft to provide a different model of its Azure cloud services in Germany.142Tim Maurer and Garrett Hinck, Cloud Security: A Primer for Policymakers, Carnegie Endowment for International Peace, August 31, 2020, https://carnegieendowment.org/2020/08/31/cloud-security-primer-for-policymakers-pub-82597. In 2015, Microsoft announced a cloud offering that would be run through a data trustee controlling both the data stored in Germany and Microsoft’s access to that data.143Maurer and Hinck, Cloud Security. According to reports, however, this offering proved unsustainable because of higher costs and its separation from international business, which highlighted the challenges posed by data localization rules.144Maurer and Hinck, Cloud Security. See chapter 2.2 for more analysis on data transfers and the impact of data localization rules.

National considerations