Building a collaborative ecosystem for AI in healthcare in Low and Middle Income Economies

This publication is part of the Smart Partnerships for Global Challenges series, focused on data and AI. The Atlantic Council GeoTech Center produces events, pioneers efforts, and promotes educational activities on the Future of Work, Data, Trust, Space, and Health to inform leaders across sectors. We do this by:

- Identifying public and private sector choices affecting the use of new technologies and data that explicitly benefit people, prosperity, and peace.

- Recommending positive paths forward to help markets and societies adapt in light of technology- and data-induced changes.

- Determining priorities for future investment and cooperation between public and private sector entities seeking to develop new technologies and data initiatives specifically for global benefit.

@acgeotech: Championing positive paths forward that nations, economies, and societies can pursue to ensure new technologies and data empower people, prosperity, and peace.

The authors are a project team with the International Innovation Corps, University of Chicago Trust, supported by the Rockefeller Foundation in developing digital health and AI strategies for public health systems in India.

Introduction

Over the past two decades, Artificial Intelligence (AI) has emerged as one of the most fundamental and widely adopted technologies of the Industrial Revolution 4.0. AI is poised to generate transformative and disruptive advances in healthcare through its unparalleled ability to translate large amounts of data into actionable insights for improving detection, diagnosis, and treatment of diseases, enhancing surveillance and accelerating public health responses, and now, for rapid drug discovery as well as interpretation of medical scans.

Given its range of applications, AI will undoubtedly play a central role in most nations’ journeys towards Universal Health Coverage (UHC) and the Sustainable Development Goals (SDGs). However, the development of AI for healthcare has been largely disparate in low- and middle-income countries (LMICs) compared to high-income countries (HICs) even as their public health conditions are converging. As incomes have grown across the developing world, health outcomes and life expectancies in LMICs have markedly improved, growing closer to those in HICs. This development has ignited a growing demand for services, rising costs of delivery and innovation, and challenges in building the appropriate workforce to deliver care.

At the same time, epidemiological transition and demographic changes have shifted the disease burden in LMICs from communicable to non-communicable diseases (NCDs), such as cardiovascular disease, cancer, and diabetes, much like in HICs. However, the NCD burden is much more severe in LMICs: it is estimated that of the approximately 15 million people between 30 and 69 years of age who die annually from NCDs, 85% are in LMICs.

While there is convergence in the demographics and incidence of health conditions between LMICs and HICs, there remains a gulf in the quality and availability of healthcare services, linked to starkly disparate health outcomes. WHO reports that over 40% of all countries have fewer than 10 medical doctors per 10,000 people and over 55% have fewer than 40 nursing and midwifery personnel per 10,000 people. Only one-third to half of the world’s population was able to obtain essential health services as of 2017. It is no surprise, then, that key health indicators diverge sharply: maternal mortality is as high as 495 per 100,000 live births in low-income nations (LICs), as opposed to 17 in HICs. The same is true of under-five mortality (69 per 1,000 live births in LICs versus 5 in HICs) and the incidence of communicable diseases like malaria (189.3 per 1,000 population at risk in LICs versus nil in HICs).

In order to improve service delivery and health outcomes in LMICs, the international community has launched a concerted effort to minimize gaps in health system preparedness and resources, including the World Health Assembly Resolution in its 71st session calling for a global commitment on digital health. As part of this effort, a growing number of digital and AI-enabled initiatives have been launched. Researchers assert that AI-enabled healthcare can improve access to services (especially in remote areas), enhance the safety and quality of care, enable cost savings and efficiencies, and serve as an educational tool.

In fact, LMICs’ strengths, including rapid smartphone penetration, flexible health systems, and the established success of some lower-tech applications, point to fertile ground for AI experimentation. While digital health is taking off in these economies, propelling this movement further into emerging-technology transformations of health systems might not only induce a new push for digital systems readiness, but might also be necessitated by LMICs’ need for quicker transformational impact than other economies.

However, there are multiple economic, institutional, and regulatory challenges in instituting AI solutions in LMICs. While AI solutions are being piloted in LMICs for diagnosis, clinical decision support, and predicting spread of certain diseases, there are several issues in moving from pilot to scale. As technology evolves and penetration improves, AI experimentation will inevitably increase. For AI-enabled technologies to truly transform healthcare and improve national and global health outcomes, it is imperative that cross-cutting challenges like data availability, business model sustainability, and lack of enabling infrastructure and other building blocks are addressed. The success of AI enabled solutions will require national and international strategy as well as vision-setting for a less-siloed health ecosystem and investment in building foundations of the data-driven industry.

Key Elements of an AI Enabled Health System

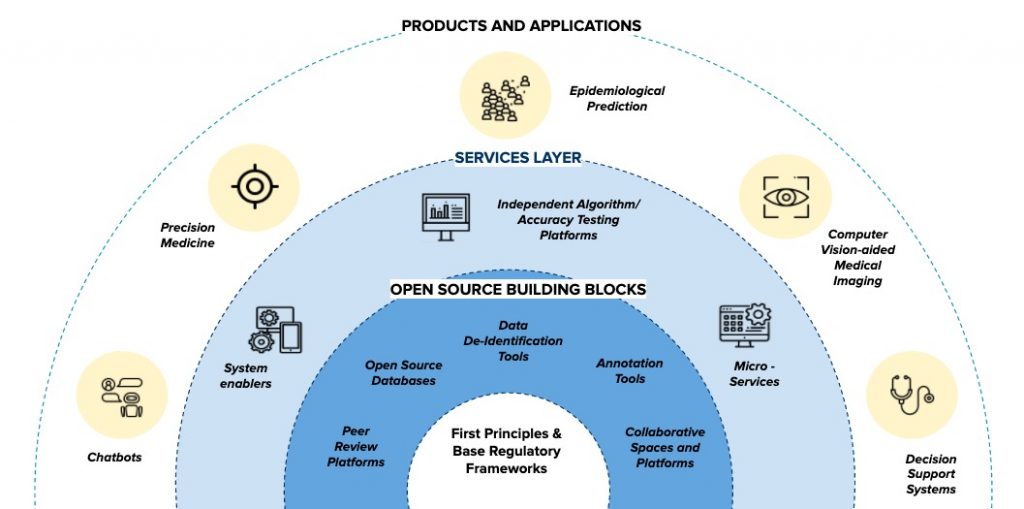

Figure 1: Representation of building blocks of an AI in healthcare ecosystem

I. Strong Digital Health Foundation

Although AI interventions have definite promise, regulatory and practical challenges prevent them from being deployed and scaled within LMICs. Digital health infrastructure forms the base on which digital health interventions, such as the ones promised by AI, are built. The scale-up of this infrastructure has been impeded in LMICs by a multitude of factors, including lack of technical consensus, limited human resource and health systems capacity, and inadequate research on scaling digital health in low-resource settings. The global community, however, has committed itself to resolving these challenges to ensure that populations in LMICs can benefit from AI and digital health. In 2019, the UN Secretary General’s High-Level Panel on Digital Cooperation recommended that “by 2030, every adult should have affordable access to digital networks, as well as digitally enabled financial and health services, as means to make a substantial contribution to achieving the SDGs”.

Understood holistically, digital health refers to the use of any digital technology in healthcare. While it was once limited to eHealth (using ICT for health), the field has widened to include genomics, big data, AI, and more. Earlier, countries were encouraged by the WHO to develop a strategic plan for implementing eHealth services, which was limited to ensuring availability of health information to the right person at the right time in a secure electronic form for efficient health delivery. eHealth was limited to ensuring information exchange through Electronic Health Records (EHR), patient registries and other shared knowledge resources, and basic tracking systems.

Ensuring robust eHealth systems meant that nations had to commit themselves to solving many complex challenges through the development of policies, legislation, and standards. These issues included interoperability and standardization, lack of physical infrastructure (e.g. networks), integration with core services, and inadequate availability of eHealth knowledge and skills in the existing workforce. The WHO and ITU released a National eHealth Strategy Toolkit in 2012 to guide all nations through their journey of establishing a robust eHealth ecosystem, right from experimentation and early adoption to its mainstreaming.

Since then, progress in the healthcare industry has lagged. While sectors such as banking and finance have been able to transform and cater to the specific needs of the large unbanked populations in LMICs through increased digitization and targeted policies, the same cannot be said for healthcare. Only approximately half of WHO member nations had an eHealth strategy as per the third global survey on eHealth (2016). Moreover, just 47% had a national EHR system and 54% reported legislation that protects the privacy of electronically held patient data.

Robust privacy legislation, interoperable Electronic Health Records, and dynamic registries are all core elements of a digitized health system on top of which effective AI solutions can be built. Thus, a well-functioning eHealth system and a well-defined eHealth strategy lay the first foundations of an AI-enabled ecosystem.

II. Modern and Integrated Health Programs

It is pertinent that the innovation process continues in a manner that is not siloed from public health delivery systems. Disintegrated innovation results in sub-optimal benefits for the health system at large. This is all the more relevant in LMICs where primary and public healthcare continue to be the most substantial element in the achievement of health objectives. The integration of AI innovations and existing health programs requires a double-sided effort, viz. ensuring health programs have the capacity to support AI development and ensuring the AI developed fits the purpose of those programs.

The former prong necessitates digital health readiness in health systems, mostly in terms of building reliable ICT infrastructure to the last mile and building capacity for accurate digital data collection. The challenge of moving from paper-based records to digital data collection is one that most developing economies face, mostly because of inadequate migration to digitized tools by front-line health staff. Thus, a user-centric approach is essential to ensure that data collection tools in the field can in practice integrate easily into the established workflows of staff in a time- and cost-efficient way. Local ownership and co-creation of these tools can also improve adoption rates and the ease of maintaining these systems locally.

Data collection is also closely tied to the development of a digital health ecosystem, and includes two simultaneous measures. First, it is essential to reassess the way existing data collection happens, check for redundancies and create a minimum viable set of data to be collected. This means that data collection for different programs might have to be harmonized to ensure that progressive data collection is digitized and of acceptable quality. At the same time, efforts may need to be made to digitize existing data, in information systems or paper records, and ensure machine readability for it to be usable for AI.

The latter prong necessitates that algorithms are built based on the health systems they will be integrated into. Specifically, algorithms must account for technological as well as programmatic feasibility in order to be fit for purpose. To inform development, solution makers can use the WHO Health Technology Assessment (HTA), a multidisciplinary process that summarizes information about the medical, social, economic, and ethical issues related to the use of a health technology in a systematic, transparent, unbiased, and robust manner, as a means to establish the appropriateness of an intervention. Further, programmatic design may need marked changes to enable the collection and use of data, particularly with respect to training existing healthcare workers and establishing structures that enable proper administration of collected data, with the goal of creating a common data parlance in the program.

Health system planners may be better served devoting their efforts to small incremental changes in AI integration into health programs, as large scale disruptions might hinder health service delivery and create resistance to change. Simpler process optimization tools that do not provide relief or diagnosis but help human resources perform more effectively can be explored. This creates a culture of data optimization and trusting technology. For example, process and population-level AI tools offer immense benefits to national health programs and are relatively easy to integrate as they don’t require the robust regulatory standards and certifications necessary for supporting AI in clinical settings. In any case, commensurate education and capacity building of health workers, as well as establishment of logistical and procurement channels for appropriate hardware for using these tools, is critical.

III. Base Regulatory Frameworks

A holistic AI regulatory system ideally covers the entire product life cycle of the AI model from data collection to the model’s certification, deployment and vigilance. However, this is an evolving process commensurate with the development and maturity of the AI in the healthcare industry. For most LMICs at the cusp of digital health and AI transformations, it is the government and civil society leading the charge of AI development. Thus, their natural focus should be public health applications rather than advanced clinical applications like computer vision for radiology, given the critical nature of improving and expanding primary healthcare in LMICs. Regulatory certifications and evaluations of efficacy and safety are usually necessitated by a sprawling private industry developing clinical AI.

Therefore, in the first phase of the AI ecosystem development, the government’s focus should be on regulating the most foundational block of any AI model: data. Ethical use of data, especially in a way that can preserve privacy, is a significant concern amongst many LMIC governments and stakeholders working in AI and digital health. However, only 132 nations have legislation in place to secure data protection and privacy. In Asia and Africa, the adoption rate is only 55%.

Enabling an ecosystem for AI in healthcare first and foremost requires that each jurisdiction has at least a minimum viable set of principles and standards for data governance, which are similar to those required for establishing an interoperable digital health ecosystem. While there can be a range of comprehensive regulatory frameworks for data governance in healthcare, the leanest way to ensure a well-functioning AI ecosystem is by focusing on two elements in the early stages: (i) data collection and management (for ensuring quality, interoperable, and machine-readable data); and (ii) data sharing (in a way that preserves privacy).

Thus, regulations should both mandate and incentivize the recording of all health data in adherence to a minimum viable set of standards, based on national/international consensus. This includes standardizing data structures (consistency in how data like health event summaries, prescriptions, care-plans etc. are stored) as well as common medical terminologies (using common language to describe disease, symptoms, diagnosis etc. like WHO-ICD10, SNOMED CT, DICOM etc.). A common set of standards will ensure semantic and technical interoperability between all players. FAIR Guiding Principles prescribe good data management practices for storing data in a manner that is findable, accessible, interoperable, and reusable, while also emphasizing machine-actionability.
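
A minimal sketch of what checking a record against such a minimum viable standard could look like is given below. The field names (`patient_id`, `encounter_date`, `diagnosis_code`) and the function `validate_record` are hypothetical, chosen only for illustration, and the diagnosis check is a simplified test of the general shape of an ICD-10 code rather than a lookup against the actual classification.

```python
import re
from datetime import date

# Hypothetical minimum viable data set; these field names are assumptions
# made for this sketch, not part of any published standard.
REQUIRED_FIELDS = ("patient_id", "encounter_date", "diagnosis_code")

# Simplified shape of an ICD-10 code, e.g. "E11" or "E11.9".
# It checks the format only, not whether the code exists in ICD-10.
ICD10_SHAPE = re.compile(r"^[A-Z][0-9]{2}(\.[0-9A-Z]{1,4})?$")


def validate_record(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            problems.append(f"missing field: {field}")

    code = record.get("diagnosis_code")
    if code and not ICD10_SHAPE.match(code):
        problems.append(f"diagnosis_code is not ICD-10 shaped: {code}")

    encounter = record.get("encounter_date")
    if encounter:
        try:
            # Dates are expected as ISO 8601 calendar dates (YYYY-MM-DD).
            date.fromisoformat(encounter)
        except ValueError:
            problems.append(f"encounter_date is not an ISO date: {encounter}")
    return problems


if __name__ == "__main__":
    ok = {"patient_id": "P-001", "encounter_date": "2020-01-15",
          "diagnosis_code": "E11.9"}
    bad = {"patient_id": "P-002", "encounter_date": "15/01/2020",
           "diagnosis_code": "diabetes"}
    print(validate_record(ok))
    print(validate_record(bad))
```

A check of this kind can run at the point of data entry, so that free-text diagnoses or locally formatted dates are flagged before they reach a shared repository.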

In the absence of comprehensive privacy regulations, the regulator should define standards and norms that can ensure privacy as the core of all operations that use sensitive and personal health data (referred to as “privacy by design” in Western legislation like the European Union’s General Data Protection Regulation). This method can include mandating security and access control standards like ISO 22600:2014. However, it also requires a policy consensus on key questions of how to ensure the consent of the patient and define ownership of data, especially for the purpose of sharing it with AI developers for processing. This is where policies and standards around de-identification and anonymization can balance patients’ privacy with the need to use their data for the advancement of health innovation. While there are experimental and novel models for data sharing, collaboratives, and authorities, the first and quickest step should be to define a standard model agreement for data sharing between all stakeholders that prescribes privacy and security measures to be undertaken by both the data fiduciary and the party requesting data access.

IV. Open Source Ecosystem

Technological infrastructure stacks have been widely accepted as a catalytic means of propelling an innovation and application development ecosystem in different sectors, most prominently financial services (for example, the IndiaStack). The stack approach utilizes horizontal solutions that address cross-cutting, fundamental needs of a particular industry. In AI, specifically for healthcare, this means creating open digital commons like open datasets, libraries of software code, and common tools that enable applications to be cost-competitive, reach scale, and be largely interoperable.

While interoperability standards for how data is stored, managed, and shared can be enshrined, the challenge remains in their widespread adoption due to lack of incentives. Adhering to these standards can also prove costly because it involves redesigning many systems. This hurdle is where open source tools and libraries can be useful by incorporating these base frameworks and standards themselves and allowing innovators to build upon them, ensuring rapid diffusion and adoption at all levels. Since open source software can be easily downloaded and tested, it can encourage innovators to work within the confines of the regulatory standard without having to spend substantial resources in ensuring compliance, which can in turn deflate the prices to the end users. It is a low-touch regulatory approach that piggybacks on open tools.

Moreover, common tools can efficiently propel an innovation ecosystem of affordable, convenient, plug-and-play applications built on top of open source frameworks. This ‘strategy of openness’ led to the first open source program in 1998, Netscape Navigator. The creators drew inspiration from academia, realizing that if researchers kept their research methods confidential, there would be snail-paced innovation and stunted scientific progress. In AI, open source strategies foster a ‘maker’ culture. By providing public access to frameworks, data sets, workflows, models, and software (for example, for training), this culture gives companies greater freedom to innovate in a more cost-efficient way. This model has immense potential for LMICs, where commons platforms can kickstart peer and collaborative production distributed amongst the innovator community and so create the common pools of knowledge and resources essential for the AI ecosystem.

Critical open source tools for enabling AI innovations include:

a) Data De-identification

Personal health information is highly sensitive, often requiring the highest levels of security and access control. Ensuring ethical access to health information is key to the development and viability of AI solutions in healthcare. This is particularly important for countries with high population diversity to enable representative and thereby accurate models.

While the norms for data sharing are fairly well defined in developed economies such as the United States and the European Union, many LMICs are yet to promulgate such legislation. The US Health Insurance Portability and Accountability Act (HIPAA) recognizes the need to share data to enable innovation in digital health, and therefore encourages de-identified data sharing. Indicative operational guidelines for de-identification of data in healthcare settings have also been issued by the Information and Privacy Commissioner of Ontario as well as by non-profit organizations such as Integrating the Healthcare Enterprise, based in Illinois.

While regional contexts may require adaptation, the principle of de-identification or anonymization as a balancing factor between the need to innovate and preservation of privacy is well-settled. However, this might also add a significant cost burden to AI players in LMICs. Thus, there is a need for open-source and subsidized de-identification services. This also ensures that methods of de-identification are applied, and that the contexts and data on which the tools work are visible to all entities to critique. In regions with ill-defined privacy regulations, these provisions play an important role in creating transparency.
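
As a rough illustration of the kind of transformations an open de-identification tool might apply, the sketch below drops direct identifiers, replaces the patient identifier with a keyed hash, and coarsens age and date fields. All field names and the helper functions `pseudonymize`, `age_band`, and `deidentify` are hypothetical, and these steps alone should not be assumed to satisfy HIPAA or any other legal standard; keyed hashing is pseudonymization, which is weaker than anonymization.

```python
import hashlib
import hmac

# Hypothetical direct identifiers to drop; real rules depend on local law.
DIRECT_IDENTIFIERS = {"name", "phone", "address"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an ID with a keyed hash so records stay linkable
    without exposing the original identifier."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def age_band(age: int, width: int = 10) -> str:
    """Generalize an exact age into a band such as '40-49'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def deidentify(record: dict, secret_key: bytes) -> dict:
    """Return a new record with identifiers removed or coarsened."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    out["patient_id"] = pseudonymize(record["patient_id"], secret_key)
    if "age" in out:
        out["age_band"] = age_band(out.pop("age"))
    if "encounter_date" in out:
        # Keep only the year of an ISO date (YYYY-MM-DD).
        out["encounter_year"] = out.pop("encounter_date")[:4]
    return out


if __name__ == "__main__":
    key = b"replace-with-a-secret-held-by-the-data-fiduciary"
    record = {"patient_id": "P-001", "name": "A. Patient", "phone": "555-0100",
              "age": 47, "encounter_date": "2020-01-15",
              "diagnosis_code": "E11.9"}
    print(deidentify(record, key))
```

Publishing such rules as open code is what allows the methods, and the contexts they were designed for, to be critiqued by all entities.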

While a wide array of de-identification tools exists, they may need to be adapted to local norms and sensitivities. Establishing open source programs by collectives or governments in LMICs that collaborate with established programs, such as the Privacy Engineering Program of the United States National Institute of Standards and Technology, will help set a cohesive direction for a country’s healthcare AI industry. In contrast, an industry with parallel and disparate standards will need to be matched and corrected painstakingly as the ecosystem develops, thus stalling sectoral development.

b) Open source data-banks

A significant portion of health data across the world exists as unstructured data and information systems tend to be manual and stowed away in physical files. While efforts to collate the large datasets in LMICs are underway, they are often complicated by the decentralized nature of healthcare systems. Despite challenges, the digitization and systematization of health systems have multiple merits permeating through all levels of healthcare personnel. Availability of quality datasets is also essential for training AI models to run effectively and efficiently.

Open data-banks represent a sustainable way of managing health data. They allow for stakeholders to contribute and retrieve from a transparent, publicly managed resource with defined guidelines for usage. Public scrutiny of data also creates enhanced accountability on the government and in turn leads to better quality data.

Given that collecting representative health data is often cost-prohibitive, it frequently serves as an entry barrier to entities building AI health interventions. In order to make solution development economically feasible as well as avoid bias in the healthcare models being developed, LMICs need to build a robust repository of datasets that is representative of their populations. Research institutions and non-profit organizations such as Child Health and Development Studies or OpenfMRI have created several such health data repositories. A concerted institutional push for the creation of such databanks under a single platform in LMICs will be the first step in kick-starting a local healthcare AI hub.

Structures for such data-banks have already been detailed by several countries including India, which has proposed a multilayered health information exchange. This policy also stipulates that data is stored in local databases while keys are stored in a centralized database, thus providing for enhanced data security and access control. While the delineated system is meant to maintain personal health records, an additional layer can be added to aggregate or de-identify the data so that it can be shared openly without infringing on personal privacy. The Indian Government has also planned the creation of the National AI Resource Platform (NAIRP), which is conceptualized to serve as an open data, knowledge, and innovation platform.
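
One way such an aggregation layer could work is sketched below: individual records are reduced to group counts, and groups smaller than a threshold are withheld so that rare combinations cannot point to an individual. The function `aggregate_counts`, the field names, and the threshold of 5 are assumptions made for illustration; they are not drawn from the Indian proposal.

```python
from collections import Counter


def aggregate_counts(records, group_fields, min_cell_size=5):
    """Count records per group and suppress groups below min_cell_size."""
    counts = Counter(tuple(r[f] for f in group_fields) for r in records)
    return [
        {**dict(zip(group_fields, key)), "count": n}
        for key, n in sorted(counts.items())
        if n >= min_cell_size  # small cells are withheld, not published
    ]


if __name__ == "__main__":
    # Hypothetical record-level data held in a local database.
    records = (
        [{"district": "A", "diagnosis_code": "E11"}] * 6
        + [{"district": "B", "diagnosis_code": "E11"}] * 2
    )
    for row in aggregate_counts(records, ("district", "diagnosis_code")):
        print(row)
```

In this example only the district with six cases would be published; the group of two is suppressed.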

Open data platforms also allow for other services to be easily deployed, which can add value to the stakeholders actively using the system. Data segmentation applications are one such tool. While preparing data for machine learning purposes may involve extensive annotation and segmentation of medical data, classification and tagging of incoming data allows datasets to be linked and easily searchable. Deployment of such tools especially on platforms in LMICs may enable resource constrained public health hospitals to add data without the additional hassle of tagging and segmenting them. The data thus coming in can add tremendous value to healthcare professionals, policy makers, and technologists as data of communities, for whom public healthcare is the only option, often is relegated to filing cabinets. Further, these platforms can host population segmentation tools which enable the development of integrated service models that can target the needs of specific populations. Open data banks hold the promise of offering an effective solution to LMICs for creating truly representative data sets where all can actively participate.

c) Annotation Tools

Most AI models employ at least partial supervised learning, which involves an algorithm learning from specific predefined pointers and cues in the data it is fed. In order to enable machines to learn, data has to be labeled to the desired degree and accuracy (data annotation). This includes everything from image annotation to content categorization. Annotation also varies as per the depth, from labeling an image to precisely marking the boundaries of the required object to be identified. The depth of annotation and the spectrum of data that is fed to train the AI often dictates the accuracy to which the algorithm will be able to compute the necessary attributes.

According to some estimates, around 80% of AI project time is currently spent on data preparation, which involves significant manual labor. With the ever-increasing amount of patient data generated in hospitals and the need to support patient diagnosis with this data, computerized automatic and semiautomatic algorithms are a promising alternative to human annotation. Currently, there are several such annotation tools available, ranging from semantic segmentation to polylines that can be deployed in a variety of clinical contexts, from cancer detection to dentistry. However, error-free accuracy of these automated tools is paramount to avoiding adverse medical outcomes.

Annotation tools also serve as critical enablers in the digital ecosystem by supporting model development and classification of medical data. As these annotation tools form a significant part of any digital health setting, ensuring that they are accessible, especially in low resource settings, is critical to long-term community health security. While tools such as Ratsnake, ITK-SNAP and ImageJ are freely available, they are limited to specific purposes in static settings. ePAD, a quantitative imaging platform developed and maintained by Stanford University, is an open source tool which supports annotation of imaging based projects. A concerted effort to build and popularize such open source annotation tools under a single platform is required to reduce the burden of annotation on fledgling social health focused AI solutions and ensure the long-term viability and equitable ownership of such solutions, especially in LMICs.

d) Dedicated collaborative spaces and platforms

While advocating the open source development of tools, it is pertinent to acknowledge that peer-production of technologies, with potential to be widely adopted, necessitates active engagement from the developer community and the stakeholders involved. The complexity of any digital health tool often results in developers working in manageable silos, not consulting others who may have built similar tools albeit for different contexts. Breaking down barriers for collaboration is critical to encouraging open source tool development.

Dedicated platforms and spaces with tools which allow for such collaboration may help in actively engaging and sustaining interest of all stakeholders. Open source platforms in the long term can host in-depth health datasets, micro-architectures for products, virtual testing environments and even connect developers with programmatic implementers.

In the initial phases of an ecosystem, existing platforms such as Kaggle Kernels or Google Colab can be used to create fully functional repositories of health notebooks with code, data, and descriptions, incorporating currently accepted digital health standards. These collaborative spaces, when built specifically within the operating environment of a nation’s IT infrastructure norms, may ease the translation of code from the cloud to implementation on the ground using existing IT infrastructure. This may further boost the confidence of local developers in regulatory consistency and help in building a concerted national effort to solve public health problems. As discussed earlier, these platforms may also enable interoperability by allowing for wider adoption of set standards and established micro-architectures, enabling LMICs to sidestep early on the problems of disaggregated digital health systems found in developed economies.

On the global scale, leveraging open source libraries, packages, and open platforms such as Jupyter notebooks will be key for collaboration. OpenMRS is one such tool, which has leveraged platforms to build and allow for quick adoption of its open source enterprise electronic medical record system platform. Creation of such global collaborations will be critical in the space of AI as they allow for datasets and regional norms to be shared and worked on simultaneously for a common objective. Project ORCHID (Open Collaborative Model for Tuberculosis Lead Optimization) is one such project, which is currently working on a novel drug candidate for TB by working with researchers across the world and combining their data to help create a ‘global genomic TB avatar’.

e) Peer Review Platforms

Publication of scientific research is necessary in emerging fields such as AI in medicine in order to create a common knowledge pool and enable researchers and practitioners to build off each other’s work. Likewise, peer reviews serve a critical role in emerging fields by ensuring that only accurate, relevant conclusions contribute to the sector’s discourse. As AI in healthcare involves applications of niche algorithms to specific fields of medicine which directly or indirectly influence the health outcomes of an individual, it is critical that conclusions based on unproven and unscientific research be called out.

AI development involves cross functional teams of AI experts and medical researchers working with engineers to build solutions. The consequences of these solutions may be beyond the patient or the medical community and often the developers or researchers may not be equipped to identify or comprehensively address these consequences. This calls for the AI advances, including underlying data and algorithms to be published, and these published works to be reviewed by a larger community based on a strong bioethical framework built specifically for the field. Organizations like the Committee on Publication Ethics (COPE) define the ethics of scientific publishing which provides an ethical baseline for publications to follow. There is a need for organizations like COPE to define bioethical standards for all research in AI.

Since considerations that emerge from the intersection of AI and medicine are unique, they require a dedicated peer review mechanism for new research. While a few open access journals such as the Journal of Medical Artificial Intelligence do exist, they are extremely rare in the field of scientific publishing. The most prominent medical journals, including those with a high impact score such as The Lancet and The New England Journal of Medicine, lack sections dedicated to artificial intelligence in the field. While these platforms do publish research on AI, papers are often behind a paywall and underlying data may not be accessible even for subscribers.

In order to review new research efficiently and accurately, there is a need to build a dedicated platform which can readily provide the data required for clinical review. There remains large scope for innovation if the clinical data from established research data banks and journals, which was collected and employed for reaching clinical or pharmaceutical conclusions, is made accessible. This data can be reused for the development of effective AI. Close integration of such platforms with open source collaborative networks, where the source code is openly published, will help reduce the cycle time for development as well as increase the credibility of peer-reviewed AI interventions.

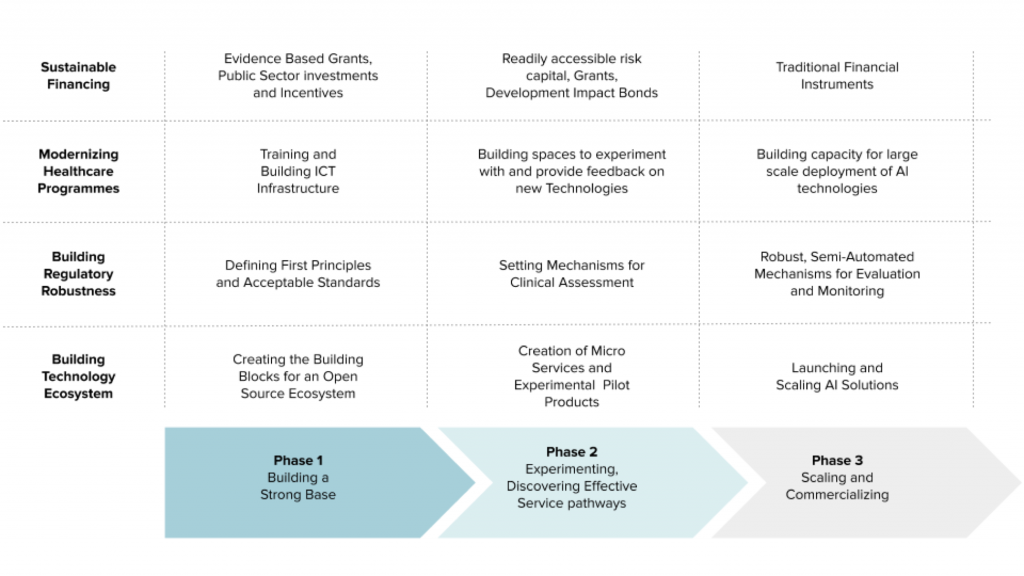

V. Sustainable Financing

While developing a technology-enabled ecosystem requires a set of base principles, technology products, public education, capacity building, and widespread adoption, sustaining the ecosystem requires stable sources of finance. Digitizing healthcare involves reorienting existing approaches and operations, which may disrupt ongoing activities but promises cost efficiencies and better health outcomes in the future. The United States, where most healthcare is provided by formal entities, spent $14.5 billion in 2019 alone in efforts to digitize its health records and set up an EHR infrastructure. Healthcare in LMICs is provided by a wide range of establishments, from super-specialty hospitals in urban centers to informal and unregistered outpatient health providers in remote rural areas. These multiple settings, along with the expensive digital interventions required to bring in cost-effective AI solutions, will require innovative technical and financial solutions. By planning the finances of a digital health program at the outset, through its different stages of development, deployment, and adoption, policymakers can ensure its long-term sustainability.

The funding mechanisms, even for base open ecosystem building blocks, need not rely solely on governments. Around 60% of healthcare financing in Africa, for example, is derived from private sources. Several initiatives such as the Ghana telemedicine services and MomConnect in South Africa have employed public-private funding models to great effect in different stages of their development. While these examples represent specific healthcare technology interventions, developing an ecosystem of AI in healthcare also requires the adoption of similar models to ensure long-term sustainability.

Canada, for instance, invested $2.15 billion in its digital health systems and has achieved an estimated $16 billion in benefits such as quality, access, and productivity gains from its investments. Developing economies can benefit similarly from investments in the base infrastructure required for the development of AI interventions, as it may help them leapfrog the legacy systems in place. Dedicated funding and commitment by governments for open source building blocks will ensure long-term sustainability until the broader ecosystem takes over the regular maintenance and upkeep of these products.

Initial foundational investments by governments allow them greater autonomy in ensuring base products are accessible to all. Moreover, this investment may force governments to adopt and adhere to the new standards defined for the ecosystem, and to define the paradigm for private industry to interact with the public healthcare system. It may also be prudent for governments to plan for contingency funds and incentives for early adopters in the initial phases of ecosystem development, to drive adoption until it reaches a critical mass.

Philanthropic grants have had a significant and perhaps even a transformational role in the domain of public health. A government push in the domain with regulatory certainty may help in convincing these large funds to invest in developing the base open source ecosystem products. Open source ecosystems also represent an opportunity for these institutions to effectively employ evidence-based grant models owing to the transparency at the core of any peer production model.

As open ecosystems mature, proprietary AI products for public health settings built on top of these tools can attract impact investments that function on the principle of partial capital recovery or that expect a below-market rate of return. Large amounts of risk capital are required for piloting and proving the efficacy of AI solutions. The players who are able to prove efficacy can then access instruments such as development impact bonds to expand further and build a financially viable business in cases where capital may otherwise be inaccessible due to long lead times for returns.

International collaboration in the space of AI is critical. Technologies which may already be deployed in developed economies may be unviable in the developing world due to inadequate economic circumstances. This disparity is seen in the pharmaceutical sector, where innovative drugs with novel, life-saving treatments are priced so as to recoup the R&D costs incurred. As a result, these drugs are not viable in LMICs, where affordability is much lower. It is thus prudent for international collaborations to set up funds to mitigate this eventuality and encourage innovators to develop solutions for populations in LMICs. UNITAID, which manages a grant portfolio of over $1 billion to cater to the immediate health needs of the disadvantaged through innovative initiatives like the Medicines Patent Pool, can provide a model of an international collaboration. Precedents of effective AI solutions may serve as catalysts for LMICs to solve accessibility problems and accelerate a digitally enabled future.

Figure 2: Indicative Phase-Wise Maturity of the AI in Healthcare Ecosystem

The Way Forward

How an industry develops generally depends upon a country’s socio-political context, especially the role of its government. Usually, industries that serve to fulfill the public welfare objectives of the government move gradually from regulated (where government support is at its maximum to kickstart the ecosystem) to free market. In the case of the proposed AI in healthcare ecosystem, an open source approach as described above will lead to enhanced adoption of the standards and tools that will harmonize divergent objectives. This is where the government and the philanthropic funding ecosystem will need to invest collaboratively at the outset, while at the same time focusing on building a robust digital infrastructure and data system.

Initially, certain use cases that either serve as proofs of concept for the larger industry or match urgent public health needs will need to be supported through government guidance or non-profit funding and technical support. However, as investor confidence increases and cost-prohibitive entry barriers decrease, application development atop foundational layers is expected to commence with some degree of commercialization. The open ecosystem approach will also lead to a more cost-effective and commercial architecture for building AI services for the final consumer, based on microservices instead of a monolithic approach.

In a monolithic approach, services are developed and updated afresh for each add-on service, which is investment heavy. This limits reuse in the development of other solutions and thwarts open innovation and interoperability. With microservices, by contrast, the code of an application targeting diabetes patients can in part be used for monitoring COPD patients as well. By using that original platform as a microservice, one could easily replace the component that interfaces with the patient through a glucometer with one that tracks an oximeter, without having to recreate the system. Thus, the government should also promote microservice architecture development, where the functionality of an AI application is distributed into sets of smaller services that have multiple uses.
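The component swap described above can be illustrated with a minimal sketch. It rests on several assumptions: the names `DeviceReader`, `Glucometer`, `Oximeter`, and `MonitoringService` are hypothetical, the numeric ranges are placeholders rather than clinical guidance, and in-process classes stand in for what would be separate networked services in a real deployment.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class DeviceReader(Protocol):
    """Hypothetical contract for the patient-facing device component."""

    def read(self) -> float: ...


@dataclass
class Glucometer:
    """Stand-in for a blood glucose reading service (value in mg/dL)."""

    value: float

    def read(self) -> float:
        return self.value


@dataclass
class Oximeter:
    """Stand-in for an oxygen saturation reading service (value in %)."""

    value: float

    def read(self) -> float:
        return self.value


@dataclass
class MonitoringService:
    """Shared monitoring logic, reused unchanged across conditions."""

    reader: DeviceReader
    in_range: Callable[[float], bool]

    def check(self) -> str:
        reading = self.reader.read()
        return "ok" if self.in_range(reading) else "alert"


# Placeholder ranges for illustration only; NOT clinical thresholds.
diabetes = MonitoringService(Glucometer(250.0), lambda v: 70 <= v <= 180)
copd = MonitoringService(Oximeter(95.0), lambda v: v >= 90)

print(diabetes.check())
print(copd.check())
```

Only the device component and the range rule differ between the two instances; the monitoring logic itself is written once, which is the reuse the microservice argument relies on.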

As the fledgling industry matures, the government’s role will be to guide the market through effective collaborations with industry innovators and civil society, especially for transforming the early patchwork of basic foundational components into more robust and holistic foundational layers. This is specifically needed for increasing data availability by establishing data marketplaces and working with industry to institute reliable synthetic data services. As more AI is developed with the aim of being applied in clinical settings, the government must also move in a consultative and swift manner to establish norms and standards around clinical evaluation, benchmarking, and certification of these technologies. Ethical issues like bias and explainability, as well as legal frameworks around liability norms for adverse events, must also be accounted for.

Further Reading

- Topol, E., 2019. High-performance medicine: the convergence of human and artificial intelligence. Nature Medicine, 25(1).

- Alami, H., Rivard, L., Lehoux, P., Hoffman, S., Cadeddu, S., Savoldelli, M., Samri, M., Ag Ahmed, M., Fleet, R. and Fortin, J., 2020. Artificial intelligence in healthcare: laying the foundation for responsible, sustainable, and inclusive innovation in low- and middle-income countries. Globalization and Health, [online] 16(1).

- Alami, H., Gagnon, M. and Fortin, J., 2017. Digital health and the challenge of health systems transformation. mHealth, 3.

- International Innovation Corps, University of Chicago Trust, 2019. Catalyzing Innovation in Digital Health: Event Proceedings. [online] New Delhi: International Innovation Corps.

About the Authors:

Abhinav Verma is a lawyer who specializes in International Law and a public policy professional working on strengthening health systems.

Krisstina Rao works in healthcare and education, designing grassroots programs and advocating for responsive policy. She is an Amani Social Innovation Fellow.

Vivek Eluri is a healthcare and technology innovation professional. He has worked as a portfolio manager and has designed and implemented technology products.

Yukti Sharma is a tech innovation and public policy professional, advocating for development of ethical and responsible AI. She is a Young India Fellow.

Together they form a project team with the International Innovation Corps, University of Chicago Trust, supported by the Rockefeller Foundation in developing digital health and AI strategies for public health systems in India.

The GeoTech Center champions positive paths forward that societies can pursue to ensure new technologies and data empower people, prosperity, and peace.