Developing ethical principles, standards, and norms to govern the development and use of artificial intelligence is perhaps the most pressing governance challenge of the coming decade. This is underscored by four major international statements on AI governance since 2017: the Asilomar AI Principles (2017); the EU’s 2019 Ethics Guidelines for Trustworthy AI; the OECD Principles on AI (May 2019); and China’s 2018 White Paper on Artificial Intelligence Standardization. Though the Asilomar AI Principles are nongovernmental, they have been endorsed worldwide by more than twelve hundred AI and robotics researchers and institutes, more than twenty-five hundred scientists and engineers, including Stephen Hawking, and tech entrepreneurs including Elon Musk, which suggests widespread consensus. The other efforts, while less-than-firm commitments from individual states, reflect the judgment of supranational governmental institutions (e.g., the EU and OECD) and, in the case of China, national governmental institutions; as such, they can shape policymakers’ decision processes.

While there are different points of emphasis and varying degrees of elaboration and detail, there appears to be substantial overlap on essential ethics and principles. The following amalgam, based on all four efforts, captures the core principles:

• Human agency and benefit: Research on and deployment of AI should augment human well-being and autonomy; preserve human oversight, so that people choose how and whether to delegate decisions to AI systems; and be sustainable, environmentally friendly, and compatible with human values and dignity.

• Safety and responsibility: AI systems should be technically robust; based on agreed standards; and verifiably safe, including resilience to attack, security, reliability, and reproducibility. Designers and builders of advanced AI systems bear responsibility and accountability for their applications.

• Transparency in failure: If an AI system fails, causes harm, or otherwise malfunctions, it should be possible to explain how and why the AI made its decision; that is, there should be algorithmic accountability.

• Privacy, data governance, and liberty: People should have the right to access, manage, and control the data they generate, and AI applied to personal data must not unreasonably curtail an individual’s liberty.

• Avoiding arms races: An arms race in lethal autonomous weapons should be avoided. Decisions on lethal use of force should be human in origin.

• Periodic review: Ethics and principles should be periodically reviewed to reflect new technological developments, particularly those involving deep learning and general AI.

How such ethics are translated into operational social, economic, legal, and national security policies, and then enforced, is an entirely different question. These ethical issues already confront business and government decision makers. Yet none has made clear policy decisions, let alone implemented them, which suggests that establishing governance is likely to be an incremental, trial-and-error process. Deciding standards and liability for autonomous vehicles, data privacy, and algorithmic accountability will almost certainly be difficult. Moreover, as AI becomes smarter, humans’ ability to understand how AI makes decisions is likely to diminish. One first step would be for a representative global forum such as the G20 to reach consensus on principles and then move to codify them in a UN Security Council resolution or through other international governance institutions, such as the International Monetary Fund, World Bank, International Telecommunication Union, or WTO.

An issue of broad social concern, and a test of ethics, is the automaticity of AI predictive judgment. In areas such as job applications, judicial sentencing, prison parole, or even medical assessment, AI’s lack of context and situational meaning, its inability to account for changes in human mindsets and character, and, not least, system flaws argue for using AI as a tool to augment human decisions rather than as an autonomous decision-maker.

One compelling shared interest is AI safety and standards. An important lesson from the evolution of the first digital generation is that failing to build in safety and cybersecurity on the front end makes them far more difficult to add on the back end. This argues for global threshold standards for AI safety and reliability, as well as for efforts by all governments to prioritize R&D investment for that purpose. AI safety is somewhat analogous to fail-safe command and control of nuclear weapons and the need for a secure “second-strike” capability as the basis of deterrence: cooperation, even with adversaries, can be warranted to minimize the risk of accidents. This is particularly true because the barrier to entry for AI, given its large body of open-source research and its dual-use nature, is far lower for small and medium powers than that for nuclear weapons, and, since AI is still an immature technology, more difficult to anticipate.

Autonomous weapons and the future of strategic stability

The impact of the technologies discussed here on national security, the strategic balance, and the future conduct of war has already begun to undermine longstanding assumptions about crisis stability. The existing deployment of near-autonomous systems underscores that autonomous weapons, and their governance, are among several emerging technologies adding new factors to the calculus of strategic stability that policymakers must consider. Some fear the technology may further strain already-beleaguered international humanitarian law, codified in the Hague and Geneva Conventions. The former’s “Martens Clause” says that new weapons must comply with “the principles of humanity,” while Article 36 of Additional Protocol I to the latter calls for legal reviews of new means of warfare. US officials stress that their development and use of AI will be consistent with such humanitarian practices.

The UN Convention on Certain Conventional Weapons (CCW) has been unable to reach consensus on even a definition of autonomous weapons since 2014, a measure of the problem’s complexity. As discussed above, there are degrees of autonomy and levels of human control or supervision; depending on the situation, some might regard a given system as autonomous while others might not. The differences lie in software and algorithms, not hardware. Semiautonomous weapons programmed to defend a ship (with autonomous and human-override modes) by automatically firing at anything that attacks it present one such ambiguous case. Loitering munitions like the HAROP, which can linger for hours, are more fully autonomous: what if the situation changes and the weapon makes a flawed lethal decision? Could autonomous weapons lead to escalation without human decision-making? None of the major powers, all of which are developing cutting-edge AI, has endorsed calls to ban autonomous weapons. If one nation decides to deploy a “Terminator,” could an arms race be avoided?

Though autonomous weapons raise unique questions of control and responsibility, there are parallels with the ethics of nuclear weapons, and that history points to the limited success of efforts to ban weapons technologies. The current powerful normative taboo against nuclear use is a reaction to the horrendous devastation demonstrated by their first use in 1945. Similarly, it is no coincidence that the spate of Cold War arms-control accords and test bans followed the near-catastrophe of the 1962 Cuban Missile Crisis. Other near-universal treaties codifying norms that prohibit the use of chemical and biological weapons (albeit with less-than-perfect adherence) grew out of revulsion against the use of poison gas in World War I. If there is a pattern in the history of efforts to ban the use of terrible weapons (most often after their first use), it is that success is uncommon, and that norms tend to work best when any advantage of use is outweighed by a perception of mutual vulnerability. In theory, AI should fall into that category.

Autonomous weapons, however, are only one of several new technology-driven factors changing the equation of nuclear stability and, potentially, the balance of power. Crisis stability is threatened if decision makers, due to uncertainty, miscalculation, misunderstanding, or a perception of vulnerability, feel that their second-strike capability is at risk or undermined. Avoiding crisis instability is the essence of strategic equilibrium. But the unregulated emerging technologies discussed above invalidate traditional assumptions about effective deterrence and require new understandings, restraints, or counter-technologies to sustain a framework for strategic stability.

To the degree that the United States, Russia, or China operationalizes military applications of AI first, or holds an advantage in hypersonic, cyber, or anti-space weapons, that state might gain a perceived strategic advantage in conflict scenarios. Obvious examples include swarms of smart drones; disabling C4ISR (Command, Control, Communications, Computers, Intelligence, Surveillance, and Reconnaissance) capabilities with laser antisatellite shots; hacking command and control; and hypersonic missiles preempting second-strike assets. Beyond 2035, quantum computing could also provide a first-mover strategic advantage in the form of unhackable communications, navigation without GPS, and other sensing capabilities.

These new technology-driven risk factors require major powers to rethink what constitutes a durable framework for strategic stability. The problem is less about nuclear-arms reductions than about transparency and developing new understandings and restraints to govern the emerging technologies affecting crisis stability. This may include bans on autonomous weapons, hypersonic missiles, and glide vehicles, as well as a new code of conduct for space and anti-space activities and for cybersecurity.

While some of these issues can be discussed in existing multilateral forums, a strategic dialogue (initially among the United States, Russia, and China, and later India) is a sine qua non for finding a new balance of interests. The starting point would be an extension of the US-Russia New START agreement, which expires in 2021, followed by an invitation to China to join a new strategic dialogue. New norms, rules, and codes of conduct for all the emerging technologies affecting crisis stability appear to be the best, if difficult, protracted, and bumpy, road to global governance. Clearly, in the present climate of distrust, avoiding the perfect storm of technology triumphing over governance will be a challenge. If history is a guide, it may take a crisis on the scale of the Cuban Missile Crisis, or actual conflict, to introduce new sobriety to the debate.

Regardless, the stark reality of mutual vulnerability among all the key actors holds out hope. Can the major powers take a deep breath, reassess their enlightened self-interest, and begin to explore a balance of interests on the rules, norms, and institutions for managing the emerging technologies on which global stability and prosperity will turn?

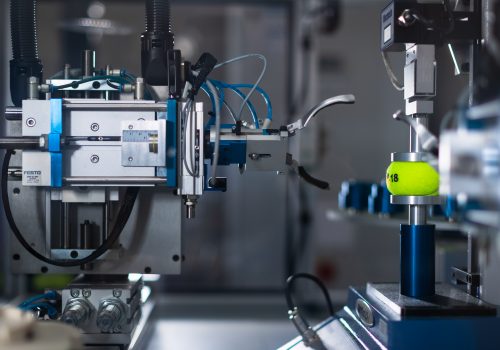

Image: A U.S. Air Force MQ-9 Reaper drone sits in a hangar at Amari Air Base, Estonia, July 1, 2020. U.S. unmanned aircraft are deployed in Estonia to support NATO's intelligence-gathering missions in the Baltics. REUTERS/Janis Laizans