5G/Internet of Things

Emerging technologies will keep evolving as the questions raised continue to be debated. Yet, the future has arrived. The next wave of widely applied emerging tech over the next two to five years will be 5G, the next generation of wireless technology, which is up to one hundred times faster than the current 4G. Unlike previous mobile systems, 5G will use extremely high-frequency bands of the spectrum, called “millimeter bands.” This requires substantial investment in hundreds of thousands of cellular radio antennas and other infrastructure.

But 5G is an evolving technology, and other approaches are gaining favor, particularly in Japan and the United States. One prominent example is the idea of a software-based, rather than hardware-based, system known as the Open Radio Access Network (ORAN). The ORAN concept creates a new network model using software to replicate signal-processing functions. Thus, rather than relying on large, complex cell towers, 5G can reduce the size of its base stations, allowing them to be deployed more densely, less conspicuously, and in a geographically dispersed manner.

5G will be a foundational enabler, the next milestone in Fourth-Industrial-Revolution technology. Artificial intelligence will power much of the promise behind 5G, and 5G will, in turn, spur the growth of the Internet of Things (IoT). No less important, IoT will connect billions of sensors and devices to each other, creating massive amounts of data, which in turn makes AI more intelligent. US, European, and Asian wireless carriers are beginning to deploy early versions of 5G. Superfast and with low latency (delay), 5G will respond in real time, driving the IoT that will have a transformational impact on advanced manufacturing (sensors, robotics), consumers, and national security, including self-driving vehicles, remote surgery, finance, smart grids and cities, precision agriculture, and autonomous robots and weapon systems in the 2020s. McKinsey forecasts that IoT, powered by 5G, will add $3.9–$11.1 trillion in value by 2025.14 Because 5G is transformational, however, cybersecurity is a critical concern. 5G/IoT will add a new layer to the internet, transforming it. It will increase usage and networks, connecting billions of sensors to millions of computers and networks and the cloud. It will also add a layer of vulnerability on top of an already vulnerable communications platform, which can be readily hacked.

Secure 5G and the IoT will have a transformative economic and national security impact, but there is concern that cybersecurity will not be adequately built into 5G. Thus far, public/private-sector cooperation among all stakeholders has led to initial global technical and engineering standards. The nation that leads in developing and widely deploying 5G technology will have an important “first mover” advantage, with both economic and national security consequences. The United States and China are neck and neck in the global race to develop and deploy 5G technology, both internally and worldwide.

There is no shortage of tech companies from the United States and likeminded countries producing components for 5G, from chips to antennae. But massive research and development (R&D) by Huawei and other Chinese firms has made them key players, particularly in 5G infrastructure. Huawei owns 1,529 standard patents, and Chinese firms hold 36 percent of such patents. As the UK decision to accept up to 35 percent of Huawei equipment in its 5G system suggests, global firms will need to license numerous Chinese patents regardless of the geopolitics; Chinese firms will likewise need to license patents from global firms.15 There is intense competition between Qualcomm and Huawei for 5G chips; Ericsson, Nokia, and Samsung are also in the first tier. Samsung and Verizon have a major agreement, with the former supplying 5G wireless-access technology to the latter, which seeks to accelerate US deployment. Huawei and ZTE are believed to be ahead in antennae and base-station architecture, though Samsung, Ericsson, and Nokia are competitive. Japan (both its government and its private mobile operators) is investing $45 billion by 2023, and plans to roll out 5G at the 2021 Olympics.

5G is integral to China’s “Made in China 2025” plans to localize value chains and reduce dependency on foreign inputs. The intense involvement of China in creating global 5G technical and engineering standards, and its holding of patents, suggests that its plan, called “China Standards 2035,” is well on its way. China has taken advantage of reduced US engagement in international standard-setting bodies to gain advantage. Cybersecurity concerns have led to a concerted US effort to persuade allies to avoid using Huawei to build 5G networks. 5G geoeconomics are part of China’s “Digital Silk Road” ambitions to connect the Eurasian landmass. Similarly, Beijing is also trying to build an integrated digital infrastructure in Southeast Asia. Huawei and other Chinese firms are actively seeking to export digital infrastructure around the globe. There is a risk of fragmented markets and, as 5G technology evolves, conflicting standards.

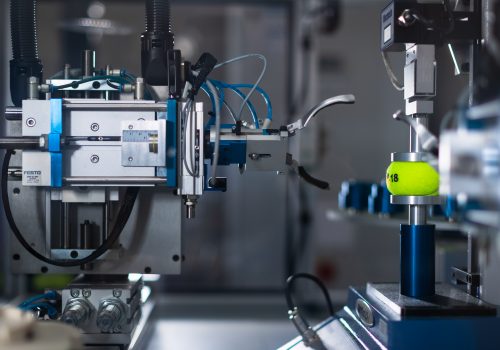

Regardless of the geopolitics of 5G, the breadth, scope, and speed of IoT applications will have a major economic impact over the coming decade, across a wide spectrum of sectors. Perhaps most prominently, the thousands of sensors, and the real-time vehicle-to-vehicle communication they would enable, will accelerate the deployment of autonomous cars, buses, trains, ships, and trucks. This will initially happen for fleets, but by the 2030s, a new business model may prove viable, with ride firms like Uber, Didi, and Lyft altering how people think about ownership and use in terms of transport. The IoT will accelerate advanced manufacturing, using sensors for predictive industry management.

For rural areas and global food production (and for urban vertical farming), IoT is a critical facilitator for precision farming, defined as everything that makes farming more accurate and controlled when it comes to growing crops and raising livestock. It is key to farm management 2.0, connecting a wide array of tools, including Global Positioning System (GPS) guidance, control systems, sensors, robotics, drones, autonomous tractors, and other equipment. IoT will enable cities to become much smarter, with more efficient traffic control, environmental monitoring, and management of utilities like smart grids that increase energy efficiency. IoT will also provide cheaper, more efficient healthcare, health monitoring, and diagnosis, and has already benefited advanced manufacturing with sensors for predictive maintenance and safety, which help reduce costs. Again, one big policy challenge, cybersecurity, remains, as billions more devices and computers can become hackable, with potentially disruptive consequences.

Artificial intelligence

Artificial intelligence, which is fundamentally data plus algorithms, promises to be a gamechanger, whether in terms of economic or battlefield innovations. It is an enabling force, like electricity, a platform that can be applied across the board to industries and services: think AI plus X. One helpful way to think about AI is to differentiate its applications. In an interview with Martin Wolf of the Financial Times, leading Chinese AI guru Kaifu Lee “distinguishes four aspects of AI: ‘internet AI’ — the AI that tracks what you do on the internet; ‘business AI’ — the AI that allows businesses to exploit their data better; ‘perception AI’ — the AI that sees the world around it; and ‘autonomous AI’ — the AI that interacts with us in the real world.”

AI is rapidly moving beyond programmed machine learning, such as robots doing repetitive human work, and single tasks like facial or voice recognition or language translation. AI is already becoming part of individuals’ daily lives via “personal assistant” robots like Amazon’s Alexa and Google Home, and, of course, in industrial and personal-service robots replacing many human functions. Moreover, there is an increasingly low bar to entry, as transparency among AI researchers has spawned wide access. There are several open-source websites to which leading researchers from top tech firms such as Google post their latest algorithms. TensorForce, for example, also enables one to download neural networks and software, with tutorials showing techniques for building them. This example of the norm of global collaboration in the ecosystem of innovation underscores the downside risks to innovation from technonationalism.

By 2030, AI algorithms will be in every imaginable app and pervasive in robots, reshaping industries from healthcare and education to finance and transportation, as well as military organization and missions. AI is already starting to be incorporated into military management, logistics, and target acquisition, and the military is exploring the use of AI to augment human mental and physical capacity. With regard to cybersecurity, it is an open question whether AI’s algorithms will provide enhanced cybersecurity, or an advantage for future hackers.

AI has already become a tool of authoritarianism, with facial recognition and big-data digital monitoring used for social control. China is not only implementing such policies, but exporting the technology. Similarly, AI has the potential of exacerbating the already vexing problem of weaponized social media, which has become a threat to social and political cohesion. As technology for trolls to create fake social media accounts improves, there is more risk not only of fake tweets and emails, but of video technology that can contrive fake videos with people appearing to say and do things they have never done. At the same time, AI could be a net plus as a tool for unmasking and attributing the trolling. Yet, it has been shown that AI is vulnerable to “spoofing,” by injecting false images into its coding.

AI is evolving beyond one-dimensional tasks of what is called “narrow AI” to “general AI.” The former refers to single tasks, such as facial recognition or language translation. The latter refers to AI that can operate across a range of tasks, using learning and reasoning without supervision to solve any problem, learning from layers of neural-network data, which is in its infancy. Two thirds of private-sector investment in AI is in machine learning—in particular, deep learning using neural networks, mimicking the human brain to use millions of gigabytes of data to solve problems. Most famously, AlphaGo beat the world champion at Go, a complex game with millions of moves. It was fed data from thousands of Go matches, and was able to select the best possible moves to outmaneuver its opponent. AI is demonstrating a growing capability to learn autonomously by extrapolating from the data fed into the algorithm.

The debate over AI remains unsettled. Some prominent technologists think AI will become as smart as, or smarter than, humans in a decade, a “Terminator scenario.” Others say progress is incremental, and such breakthroughs are a century or more away. Consider that scientists don’t know how all of the roughly 86 billion neurons in the human brain work. How well, then, can AI replicate them? AI may be able to sort through thousands of job applications, or use data to suggest criminal prison sentences. But, absent human judgment, AI can’t analyze character, social skills, or personal traits that don’t show up in resumés, or how a person may change in prison.

With regard to the prospect of autonomous systems, AI lacks understanding of context and meaning: Can it tell if someone is pointing a real gun or a toy pistol at it? Some leading neurologists are skeptical, arguing that intelligence requires consciousness. Emotions, memories, and culture are parts of human intelligence that machines cannot replicate. There is a growing body of evidence that AI can be hacked (e.g., misdirecting an autonomous car) or spoofed (e.g., identifying targets with false images). One potential problem for many applications is that humans don’t know how AI knows what it knows, or how its decision-making process worked. This makes it difficult to test and evaluate, or to know why it made a wrong decision or malfunctioned. This will only be more difficult as deep learning becomes more sophisticated. It also suggests a compelling argument for, as a guiding principle and operational norm, having humans “in the loop,” if not in control of AI decision-making.

Catastrophic risks

The urgency of developing a global consensus on ethics and operating principles for AI starts from the knowledge that complex systems fail. Complex systems like supercomputers, robots, or Boeing 737 jets, with multiple moving parts and interacting systems, are inherently dangerous and prone to fail, sometimes catastrophically. Because the failure of complex systems may have multiple sources, sometimes triggered by small failures cascading to larger ones, it can require multiple failures to fully understand the causes. This problem of building in safety is compounded by the fact that as AI gets ever smarter, it is increasingly difficult to discern why and how AI decided on its conclusions.

The downside risks in depending solely on an imperfect AI, absent the human factor in decision-making, have already begun to reveal themselves. For example, research on facial recognition has shown bias against certain ethnic groups, apparently due to the preponderance of white faces in the AI’s database. Similarly, as AI is employed in a variety of decision-making roles, such as job searches or determining parole, absent a human to provide context, cultural perspective, and judgment, bias becomes more likely.

Robots: killing jobs and/or people?

Automated systems, machines replicating human activity, have been around for many decades (e.g., an automated teller machine (ATM)). Yet, of late, robots have become icons (or, in science-fiction movies, demons) of the tech revolution. Why? Until this century, industrial robots, mainly deployed in auto-assembly plants (and, more recently, in the electronics industry) were not standardized, had no software, and were not connected to the Internet. But, over the past two decades, as ICT became more capable and computers more powerful, sensing-technology robots have become cheaper, more ubiquitous, and more connected (for example, Xbox sensors are used to animate robots). There are now more than three million robots, and thirty-one types of personal robots (e.g., Roomba robot vacuums, or drones).

Baxter, a humanoid robot created at the beginning of this decade, is illustrative of the new forms and capabilities of robots. Baxter is mobile and normal sized on its pedestal (about five feet, ten inches tall), with dexterous arms, sensing software that allows it to be “trained” by simply copying human actions, and software that can be updated. At $22,000, it is a fraction of the cost of most industrial robots. Telepresence robots are being used in hospitals, allowing doctors to remotely assess patients and surgeons to perform surgery remotely, at even lower costs.

Despite many fears, AI-enabled robots have not, to date, generated substantial net job losses. Some studies suggest the opposite: increased productivity and more jobs. As discussed above, the five countries with the most deployed robots (the United States, China, Japan, South Korea, and Germany) all have near-record-low unemployment. There remains heated debate over whether robots will displace humans, leading to a dystopia of bored, unemployed workers, or will generate new jobs requiring new skills. There is growing evidence of humans working alongside robots, whose physical dexterity remains challenged, particularly where human judgment or context is involved, from automated call centers to “pilots” of drones thousands of miles away. Still, perhaps the greatest concern about these emerging technologies is their socioeconomic consequences: that AI-driven automation will mean the loss of jobs. The jury is still out on whether more jobs will be lost than will be created. A McKinsey Global Institute study examining scenarios across forty-six countries forecast that up to one third of the workforce could be displaced by 2030 by AI-driven technologies, with a midpoint of 15 percent. A 2018 Organisation for Economic Co-operation and Development (OECD) study concluded that 14 percent of jobs in OECD nations are highly automatable, and an additional 32 percent could be changed. More optimistically, a Deloitte study found that technology has created more jobs than it destroyed over the past one hundred and forty-four years. While anticipating “creative destruction” of jobs lost, and new industries and jobs created, it argues for a net gain. Nonetheless, AI and robotics will undoubtedly transform the future of work, and adapting and rethinking education and training to new skills required for a twenty-first-century workforce are looming policy issues that most governments have yet to fully address. Some technologies appear likely to create new job opportunities. For example, 3D printing, with a relatively low bar of entry, could spark local manufacturing outside of major industrial areas, shrink global supply chains, reduce transport costs, and localize trade.

Quantum computing

Looking over the horizon toward 2040, quantum computing is a good illustration of why the world may only be at the front end of the technology revolution. Quantum computing is based on the principles of quantum mechanics, which revealed that, at the atomic and subatomic levels, energy and matter can exist in more than one state simultaneously. This behavior of subatomic particles was so strange that Albert Einstein once referred to it as “spooky action at a distance.” Unlike the binary bits of current computers, which are either one or zero, quantum bits, known as qubits, can exist in a combination of both states at once. With a sufficient number of qubits that are stable long enough, a quantum computer would be able to perform exponentially more calculations than current supercomputers. For certain problems, it could find in a short time solutions that might take years with current computers.
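
The scaling behind the qubit description above can be illustrated with a little arithmetic. The following is a minimal classical sketch, not a program for a real quantum computer: it assumes the standard textbook picture in which a register of n qubits is described by 2^n amplitudes, and the function names (`amplitudes_needed`, `equal_superposition`, `measurement_probabilities`) are hypothetical names chosen for illustration.

```python
import math


def amplitudes_needed(n_qubits):
    """Number of amplitudes a classical machine must track for n qubits."""
    return 2 ** n_qubits


def equal_superposition(n_qubits):
    """Amplitudes for a register where every bit pattern is equally weighted."""
    dim = amplitudes_needed(n_qubits)
    amplitude = 1.0 / math.sqrt(dim)
    return [amplitude] * dim


def measurement_probabilities(amplitudes):
    """Probability of observing each bit pattern: the squared magnitude."""
    return [abs(a) ** 2 for a in amplitudes]


if __name__ == "__main__":
    # A classical n-bit register holds exactly one of its 2**n patterns;
    # an n-qubit register is described by a weight on every pattern at once.
    for n in (1, 2, 10, 72):
        print(n, "qubits ->", amplitudes_needed(n), "amplitudes")

    probabilities = measurement_probabilities(equal_superposition(3))
    print("3-qubit outcome probabilities sum to", round(sum(probabilities), 9))
```

The point of the sketch is only the growth rate: each added qubit doubles the number of amplitudes, which is why simulating even modest qubit counts quickly outgrows classical memory.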

Such quantum computer capabilities would enable computations otherwise not possible in areas like chemistry, such as modeling that could produce new materials by simulating the behavior of matter at the atomic level. Similarly, Wall Street firms are interested in quantum computers because they could enable vastly more algorithm possibilities and more precise risk-management systems, modeling financial markets, complex bank exposures, and possible losses. Not least, quantum computer power could have a huge impact on deep-learning AI, making it exponentially more powerful. Cryptography may be the most disruptive application of quantum computers. Quantum computing would revolutionize cryptography, with techniques that are theoretically impossible to break. Conversely, quantum computers could allow heretofore unbreakable encryption to be deciphered. The possibility of perfect cybersecurity, writ large, has transformative implications for intelligence, national security agencies, and military forces.

Quantum computing may sound like science fiction, but look no further than the Chinese launch of the first quantum satellite in 2016 to grasp that it is already a major component of R&D portfolios of major tech firms (led by IBM, Microsoft, Google, and Intel), several dozen well-financed startups, and the governments of at least fourteen countries (led by the United States, China, and the European Union). China has made developing quantum computers a major element of its tech strategy. Total quantum R&D spending by national governments exceeds $1.75 billion. Quantum science could be applied to communications, radar, sensing, imaging, and navigation. This could change the calculus of defense investment for the United States and other major powers, and helps explain the substantial Chinese investment in quantum R&D. Realizing the possibilities of quantum computing will require new algorithms, software, programming, and, likely, other technologies yet to be conceived. There are several different types of qubits, and efforts at prototypes to date have not gone beyond seventy-two qubits, far fewer than are needed for achieving the capabilities described above. Some project that the types and uses of quantum computers will be varied, and may be limited with regard to functions, not getting beyond tens of qubits for the foreseeable future. Estimates vary on when fully operational quantum computers will realize all their possibilities. As an analysis in Scientific American put it: “If 10 years from now we have a quantum computer that has a few thousand qubits, that would certainly change the world in the same way the first microprocessors did. We and others have been saying it’s 10 years away. Some are saying it’s just three years away, and I would argue that they don’t have an understanding of how complex the technology is.”

Image: Frank V., Unsplash