Broken trust: Lessons from Sunburst

Table of Contents

Executive summary

Introduction

I—Sunburst explained

II—The historical roots of Sunburst

CCleaner

Kingslayer

Flame

Able Desktop

WIZVERA VeraPort

Operation SignSight

Juniper

Trendlines leading to Sunburst

III—Contributing factors to Sunburst

Deficiencies in risk management

Hard-to-defend linchpin cloud technologies

Brittleness in federal cyber risk management

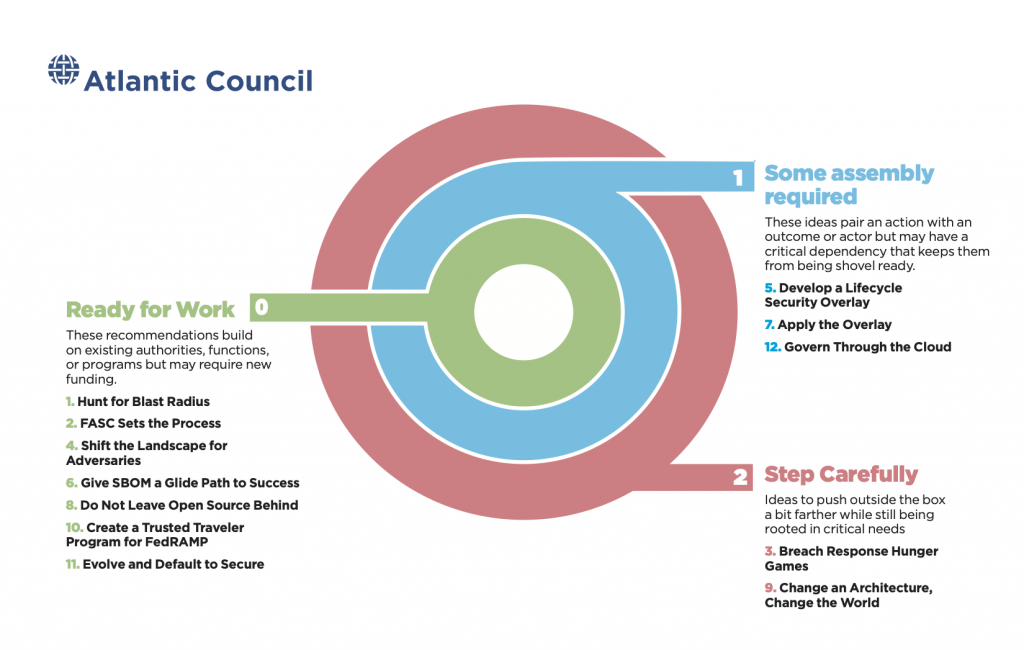

IV—Toward a more competitive cybersecurity strategy

Seeking flow

Build better on what works (or could)

Recommendations

Ruthlessly prioritize risk

Improve the defensibility of linchpin software

Enhance the adaptability of federal cyber risk management

Conclusion

About the authors

Acknowledgements

Executive summary

The Sunburst crisis was a failure of strategy more than it was the product of an information-technology (IT) problem or a mythical adversary. Overlooking that question of strategy invites crises larger and more frequent than those the United States is battling today. The US government and industry should embrace the idea of “persistent flow” to address this strategic shortfall, emphasizing that effective cybersecurity is chiefly about speed, balance, and concentrated action. Both the public and private sectors must work together to ruthlessly prioritize risk, make linchpin systems in the cloud more defensible, and make federal cyber-risk management more self-adaptive.

The story of trust is an old one, but the Sunburst cyber-espionage campaign was a startling reminder of the United States’ collective cyber insecurity and the inadequacy of current US strategy to compete in a dynamic intelligence contest in cyberspace. The compromise of SolarWinds, part of the wider Sunburst campaign, has had enormous consequences, but, as supply-chain attacks go, it was not unprecedented, as demonstrated by seven other events from the last decade.1 Trey Herr, William Loomis, June Lee, and Stewart Scott, Breaking Trust: Shades of Crisis across an Insecure Software Supply Chain, Atlantic Council, July 27, 2020, https://www.atlanticcouncil.org/in-depth-research-reports/report/breaking-trust-shades-of-crisis-across-an-insecure-software-supply-chain.

Sunburst was also a significant moment for cloud computing security. The adversary inflicted the campaign’s most dramatic harm by silently moving through Microsoft’s identity software products, including those supporting Office 365 and Azure cloud services, and vacuuming up emails and files from dozens of organizations. The campaign raises concerns about the existing threat model that the major cloud service providers (Amazon, Microsoft, and Google) use for their linchpin services, and about the ease with which users can manage and defend these products. For cloud’s “shared responsibility” to work, cloud providers must build technology users can actually defend.

Three overarching lessons emerge from studying the Sunburst campaign. First, states have compromised sensitive software supply chains before. The role of cloud computing as a target is what takes Sunburst from another in a string of supply-chain compromises to a significant intelligence-gathering coup. Second, the United States could have done more to limit the harm of this event, especially by better prioritizing risk in federal technology systems, by making the targeted cloud services more easily defensible and capable by default, and by giving federal cybersecurity leaders better tools to adapt and govern their shared enterprise.

Third, Sunburst was a failure of strategy much more than it was just an IT risk-management foul-up or the success of a clever adversary. The United States government continues to labor under a regulatory model for software security that does not match the ways in which software is built, bought, or deployed. Adding vague new secure development standards to an already overbuilt system of unmet controls and overlapping committees is not a recipe for success. Meanwhile, industry is struggling to architect its services to simultaneously and effectively defend against the latest threats, account for overlapping government requirements, and remain competitive, especially in the market for cloud services.

Observers should recognize Sunburst as part of a disturbing trend: an ongoing intelligence contest between the United States and its adversaries in which the United States is giving up leverage due to technical insecurity, deficient policy response, and a shortfall in strategy. The response to Sunburst must lead to meaningful action from both industry and the policymaking community to improve the defensibility of the technology ecosystem and position the United States and its allies to compete more effectively in this intelligence contest.

The Sunburst crisis can be a catalyst for change and, while near-term reforms are practicable, change must extend beyond shifting how the United States buys technology or takes retribution against an adversary. The United States and its allies must acknowledge that this is a fight for that leverage. In an intelligence contest, tactical and operational information about an adversary—such as insight on forthcoming sanctions or the shape of a vulnerable network—is strategic leverage. The policymaking community must work with industry to assist defenders in becoming faster, more balanced, and better synchronized with offensive activities to ensure cyberspace remains a useful domain—one that advances national security objectives.

Timeline

“US Homeland Security, thousands of businesses scramble after suspected Russian hack”

“Microsoft’s role in SolarWinds breach comes under scrutiny”

“Biden chief of staff says hack response will go beyond ‘just sanctions’”

“SolarWinds breach ‘much worse’ than feared”

“SolarWinds hack forces reckoning with supply-chain security”

“Biden orders US intelligence review of SolarWinds hack”

“SolarWinds attack hit 100 companies and took months of planning, says White House”

“US government to respond to SolarWinds hackers in weeks: senior official”

“Officials urge Biden to appoint cyber leaders after SolarWinds, Microsoft hacks”

Introduction

Now more than ever, society depends on software. Whether it is the cloud computing behind an email service, a new fifth-generation (5G) telecommunications deployment, or the system used to monitor a remote oil rig, software has become an essential and pervasive facet of modern society. As one commentator put it, “software is eating the world.”2 Marc Andreessen, “Why Software Is Eating the World,” Wall Street Journal, August 20, 2011, https://www.wsj.com/articles/SB10001424053111903480904576512250915629460. Unlike physical systems, software is always a work in progress. It relies on continual revisions from patches and updates to address security flaws and vulnerabilities, and to make functional improvements. This ongoing maintenance leaves software supply chains long, messy, and in continuous flux, resulting in significant and underappreciated aggregated risk for organizations across the world. Despite warnings from key members of the security community and increased attention to supply-chain security more generally, software has largely taken a backseat to hardware—especially 5G—in policy debates over supply-chain security.3 “Remediation and Hardening Strategies for Microsoft 365 to Defend Against UNC2452, Version 1,” Mandiant, January 19, 2021, https://www.fireeye.com/content/dam/collateral/en/wp-m-unc2452.pdf.

Cyberspace is a domain of persistent low-grade engagement below the threshold of war. Software supply chains have become a key vector for adversaries—especially those contesting for valuable intelligence. The logic of the intelligence contest argues that cyber operations are principally focused on the acquisition of information from or denial of the same to an adversary. States can use this information to identify and seize leverage over an opponent. The emailed deliberations of diplomatic activity within a coalition, a government agency’s coordination with industry during a standards body’s meeting, contracts for an upcoming naval flotilla’s port of call—all of these are nuggets of useful information that an adversary can use to shape its behavior, moving toward gaps in attention and recognizing points of vulnerability subject to influence. All of this information contributes to adversaries’ search for strategic leverage over one another and helps create opportunities to exert that leverage toward national security objectives.

In the fall of 2019, the Atlantic Council’s Cyber Statecraft Initiative launched the Breaking Trust project to catalog software supply-chain intrusions over the past decade and identify major trends in their execution. Released in July 2020, the first report from this project, and its accompanying dataset, found that operations exploiting the software supply chain have become more frequent and more impactful over the last ten years as their targets have become even more diverse.4 Ibid. This report updates the dataset to include one hundred and thirty-eight incidents and, now, one of the most consequential cybersecurity crises of a young decade: Sunburst.

Beginning sometime in 2019 and carrying throughout 2020, an adversary group infiltrated more than one hundred organizations ranging from the US Departments of Homeland Security and the Treasury to Intel and Microsoft, along with seven other government agencies in the United States and nearly one hundred private companies. The adversary targeted email accounts and other productivity tools. In nearly every case, the adversary moved across victim networks by abusing several widely used Microsoft identity and access management (IAM) products, and leveraging this abuse to gain access to customer Office 365 environments in the cloud.5 Ibid. As many as 70 percent of these victims were initially compromised by a software supply-chain attack on Texas-based vendor SolarWinds, from which the incident originally received its moniker.

This report uses the label “Sunburst” for this ongoing campaign. While public reporting initially focused on SolarWinds, and the compromise of this vendor’s Orion software was significant, it was just one of multiple vectors used to gain access to targeted organizations and compromise both on-premises and cloud services.6 “Alert (AA20-352A),” Cybersecurity and Infrastructure Security Agency, December 17, 2020, https://us-cert.cisa.gov/ncas/alerts/aa20-352a. As a supply-chain compromise, Sunburst is not unique; it shares common traits with, and reflects lessons unlearned from, at least seven other major software supply-chain attacks from the last decade. According to an estimate by the Cybersecurity and Infrastructure Security Agency (CISA), as many as 30 percent of the Sunburst victims were compromised without any involvement by SolarWinds software, with acting Director of CISA Brandon Wales making clear, “It is absolutely correct that this campaign should not be thought of as the SolarWinds campaign.”7 Robert McMillan, et al., “Suspected Russian Hack Extends Far Beyond SolarWinds Software, Investigators Say,” Wall Street Journal, January 29, 2021, https://www.wsj.com/articles/suspected-russian-hack-extends-far-beyond-solarwinds-software-investigators-say-11611921601. The Sunburst malware was one of several malicious tools that the adversary used to move across targeted networks, including hopping into dozens of Office 365 environments. It is this lateral movement into the cloud, and the effective abuse of Microsoft’s identity services, that distinguishes an otherwise large software supply-chain attack from a widespread intelligence coup.

Sunburst was neither a military strike nor an indiscriminate act of harm; it was a slow and considered development of access to sensitive government, industry, and nonprofit targets, much more like a human-intelligence operation than a kinetic strike. The campaign is visible evidence of the persistent intelligence contest that is ongoing in, and through, cyberspace. How the adversary will use information obtained during the Sunburst campaign to exert leverage over the United States, facilitate sabotage, or carry out some later outright attack, is yet unrealized.8 This report and related content use the terms “attack” and “target” both for rhetorical clarity and to match the language of the technical and operational reporting on which much of this analysis is based. SolarWinds and the Sunburst incident do not rise to the level of an armed attack, nor the threshold of war, but the authors will leave it to others to parse the ongoing (and perhaps never-ending) debate over terminology and classification of this contest.

This report develops the first significant, contextualized public analysis of Sunburst, and extracts lessons for both policymakers and industry. The goal of this analysis is to understand key phases of the Sunburst campaign in the framework of an intelligence contest, examine critical similarities with seven other recent campaigns, identify both technical and policy shortfalls that precipitated the crisis, and develop recommendations to renew US cybersecurity strategy.

Section I explores the Sunburst campaign and works to understand the interplay between the software supply-chain compromise and the abuse of cloud and on-premises identity services.

Section II focuses on the familiarity of the Sunburst campaign in light of previous software supply-chain attacks by providing in-depth analysis of seven likely state-backed examples from 2012 to 2020. The section maps all incidents onto a notional outline of the software supply chain and development lifecycle used in the Atlantic Council’s previous reporting on supply chain risk management and draws out commonalities among them. The similarities—in execution, intent, and outcome—guide two critical discussions in the subsequent sections: the intelligence context in which software supply chain attacks occur and the failure of current policies and industry priorities to better prevent, identify, mitigate, and respond to them. Supply chain attacks like Sunburst have happened before, and understanding why and how the United States has adapted (or failed to adapt) previously will inform a more effective cybersecurity strategy going forward.

Section III analyzes some of the causal factors behind Sunburst’s scope and success. It delves into the shortcomings of US cybersecurity strategy and posture along three key lanes: deficiencies in risk management, reliance on hard-to-defend linchpin technologies, and limited speed alongside poor adaptability. Sunburst uniquely brings attention to the many concurrent weaknesses throughout the US cybersecurity risk-management architecture. The section pays particular attention to the cloud-enabled and cloud-adjacent features of the campaign, which differentiate Sunburst from previous widespread software supply-chain compromises. Even within existing policy regimes, better mitigation and more rapid responses to compromise, in line with stated program goals, could have curbed Sunburst’s impact. Identifying these shortcomings is key to an informed and holistic policy shift. Doing so both lays the groundwork for understanding and repairing the system and creates an urgent call to action for reform.

Section IV looks at the systemic misalignment between US strategy and cybersecurity outcomes. It includes acknowledgement of underappreciated but successful (or potentially so) policy programs before developing three clusters of recommendations to renew US cybersecurity strategy. The recommendations aim to drive ruthless prioritization of risk across federal cybersecurity, to improve the defensibility of linchpin cloud services, and to develop a more adaptive federal cyber risk-management system. Together, these twelve recommendations argue for concentrating effort at points of maximum value to defenders, while improving interconnectivity between offense and defense, and maximizing the speed and adaptability of policymaking in partnership with key industry stakeholders. The result can be a more responsive, better aligned US cybersecurity strategy.

The Sunburst campaign was not a triumph of some mythical adversary, nor simply the product of IT failures. It was the result of a strategic shortfall in how the United States organizes itself to fight for leverage in cyberspace. Overcoming this shortfall requires examining the technical and policy pathways of the Sunburst campaign as much as its strategic intent. Policymakers’ response to the campaign should take heed of the continuity between Sunburst and past events to recognize that this is surely not the last such crisis with which the United States will have to contend.

I—Sunburst explained

The incident response to the Sunburst crisis is ongoing, and more details about the campaign’s scope will become known in time. SolarWinds was one of several companies compromised as the adversary’s means of gaining access to more than one hundred actively exploited targets. The adversary’s ultimate goal in every publicly discussed case was organizational email accounts, along with their associated calendars and files.9 Damien Cash, et al., “Dark Halo Leverages SolarWinds Compromise to Breach Organizations,” Volexity, December 14, 2020, https://www.volexity.com/blog/2020/12/14/dark-halo-leverages-solarwinds-compromise-to-breach-organizations; White House, “02/17/21: Press Briefing by Press Secretary and Deputy National Security Advisor,” YouTube, February 17, 2021, https://www.youtube.com/watch?v=Ta_vatZ24Cs. To facilitate this access, the intruders persistently and effectively abused features of several Microsoft IAM products to move throughout organizations, pilfering inboxes in ways that proved difficult to track and trace. Indeed, the varied flavors of Microsoft’s IAM services are as central to the Sunburst campaign as SolarWinds, if not more so. For instance, Malwarebytes, a security firm, disclosed a compromise through which Sunburst actors targeted the company’s systems by “abusing applications with privileged access to Microsoft Office 365 and Azure environments” to access emails, despite the firm not using SolarWinds Orion.10 Dan Goodin, “Security Firm Malwarebytes Was Infected by Same Hackers Who Hit SolarWinds,” Ars Technica, January 20, 2021, https://arstechnica.com/information-technology/2021/01/security-firm-malwarebytes-was-infected-by-same-hackers-who-hit-solarwinds/; Marcin Kleczynski, “Malwarebytes Targeted by Nation State Actor Implicated in SolarWinds Breach. Evidence Suggests Abuse of Privileged Access to Microsoft Office 365 and Azure Environments,” Malwarebytes Labs, January 28, 2021, https://blog.malwarebytes.com/malwarebytes-news/2021/01/malwarebytes-targeted-by-nation-state-actor-implicated-in-solarwinds-breach-evidence-suggests-abuse-of-privileged-access-to-microsoft-office-365-and-azure-environments. Other examples include a failed attempt by the intruders to breach the security firm CrowdStrike through a Microsoft reseller’s Azure account to access the company’s email servers, and reports from Mimecast, an email security management provider, that explained how in January a malicious actor had compromised one of the certificates used to “guard connections between its products and Microsoft’s cloud server.”11 Shannon Vavra, “Microsoft Alerts CrowdStrike of Hackers’ Attempted Break-in,” CyberScoop, December 24, 2020, https://www.cyberscoop.com/crowdstrike-solarwinds-targeted-microsoft; “Email Security Firm Mimecast Says Hackers Hijacked Its Products to Spy on Customers,” Reuters, January 12, 2021, https://www.reuters.com/article/us-global-cyber-mimecast/email-security-firm-mimecast-says-hackers-hijacked-its-products-to-spy-on-customers-idUSKBN29H22K. Although Mimecast stated that up to 3,600 of its customers could have been affected, it believes that only a “low single-digit number” of users were specifically targeted, demonstrating a pattern of deliberation in the Sunburst campaign.12 Tara Seals, “Mimecast Confirms SolarWinds Hack as List of Security Vendor Victims Snowball,” Threatpost, January 28, 2021, https://threatpost.com/mimecast-solarwinds-hack-security-vendor-victims/163431.

SolarWinds was a significant vector for this wider effort to compromise email and cloud environments.13 Sudhakar Ramakrishna, “New Findings From Our Investigation of SUNBURST,” Orange Matter, January 11, 2021, https://orangematter.solarwinds.com/2021/01/11/new-findings-from-our-investigation-of-sunburst. Sometime in 2019, malicious actors compromised SolarWinds, a software developer based in Austin, Texas, and gained access to software-development and build infrastructure for the company’s Orion product.14 “A Timeline of the Solarwinds Hack: What We’ve Learned,” Kiuwan, January 19, 2021, https://www.kiuwan.com/solarwinds-hack-timeline; “SolarStorm Timeline: Details of the Software Supply-Chain Attack,” Unit42, December 23, 2020, https://unit42.paloaltonetworks.com/solarstorm-supply-chain-attack-timeline. Once inside, the adversary inserted the Sunburst backdoor into a version of Orion via a small, but malicious, change to a dynamic-link library (DLL), which triggered the larger backdoor.15 “Analyzing Solorigate, the Compromised DLL File That Started a Sophisticated Cyberattack, and How Microsoft Defender Helps Protect Customers,” Microsoft Security, December 18, 2020, https://www.microsoft.com/security/blog/2020/12/18/analyzing-solorigate-the-compromised-dll-file-that-started-a-sophisticated-cyberattack-and-how-microsoft-defender-helps-protect. SolarWinds eventually, and unknowingly, digitally signed and distributed this compromised version of Orion as an update to customers.16 Snir Ben Shimol, “SolarWinds SUNBURST Backdoor: Inside the Stealthy APT Campaign,” Inside Out Security, March 9, 2021, https://www.varonis.com/blog/solarwinds-sunburst-backdoor-inside-the-stealthy-apt-campaign.

This compromised update, with its legitimate certificates, allowed Sunburst to slip undetected past network security tools and into more than eighteen thousand organizations from February to June 2020.17 Chris Hickman, “How X.509 Certificates Were Involved in SolarWinds Attack,” Security Boulevard, December 18, 2020, https://securityboulevard.com/2020/12/how-x-509-certificates-were-involved-in-solarwinds-attack-keyfactor; Catalin Cimpanu, “SEC Filings: SolarWinds Says 18,000 Customers Were Impacted by Recent Hack,” ZDNet, December 14, 2020, https://www.zdnet.com/article/sec-filings-solarwinds-says-18000-customers-are-impacted-by-recent-hack. This initial infection included more than four hundred and twenty-five members of the US Fortune 500, systemically important technology vendors like Microsoft and Intel,18 Laura Hautala, “Russia Has Allegedly Hit the US with an Unprecedented Malware Attack: Here’s What You Need to Know,” CNET, February 28, 2021, https://www.cnet.com/news/solarwinds-not-the-only-company-used-to-hack-targets-tech-execs-say-at-hearing; Mitchell Clark, “Big Tech Companies Including Intel, Nvidia, and Cisco Were All Infected During the SolarWinds Hack,” Verge, December 21, 2020, https://www.theverge.com/2020/12/21/22194183/intel-nvidia-cisco-government-infected-solarwinds-hack; Kim Zetter, “Someone Asked Me to Provide a Simple Description of What This SolarWinds Hack Is All About So for Anyone Who Is Confused by the Technical Details, Here’s a Thread with a Simplified Explanation of What Happened and What It Means,” Twitter, December 14, 2020, 2:43 a.m., https://twitter.com/KimZetter/status/1338389130951061504. and nearly a dozen US federal government agencies, including the Departments of the Treasury, Homeland Security, State, and Energy, as well as state and local agencies.19 Sara Wilson, “SolarWinds Recap: All of the Federal Agencies Caught Up in the Orion Breach,” FedScoop, December 22, 2020, https://www.fedscoop.com/solarwinds-recap-federal-agencies-caught-orion-breach; Raphael Satter, “U.S. Cyber Agency Says SolarWinds Hackers Are ‘Impacting’ State, Local Governments,” Reuters, December 24, 2020, https://www.reuters.com/article/us-global-cyber-usa-idUSKBN28Y09L; Jack Stubbs, et al., “SolarWinds Hackers Broke into U.S. Cable Firm and Arizona County, Web Records Show,” Reuters, December 18, 2020, https://www.reuters.com/article/us-usa-cyber-idUSKBN28S2B9. Orion’s popularity, and the software’s wide use among system administrators with permissioned access to most or all of victim networks, made it a valuable target.

The adversary further compromised only a small number of these eighteen thousand targets, using Sunburst to call second-stage malware known as droppers (Teardrop and Raindrop) to download yet more malware that the intruders would use to move through target networks.20 “SUNSPOT: An Implant in the Build Process,” CrowdStrike Blog, January 11, 2021, https://www.crowdstrike.com/blog/sunspot-malware-technical-analysis/. Decoupling this second- and later-stage malware from Sunburst helped hide the SolarWinds compromise for as long as possible.21 The details of the attack are intricate but, given the many payloads and intrusion stages, as well as the variety of malware strains tailored to specific targets, they are worth reviewing briefly. “Highly Evasive Attacker Leverages SolarWinds Supply Chain to Compromise Multiple Global Victims With SUNBURST Backdoor,” FireEye, December 13, 2020, https://www.fireeye.com/blog/threat-research/2020/12/evasive-attacker-leverages-solarwinds-supply-chain-compromises-with-sunburst-backdoor.html; “SUNSPOT: An Implant in the Build Process,” CrowdStrike Intelligence Team, January 11, 2021, https://www.crowdstrike.com/blog/sunspot-malware-technical-analysis/. The intruders first infiltrated the network of SolarWinds and implanted the SUNSPOT malware, which, in turn, inserted the SUNBURST malware into Orion during its development. Even at this early phase, the intruders took great care to remain stealthy, running SUNSPOT infrequently and encrypting its log files. “Deep Dive into the Solorigate Second-Stage Activation: From SUNBURST to TEARDROP and Raindrop,” Microsoft, January 20, 2021, https://www.microsoft.com/security/blog/2021/01/20/deep-dive-into-the-solorigate-second-stage-activation-from-sunburst-to-teardrop-and-raindrop/. Once Sunburst was in target systems via an Orion update, it downloaded another piece of malware called Teardrop onto selected systems. Teardrop is a “dropper” designed to stealthily inject more malware onto a target system. In this case, Teardrop inserted Beacon, the payload of the commercial penetration-testing suite Cobalt Strike. “Raindrop: New Malware Discovered in SolarWinds Investigation,” Symantec Blogs, January 18, 2021, https://symantec-enterprise-blogs.security.com/blogs/threat-intelligence/solarwinds-raindrop-malware. After Teardrop, the malicious actor installed Raindrop, a similar dropper, onto targeted computers that were not infected by Sunburst. Raindrop also inserted Cobalt Strike Beacon onto targets, though the exact configurations and setups of the two droppers vary. “Malware Analysis Report (AR21-039B),” Cybersecurity and Infrastructure Security Agency, February 8, 2021, https://us-cert.cisa.gov/ncas/analysis-reports/ar21-039b; “Beacon Covert C2 Payload,” Cobalt Strike, accessed March 1, 2021, https://www.cobaltstrike.com/help-beacon. By using Teardrop and Raindrop, the intruders protected the Sunburst backdoor from discovery.

Although more than 80 percent of the affected SolarWinds customers operate in the United States, the breach also affected clients in Canada, Mexico, Belgium, Spain, the United Kingdom, Israel, and the United Arab Emirates.22 Catalin Cimpanu, “Microsoft Says It Identified 40 Victims of the SolarWinds Hack,” ZDNet, December 18, 2020, https://www.zdnet.com/article/microsoft-says-it-identified-40-victims-of-the-solarwinds-hack. The effort needed to simultaneously and directly compromise even one tenth as many organizations would be enormous, underlining the incredible cost-effectiveness of software supply-chain intrusions.

In the case of Sunburst, a large-scale software supply-chain attack became a full-blown crisis when the adversary successfully abused several Microsoft IAM products. This abuse, which included techniques known to the security community for years, helped the adversary move silently across victim networks. These techniques also enabled the adversary, in a damaging turn of events, to hop from on-premises networks into Office 365 environments.

In nearly every case observed by FireEye, the Sunburst adversary relied on various techniques to abuse Microsoft’s IAM products to move laterally within organizations, enabling the most consequential phase of the ongoing incident.23 “Microsoft Security Response Center,” Microsoft Security Response Center, December 31, 2020, https://msrc-blog.microsoft.com/2020/12/31/microsoft-internal-solorigate-investigation-update. Sunburst operators targeted Azure Active Directory, the Microsoft service used to authenticate users of both Office 365 and Azure, to modify access controls and abuse highly privileged accounts to access email accounts across organizations.24 Shain Wray, “SolarWinds Post-Compromise Hunting with Azure Sentinel,” Microsoft, December 16, 2020, https://techcommunity.microsoft.com/t5/azure-sentinel/solarwinds-post-compromise-hunting-with-azure-sentinel/ba-p/1995095; “Remediation and Hardening Strategies for Microsoft 365 to Defend Against UNC2452, Version 1”; Michael Sentonas, “CrowdStrike Launches Free Tool to Identify & Mitigate Risks in Azure Active Directory,” CrowdStrike, January 12, 2021, https://www.crowdstrike.com/blog/crowdstrike-launches-free-tool-to-identify-and-help-mitigate-risks-in-azure-active-directory. The adversary also leveraged a previously disclosed technique to steal signing certificates and grant itself access to sensitive resources, including dozens of user email inboxes in Office 365, while bypassing multi-factor authentication safeguards.25 Mathew J. Schwartz and Ron Ross, “US Treasury Suffered ‘Significant’ SolarWinds Breach,” Bank Information Security, https://www.bankinfosecurity.com/us-treasury-suffers-significant-solarwinds-breach-a-15641; Alex Weinert, “Understanding Solorigate’s Identity IOCs—for Identity Vendors and Their Customers,” Microsoft, February 1, 2021, https://techcommunity.microsoft.com/t5/azure-active-directory-identity/understanding-quot-solorigate-quot-s-identity-iocs-for-identity/ba-p/2007610.

The US government and multiple security firms have asserted state-sponsored Russian culpability for the campaign, although there is disagreement about which specific group is responsible. Incident response has taken place in stages, through independent investigations that used both private and public data and did not treat attribution as the first priority. On December 14, 2020, The Washington Post, citing anonymous sources, blamed the campaign on APT29 (Cozy Bear), which Dutch intelligence agencies have linked to Russia’s Foreign Intelligence Service (SVR).26 Ellen Nakashima, et al., “Russian Government Hackers Are behind a Broad Espionage Campaign That Has Compromised U.S. Agencies, including Treasury and Commerce,” Washington Post, December 14, 2020, https://www.washingtonpost.com/national-security/russian-government-spies-are-behind-a-broad-hacking-campaign-that-has-breached-us-agencies-and-a-top-cyber-firm/2020/12/13/d5a53b88-3d7d-11eb-9453-fc36ba051781_story.html. The Russian attribution seemed to be informally confirmed the next day, when a member of the US Congress publicly identified Russia after receiving a classified briefing.27 Richard Blumenthal, “Stunning. Today’s Classified Briefing on Russia’s Cyberattack Left Me Deeply Alarmed, in Fact Downright Scared. Americans Deserve to Know What’s Going On. Declassify What’s Known & Unknown,” Twitter, December 15, 2020, https://twitter.com/SenBlumenthal/status/1338972186535727105?s=20. Indeed, this attribution to Russia was strengthened when a joint statement by the Federal Bureau of Investigation (FBI), CISA, the Office of the Director of National Intelligence (ODNI), and the National Security Agency (NSA) identified the operation as “likely Russian in origin.”28 “ODNI Home,” ODNI Office of Strategic Communications, January 5, 2021, https://www.dni.gov/index.php/newsroom/press-releases/press-releases-2021/item/2176-joint-statement-by-the-federal-bureau-of-investigation-fbi-the-cybersecurity-and-infrastructure-security-agency-cisa-the-office-of-the-director-of-national-intelligence-odni-and-the-national-security-agency-nsa; Andrew Olson, et al., “Explainer—Russia’s Potent Cyber and Information Warfare Capabilities,” Reuters, December 19, 2020, https://www.reuters.com/article/global-cyber-russia/explainer-russias-potent-cyber-and-information-warfare-capabilities-idUSKBN28T0ML.

Attribution, as ever, remains more than a technical question.29 Thomas Rid, et al., “Attributing Cyber Attacks,” Journal of Strategic Studies 38: 1–2, 4–37, https://www.tandfonline.com/doi/abs/10.1080/01402390.2014.977382. From the private sector, both FireEye and the security firm Volexity, in contrast to the reporting by The Washington Post, attributed the operation to an unknown or uncategorized group, called UNC2452 by FireEye on December 13, 2020,30 Kevin Mandia, “Global Intrusion Campaign Leverages Software Supply Chain Compromise,” FireEye, December 13, 2020, https://www.fireeye.com/blog/products-and-services/2020/12/global-intrusion-campaign-leverages-software-supply-chain-compromise.html. and Dark Halo by Volexity the following day.31 Cash, et al., “Dark Halo Leverages SolarWinds Compromise to Breach Organizations.” Both FireEye and Volexity have maintained this attribution. Separately, technical evidence published on January 11, 2021, by Kaspersky researchers highlighted similarities between the SolarWinds compromise and past operations by Turla (Venomous Bear),32 Georgy Raiu, et al., “Sunburst Backdoor—Code Overlaps with Kazuar,” Securelist, January 11, 2021, https://securelist.com/sunburst-backdoor-kazuar/99981. a threat actor that the Estonian Foreign Intelligence Service (EFIS) had previously tied to the Russian Federal Security Service (FSB).33 “The Domestic Political Situation in Russia,” Estonian Foreign Intelligence Service, 2018, https://www.valisluureamet.ee/pdf/raport-2018-ENG-web.pdf; Andy Greenberg, “SolarWinds Hackers Shared Tricks with Known Russian Cyberspies,” Wired, January 11, 2021, https://www.wired.com/story/solarwinds-russia-hackers-turla-malware. This slow and winding attribution process is likely to continue in the coming months.

While the adversary’s successful, repeated abuse of Microsoft’s identity products helped Sunburst become something more than a notable supply-chain compromise, there are important lessons in the SolarWinds vector. Importantly, many of Sunburst’s characteristics—objectives, methods, and target selection—echo previous campaigns, and should provide lessons for industry practitioners and policymakers alike.

II—The historical roots of Sunburst

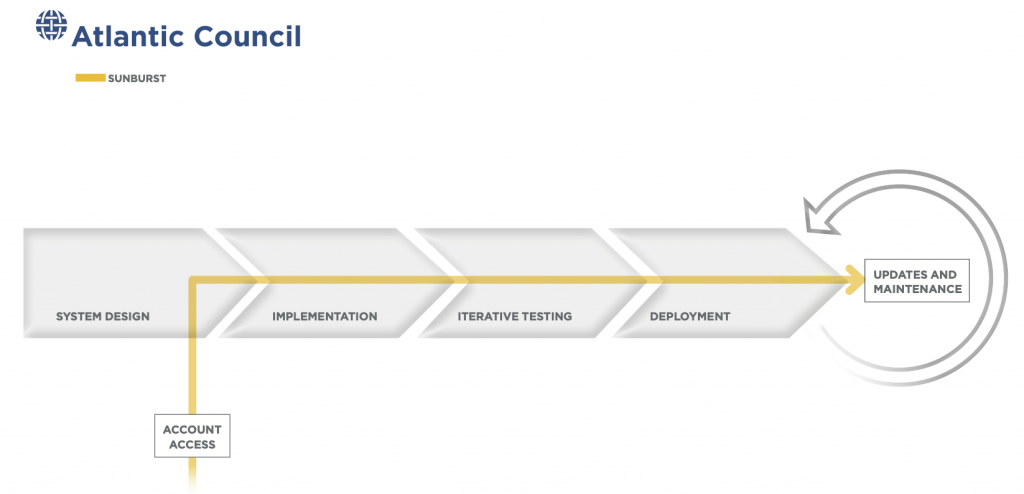

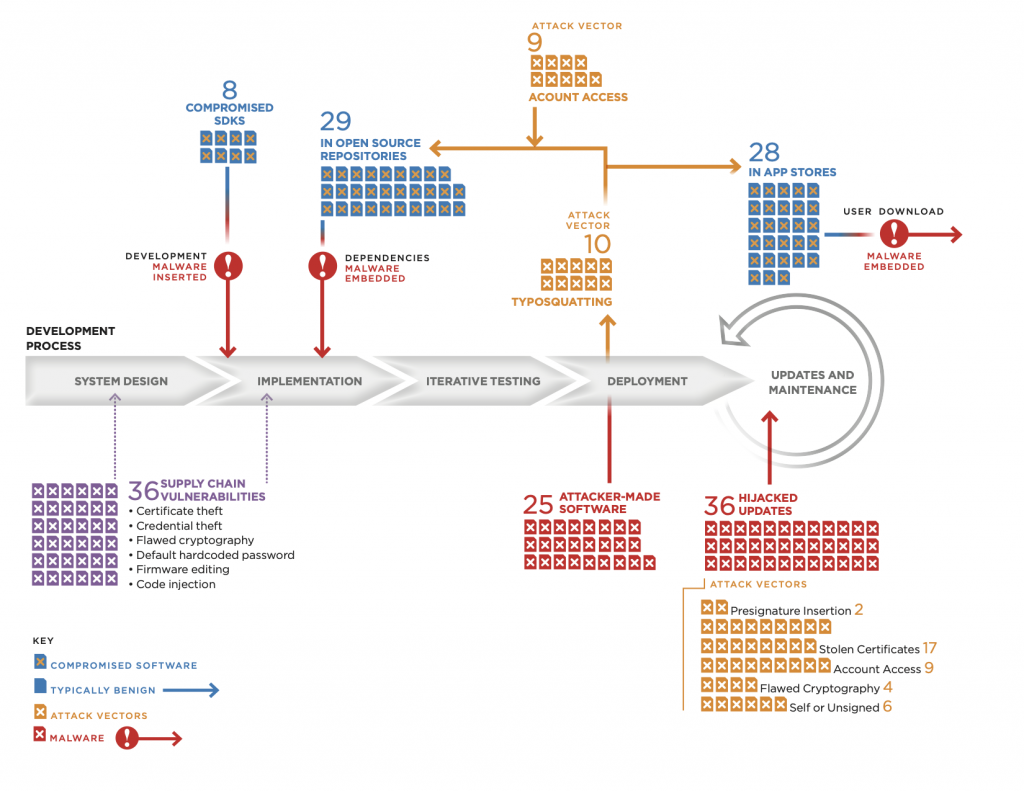

Sunburst offers rare public insight into the intense contest for information taking place in and through cyberspace, as well as the insecurity of critical technology supply chains. Sunburst may be one of the most consequential espionage campaigns of a generation. But, as a software supply-chain intrusion, it has ample precedent. A significant vector in the Sunburst incident was the adversary’s compromise of SolarWinds’ Orion software-development infrastructure, allowing intruders to place malware in thousands of potential targets. As Figure 2 illustrates, since 2010, there have been thirty-six other cases of intruders likewise successfully targeting software updates out of one hundred and thirty-eight total recorded supply-chain attacks and vulnerability disclosures. The authors laid out each of these incidents along a notional model of software development to show their distribution across the software supply chain.34 There is no good way to concisely represent all of software development, and this graphic is not intended to capture the many intricacies of the process. This representation of a waterfall-style model matches with much of the software captured in the study. For more on this dataset, see: Herr et al., Breaking Trust.

In the Sunburst case, intruders were able to access SolarWinds’ build infrastructure, rather than just tacking malware onto a pending update. Of these thirty-six total cases, approximately fifteen included similar access to build or update infrastructure, and, of those, nearly half have state attribution. Rather than adding their malware alongside the Orion software just before it was sent to customers, like attaching to a motorcycle a sidecar with a bomb inside, the intruders went further and compromised the company’s build infrastructure. The result was like secreting a bomb into the cylinders of the motorcycle’s engine before it was sold—far more deeply embedded in the resulting device, and thus harder to detect or remove.

Many of these states are locked in a persistent contest for information in which they “compete to steal information from one another, protect what they have acquired, and corrupt the other side’s data and communications.”35 Robert Chesney et al., “Policy Roundtable: Cyber Conflict as an Intelligence Contest,” Texas National Security Review, December 18, 2020, https://tnsr.org/roundtable/policy-roundtable-cyber-conflict-as-an-intelligence-contest. Software supply-chain intrusions are well suited to this intelligence contest. They deliver stealthy espionage capabilities, including data collection, data alteration, and the opportunity to position for follow-on activities. This kind of access, and the information it offers, can produce the type of operational leverage states seek to achieve in cyberspace in support of more strategic ends beyond the one domain. Though not leveraged directly, information’s aggregation and distillation allow parties to recognize opportunities for action, and to anticipate or respond to efforts against their valuable targets. This leverage could come, for example, in the form of valuable intellectual property that can invigorate a key industry or as information on planned sanction targets and timelines to enable the resilience of the affected areas. Recognizing the logic of this intelligence contest, and the real value of this leverage, should help inform a more effective defense.

This section reviews seven campaigns that share common traits with Sunburst: CCleaner, Kingslayer, Flame, Able Desktop, VeraPort, SignSight, and Juniper. The report selects these seven cases because they are similarly meaningful examples of state-backed software supply-chain intrusions and because they span a range of dates, from 2012 to incidents as recent as 2020.

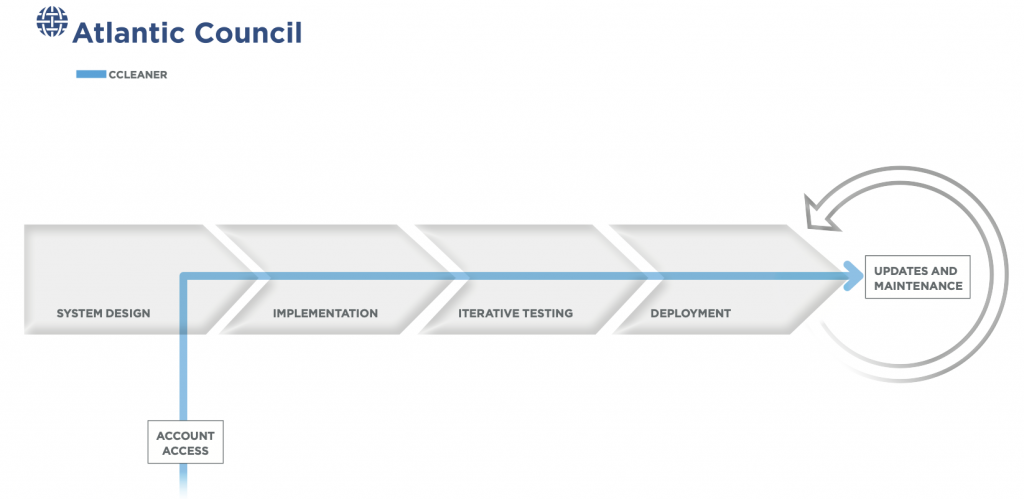

CCleaner

Over the course of 2017, a version of the ubiquitous administrative tool CCleaner, now owned by Avast, was compromised and distributed to several hundred thousand customers, and then used to infiltrate a small set of technology and telecommunications companies from within the initial target group.36 Lucian Constantin, “40 Enterprise Computers Infected with Second-Stage CCleaner Malware,” Security Boulevard, September 26, 2017, https://securityboulevard.com/2017/09/40-enterprise-computers-infected-second-stage-ccleaner-malware/. Intruders initially gained access to the software while it was still owned and operated by Piriform, through a developer workstation reached using credentials stolen from an administrative collaborative tool called TeamViewer.37 Alyssa Foote, “Inside the Unnerving Supply Chain Attack That Corrupted CCleaner,” Wired, April 7, 2018, https://www.wired.com/story/inside-the-unnerving-supply-chain-attack-that-corrupted-ccleaner.

Once inside, the intruders began to move laterally within Piriform’s network during off hours to avoid detection and, in less than a month, installed a modified version of the ShadowPad malware to gain wider access to Piriform’s build and deployment systems. Beginning in August 2017, after Avast’s acquisition of the software, intruders began distributing a compromised version of CCleaner to approximately 2.27 million users through the company’s update system. As in the case of Sunburst, users had no reason to suspect the authenticity of these software updates. They came cryptographically signed directly from the software vendor. The technology and telecommunications companies that the intruders went on to infiltrate were based in eight different countries, mostly in East and Southeast Asia. This tactic of casting a wide net and then narrowly refining an active target list is common to this type of intrusion. However, with Sunburst, the malware distribution reached more diverse targets, including government agencies and nonprofits. As in the Sunburst case, the CCleaner compromise focused on gathering information, with no evidence that there was any intent to disrupt or deny operation of the affected companies.
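The point that a vendor signature offered users no warning can be made concrete with a small sketch. It is a toy model, not a description of how Piriform, Avast, or SolarWinds actually sign releases: the key is an insecure textbook-RSA key built from two small Mersenne primes, and `build`, `vendor_sign`, and `customer_verify` are hypothetical names introduced only for illustration. Under those assumptions, it shows that a signature applied after the build confirms who shipped the artifact, not that the artifact is clean.

```python
import hashlib

# Toy textbook-RSA key from two Mersenne primes. Insecure; illustration only.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest_int(artifact: bytes) -> int:
    """Hash the artifact and reduce it to an integer below the modulus."""
    return int.from_bytes(hashlib.sha256(artifact).digest(), "big") % N


def vendor_sign(artifact: bytes) -> int:
    """Vendor signs whatever artifact the build system hands over."""
    return pow(digest_int(artifact), D, N)


def customer_verify(artifact: bytes, signature: int) -> bool:
    """Customer checks the signature against the vendor's public key (N, E)."""
    return pow(signature, E, N) == digest_int(artifact)


def build(source: bytes, build_server_compromised: bool) -> bytes:
    """Hypothetical build step; a compromised server adds an implant."""
    implant = b"|implant" if build_server_compromised else b""
    return b"compiled:" + source + implant


source = b"update-1.0"
clean = build(source, build_server_compromised=False)
trojaned = build(source, build_server_compromised=True)

# Implant inserted before signing: the signature still verifies.
print(customer_verify(trojaned, vendor_sign(trojaned)))
# Malware bolted on after signing: verification fails.
print(customer_verify(clean + b"|sidecar", vendor_sign(clean)))
```

In this model the signature check catches only tampering that happens after signing, which is why access to build systems is so much more valuable to an intruder than access to a download server.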

Kaspersky Lab and others have linked CCleaner to the Chinese hacking group APT17 under the umbrella of the Axiom group, which has a history of using software supply-chain incursions as its core tool to uncover and exploit espionage targets. Based on both technical and contextual evidence, this allegation details tactics that strongly resembled those used in intrusions previously attributed to the Axiom group. Focusing on attribution through intent, the specific concentration on these companies strongly suggests that the intruder’s intent was to extract intellectual property. China has previously been identified as a prominent practitioner of economic espionage, and others point to the degree of resource intensity of the CCleaner compromise, the apparent technical knowledge of the intruders, and their level of preparation as strongly indicative of a state-affiliated group.38 Michael Mimoso, “Inside the CCleaner Backdoor Attack,” Threat Post, October 5, 2017, https://threatpost.com/inside-the-ccleaner-backdoor-attack/128283/; Lucian Constantin, “Researchers Link CCleaner Hack to Cyberespionage Group,” Vice, September 21, 2017, https://www.vice.com/en/article/7xkxba/researchers-link-ccleaner-hack-to-cyberespionage-group; Hearing before the Congressional Executive Commission on China, 113th Congress, 1st session, June 25, 2013, https://www.govinfo.gov/content/pkg/CHRG-113hhrg81855/html/CHRG-113hhrg81855.htm; Jay Rosenberg, “Evidence Aurora Operation Still Active Part 2: More Ties Uncovered Between CCleaner Hack & Chinese Hackers,” Intezer, October 2, 2017, https://www.intezer.com/blog/research/evidence-aurora-operation-still-active-part-2-more-ties-uncovered-between-ccleaner-hack-chinese-hackers.

Kingslayer

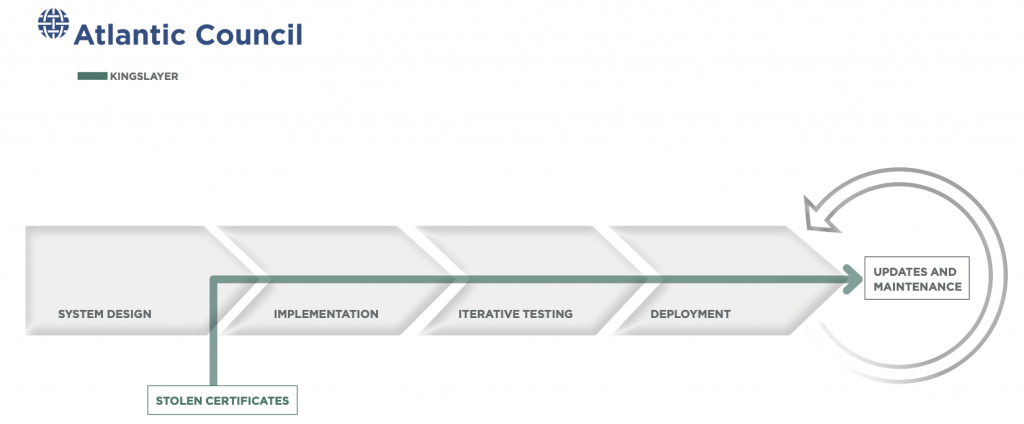

First disclosed by RSA Security researchers in February 2017, the Kingslayer operation targeted an administrative software package called EvLog—developed by the Canadian software company Altair Technologies—in a concerted software supply-chain intrusion, affecting a significant number of enterprise organizations across the globe.

EvLog served as a valuable target because its users were largely system and domain administrators, allowing intruders a high degree of access to targeted networks once they compromised the tool. In a statement later removed from its website, Altair claimed that its customers included four major telecommunications providers, ten different Western military organizations, twenty-four Fortune 500 companies, five major defense contractors, dozens of Western government organizations, and myriad banks and universities. As with Sunburst, the intruders utilized EvLog’s extensive client list and administrative privileges to achieve persistent access to the systems of a wide variety of high-tier clients in the private and public sectors.39 Amy Blackshaw, “Kingslayer—A Supply Chain Attack,” RSA, February 13, 2017, https://www.rsa.com/en-us/blog/2017-02/kingslayer-a-supply-chain-attack. However, almost five years after the Kingslayer campaign began, it is still not known how many of these customers may have been—and possibly remain—compromised by the operation.

The primary vector in this incident was Altair’s EvLog 3.0, a tool that allowed Windows system administrators to accurately interpret and troubleshoot items in event logs. Intruders initially compromised eventid.net, the website used to host EvLog and provide downloads and updates, and replaced legitimate EvLog 3.0 files with a malicious version of the software. It is not clear how these intruders first gained access to the Altair update system, but they managed to secure Altair’s code-signing certificates and authenticate their malicious version. Once this install or update was complete, the malicious package would then attempt to download a secondary payload. The targets of this malware, as in the Sunburst incident, trusted the compromised version of the EvLog software without verification, as it appeared “certified and sent” by Altair Technologies.40 Howard Solomon, “Canadian Cyber Firm Confirms It Was the Victim Described in RSA Investigation,” IT World Canada, February 23, 2017, https://www.itworldcanada.com/article/canadian-cyber-firm-confirms-it-was-the-victim-described-in-rsa-investigation/390903.

Like Sunburst, Kingslayer appears to have been an espionage operation, with no evidence that there was any intent to disrupt or deny operations of the affected companies, and the intruders took significant steps to remain covert. Because EvLog is almost exclusively used by system administrators, it also represents an “ideal beachhead and operational staging environment for systematic exploitation of a large enterprise.”41 “Whitepaper: Kingslayer—A Supply Chain Attack,” RSA, February 13, 2017, https://www.rsa.com/en-us/offers/kingslayer-a-supply-chain-attack. The malware gave intruders espionage-enabling capabilities such as uploading and downloading files, as well as the ability to execute programs on the affected network. The Kingslayer campaign was attributed with some confidence to the China-based threat group Codoso, also known as APT9 or Nightshade Panda. Researchers were able to identify overlapping domains and Internet Protocol (IP) addresses in the command-and-control infrastructure (the systems used to issue commands to malware and receive stolen data from a target network) shared between Kingslayer and past Codoso campaigns. This incident fits the observed trend of Chinese espionage operations, which tend to be large and covert incursions against both private-sector actors and US government entities, including targets whose value may only be realized in operations months or years later.42 Eduard Kovacs, “Serious Breach Linked to Chinese APTs Comes to Light,” SecurityWeek, February 22, 2017, https://www.securityweek.com/serious-breach-linked-chinese-apts-comes-light.

Flame

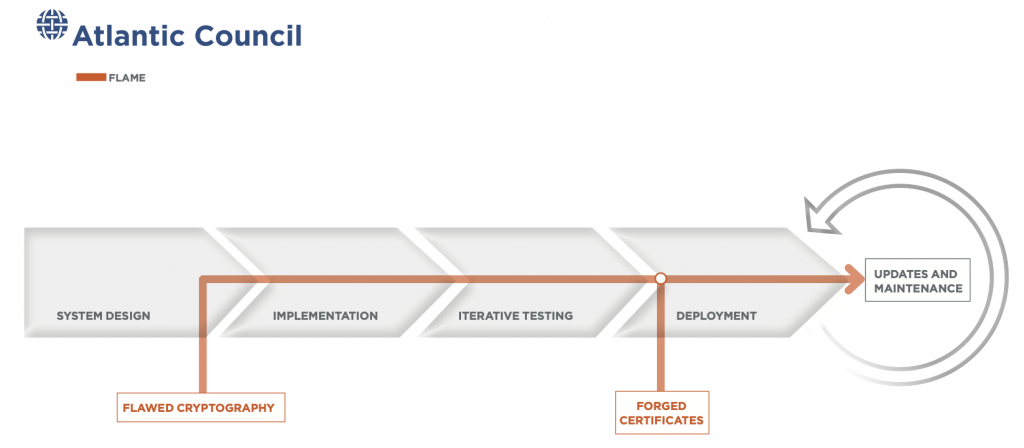

In 2012, a technically mature cyber-espionage operation was reportedly linked to the same consortium of agencies as the watershed Stuxnet malware discovered two years earlier.43 Kim Zetter, “Report: US and Israel Behind Flame Espionage Tool,” Wired, June 19, 2012, https://www.wired.com/2012/06/us-and-israel-behind-flame. The initial target of this software supply-chain operation was the widely used Microsoft Windows operating system, in which Flame masqueraded as a legitimate software update.

The operators of Flame used a novel variant of a cryptographic collision to take advantage of weaknesses inherent in the MD5 hashing algorithm within the system design—weaknesses that had been known for more than a decade.44 “CWI Cryptanalyst Discovers New Cryptographic Attack Variant in Flame Spy Malware,” CWI, July 6, 2012, https://www.cwi.nl/news/2012/cwi-cryptanalist-discovers-new-cryptographic-attack-variant-in-flame-spy-malware; Robert Lemos, “Flame Exploited Long-Known Flaw in MD5 Certificate Algorithm,” EWEEK, February 2, 2021, https://www.eweek.com/security/flame-exploited-long-known-flaw-in-md5-certificate-algorithm. The intruders abused the weak hashing algorithm and the code-signing privileges erroneously granted to Terminal Server Licensing Service to forge code-signing certificates that were linked to Microsoft’s root certificate authority.45 Alex Sotirov, “Analyzing the MD5 Collision in Flame,” Trail of Bits, http://www.kormanyablak.org/it_security/2012-07-17/Alex_Sotirov_Flame_MD5-collision.pdf. These certificates tricked target computers into accepting incoming malware payload deployments as legitimate Windows updates.46 Gregg Keizer, “Researchers Reveal How Flame Fakes Windows Update,” Computerworld, June 5, 2012, https://www.computerworld.com/article/2503916/researchers-reveal-how-flame-fakes-windows-update.html. To improve the likelihood of users accepting the falsified update, and to better disguise its activity, Flame was able to verify the update needs of individual systems. Unlike with Sunburst, intruders did not infiltrate Microsoft’s update process per se; rather, they successfully disguised their deployments as legitimate software from the vendor.47 Zetter, “Report: US and Israel Behind Flame Espionage Tool”; Kim Zetter, “Meet ‘Flame,’ The Massive Spy Malware Infiltrating Iranian Computers,” Wired, May 28, 2012, https://www.wired.com/2012/05/flame/.
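One defensive reading of the Flame case is that a certificate chain is only as strong as the weakest hash used to sign any link in it. The sketch below is an illustrative audit step, not something the cited analyses describe: it flags chain entries whose signature algorithm relies on MD5 or SHA-1. The dictionary layout, the `weak_links` helper, and the example chain are all hypothetical; the algorithm strings follow common naming such as `md5WithRSAEncryption`.

```python
import re

# Digests treated as too weak for signing certificates in this sketch.
_WEAK = re.compile(r"(md5|sha1)(?!\d)")


def uses_weak_digest(signature_algorithm: str) -> bool:
    """Return True if the algorithm name indicates an MD5 or SHA-1 digest."""
    name = signature_algorithm.lower().replace("-", "").replace("_", "")
    return _WEAK.search(name) is not None


def weak_links(chain: list) -> list:
    """List the subjects of chain entries signed with a weak digest."""
    return [
        cert["subject"]
        for cert in chain
        if uses_weak_digest(cert["signature_algorithm"])
    ]


# Hypothetical chain metadata, as a parser might report it.
example_chain = [
    {"subject": "Example Root CA", "signature_algorithm": "sha256WithRSAEncryption"},
    {"subject": "Example Licensing CA", "signature_algorithm": "md5WithRSAEncryption"},
    {"subject": "Example Code Signer", "signature_algorithm": "sha256WithRSAEncryption"},
]

print(weak_links(example_chain))
```

A check like this would not by itself have stopped Flame, but it illustrates how a long-known weakness can be surfaced mechanically instead of waiting for an adversary to exploit it.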

Once the Flame malware downloaded and unpacked itself onto target machines, it connected with one of approximately eighty available command-and-control servers to await further instruction. This malware had myriad capabilities, including mapping network traffic, taking screenshots, making audio recordings, and tracking keystrokes.48 Dave Lee, “Flame: Massive Cyber-attack Discovered, Researchers Say,” BBC News, May 28, 2012, https://www.bbc.com/news/technology-18238326. The Flame infection—first detected in 2010—likely began much earlier and infected machines worldwide, though its activity was concentrated in the Middle East.49 Alexander Gostev, et al., “The Flame: Questions and Answers,” Securelist English Global, May 28, 2012, https://securelist.com/the-flame-questions-and-answers/34344. The systems infected represented a wide diversity of target types, ranging from individuals to private companies to educational institutions to government organizations. The Flame malware, like Sunburst, showed impressive diversity in design and target typing.

Flame served as an effective espionage tool, gathering a wide range of information and allowing the intruders to both collect and alter data on the target systems. As with Sunburst, there is no evidence that the Flame malware was intended to cause destruction, only to gather and exfiltrate information. The Flame designers appear to have prioritized stealth and operational longevity in creating Flame, forgoing svelte malware for a large and multi-featured one with sophisticated tricks to remove most traces of its presence from targeted computers.50 Brian Prince, “Newly Discovered ‘Flame’ Cyber Weapon On Par With Stuxnet, Duqu,” SecurityWeek, May 28, 2012, https://www.securityweek.com/newly-discovered-flame-cyber-weapon-par-stuxnet-duqu. According to a former high-ranking US intelligence official, the reported intelligence gathering surrounding the Iranian nuclear program was executed as part of a larger program intended to slow Iran’s nuclear-enrichment program, and served as cyber prepositioning for further efforts to that end.51 Greg Miller, et al., “U.S., Israel Developed Flame Computer Virus to Slow Iranian Nuclear Efforts, Officials Say,” Washington Post, June 19, 2012, https://www.washingtonpost.com/world/national-security/us-israel-developed-computer-virus-to-slow-iranian-nuclear-efforts-officials-say/2012/06/19/gJQA6xBPoV_story.html.

Initial analysis of the size, complexity, and geographic scope of Flame suggested state involvement. As in the case of Sunburst, the developer would have needed to invest significant resources and technical expertise to execute the operation, especially to calculate the hash collision that allowed access to the Windows update process. After initial wide speculation as to attribution, The Washington Post reported that the United States and Israel were responsible for the creation of the Flame malware.52 Ibid. This attribution followed the discovery of evidence informing the behavior of the intruder, and the connection made that Flame contained some of the same code as the Stuxnet malware. This attribution, though not confirmed, also fits with the apparent intentions and targets of the malware—the Iranian nuclear-enrichment program—as well as with the intrusion’s level of sophistication.53 Alexander Gostev, et al., “Full Analysis of Flame’s Command & Control servers,” Securelist English Global Securelistcom, September 17, 2012, https://securelist.com/full-analysis-of-flames-command-control-servers/34216/; Gostev, “The Flame: Questions and Answers”; Prince, “Newly Discovered ‘Flame’ Cyber Weapon On Par With Stuxnet, Duqu”; Kim Zetter, “Coders Behind the Flame Malware Left Incriminating Clues on Control Servers,” Wired, September 17, 2012, https://www.wired.com/2012/09/flame-coders-left-fingerprints.

Able Desktop

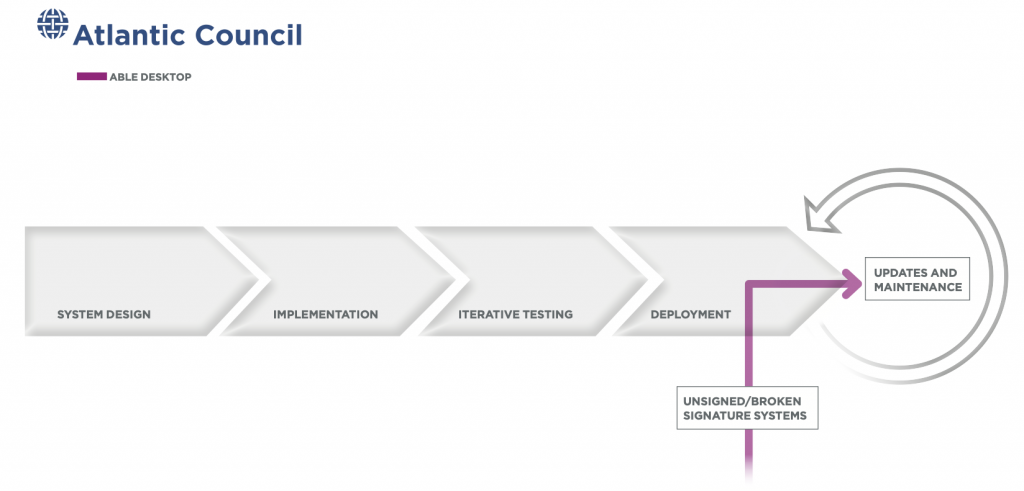

First disclosed by the Slovak security firm ESET in December 2020, Able Software’s Able Desktop application was compromised through its software supply chain using hijacked updates, affecting private-sector and government users across Mongolia.

The primary vector for this compromise was Able Desktop, an application that supports instant messaging as an add-on to the company’s main product, a human-resources management program. Initial access to Able Software’s corporate network came through targeted phishing campaigns. Once inside, the intruders were able to move laterally and compromise Able Software’s update-deployment infrastructure. The intruders used this compromise to upload two well-known malware strains, HyperBro and Tmanger, to the company’s update server. Whenever prompted to update their desktop application, Able Software’s customers received this malicious code instead.54 “Operation StealthyTrident: Corporate Software under Attack,” WeLiveSecurity, December 21, 2020, https://www.welivesecurity.com/2020/12/10/luckymouse-ta428-compromise-able-desktop. Unlike Sunburst and CCleaner, the intruders did not need to steal or forge an update signature because Able’s updates were unsigned—even when they were legitimate and did not include malicious code.

As with Sunburst, it is unclear when initial access began, or how long the operation was under way—intruders could have been hiding unobserved in systems for months, or even years. One report suggests that the intruders’ interest in the Able Software networks stretched as far back as May 2018. Able Software was a valuable target due to its widespread use by the Mongolian federal government. More than four hundred and thirty different agencies, including the Office of the President, the Ministry of Justice, and various state and law-enforcement bodies, used Able’s software suite every day, giving intruders a deep, wide view into government operations.55 Catalin Cimpanu, “Chinese APT Suspected of Supply Chain Attack on Mongolian Government Agencies,” ZDNet, December 10, 2020, https://www.zdnet.com/article/chinese-apt-suspected-of-supply-chain-attack-on-mongolian-government-agencies. This focus on government targets, along with the uploaded malware’s capabilities, strongly suggests that intruders aimed to exfiltrate large quantities of sensitive, and potentially valuable, intelligence from government agencies.

There is extensive contextual and technical evidence that this operation was part of a larger espionage campaign against the Mongolian government. The Able Software update system had been targeted by at least two other attempts using tactics similar to the 2020 operation. Although researchers loosely attributed this operation to the Chinese APT group LuckyMouse by analyzing the tools used, those tools have also been utilized elsewhere by other actors—not all of them Chinese.56 Luigino Camastra, et al., “APT Group Targeting Governmental Agencies in East Asia,” Avast Threat Labs, December 18, 2020, https://decoded.avast.io/luigicamastra/apt-group-targeting-governmental-agencies-in-east-asia/. ESET believes this lack of clarity could be because these groups, mostly state-sponsored Chinese ones, collaborate to use the same tools, or are smaller entities acting as “part of a larger threat actor that controls their operations and targeting.” This incident is part of a recurring pattern of digital espionage operations against Mongolian targets attributed by various entities to Chinese sources,57 “Khaan Quest: Chinese Cyber Espionage Targeting Mongolia: ThreatConnect: Risk-Threat-Response,” ThreatConnect, October 7, 2013, https://threatconnect.com/blog/khaan-quest-chinese-cyber-espionage-targeting-mongolia. suggesting a long-running effort to support China’s broader aims of geopolitical influence and regional, as well as social, stability, with intermittent attempts by the Mongolian government to assert leverage of its own.58 Cimpanu, “Chinese APT Suspected of Supply Chain Attack on Mongolian Government Agencies”; Anthony Cuthbertson, “Mongolia Arrests 800 Chinese Nationals in Cyber Crime Raids,” Independent, October 31, 2019, https://www.independent.co.uk/life-style/gadgets-and-tech/news/mongolia-china-cyber-crime-arrest-raids-a9179471.html.

WIZVERA VeraPort

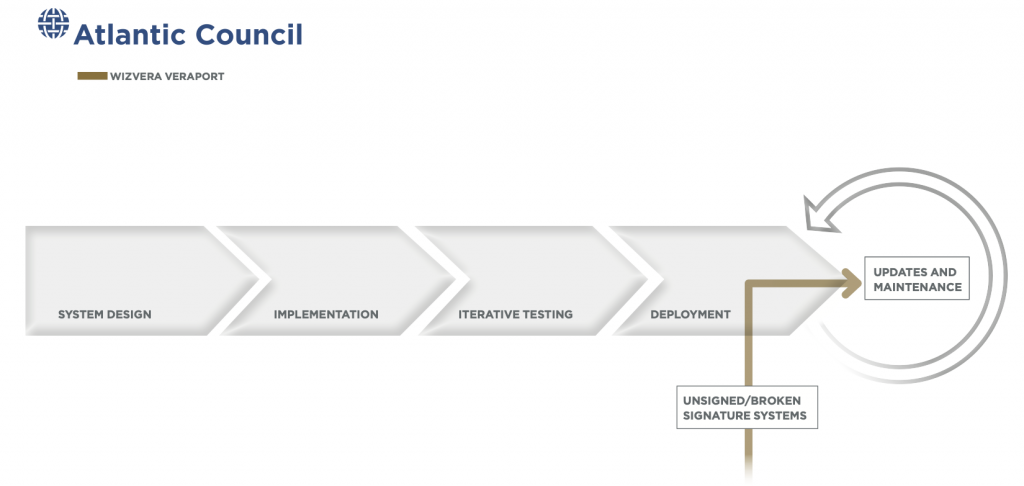

In November 2020, ESET disclosed that a widely required South Korean security tool, WIZVERA VeraPort, had been compromised, affecting an unknown number of organizations across the country.

WIZVERA VeraPort, the main vector for the intrusion, is a program used by South Korean banking and government websites to manage the download and use of mandated security plug-ins to verify the identity of users. Users are often blocked from using these sites unless they have WIZVERA VeraPort installed on their devices. In this operation, intruders took advantage of the requirement, stole code-signing certificates from two South Korean security companies, and used them to sign and then deploy malware through compromised websites.59 “Lazarus Supply‑Chain Attack in South Korea,” WeLiveSecurity, January 1, 2021, https://www.welivesecurity.com/2020/11/16/lazarus-supply-chain-attack-south-korea; Charlie Osborne, “Lazarus Malware Strikes South Korean Supply Chains,” ZDNet, November 16, 2020, https://www.zdnet.com/article/lazarus-malware-strikes-south-korean-supply-chains. Like with the Orion software in Sunburst, VeraPort’s software trusted these certificates to certify new code as authentic, and so did not seek to validate downloads more intensely.

As in the Able Desktop intrusion, spear phishing was likely used to gain initial access to these websites and position the signed malware for download. Once on users’ systems, the intruders deployed added payloads to gather information and open a backdoor.60 “Lazarus Supply‑Chain Attack in South Korea.” As with Sunburst, it is hard to determine how long intruders actively exploited VeraPort as they took great pains to disguise themselves—including varying filenames and using legitimate-looking icons.

Because the intrusion exclusively targeted users of VeraPort-supported websites, not much is known about the intended targets of the operation, other than their South Korean citizenship. This type of intrusion explicitly exploits the trust relationship between government, vendor, and citizen by utilizing mandated software as its distribution vector. The WIZVERA VeraPort compromise has been strongly attributed to Lazarus—also known as Hidden Cobra—an umbrella term used to describe groups likely tied to the North Korean government. Best remembered as the actor behind the 2014 Sony Hack, Lazarus is also known for consistently targeting South Korean citizens.61 Ibid.; Osborne, “Lazarus Malware Strikes South Korean Supply Chains.” As with Sunburst, VeraPort provided another avenue for intelligence collection across a long-running geopolitical rivalry.

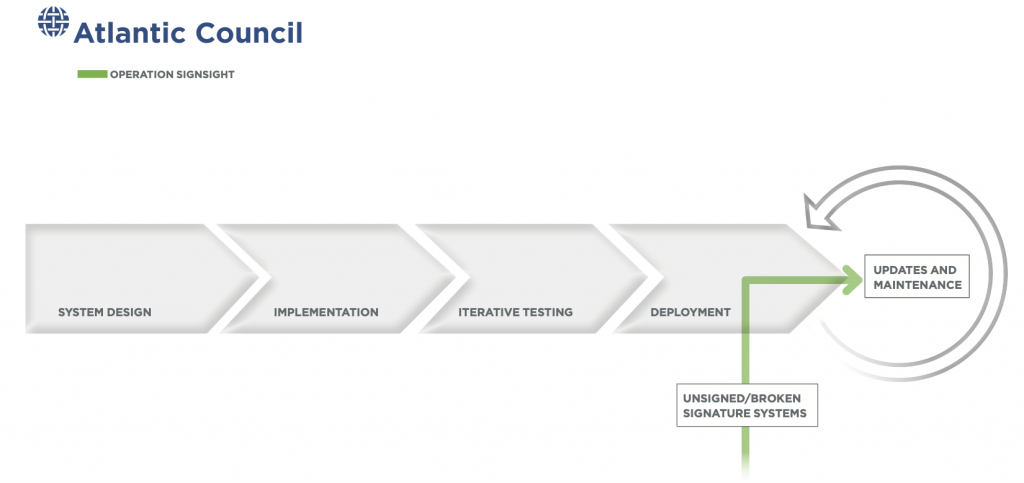

Operation SignSight

In the summer of 2020, the Vietnamese Government Certificate Authority (VGCA) was the primary vector of a software supply-chain intrusion targeting a wide range of public and private entities that used its digital-signature software, which provides both certificates of validation and software suites for handling digital document signatures. The software is widely used throughout the country, and is mandated in some cases.62 “Electronic Signature Laws & Regulations—Vietnam,” Adobe Help Center, September 22, 2020, https://helpx.adobe.com/sign/using/legality-vietnam.html; “Operation SignSight: Supply‑Chain Attack against a Certification Authority in Southeast Asia,” WeLiveSecurity, December 29, 2020, https://www.welivesecurity.com/2020/12/17/operation-signsight-supply-chain-attack-southeast-asia.

This software, like that targeted through Sunburst, was a trusted administrative tool distributed widely and at the nexus of many public-private interactions. The intruders infiltrated the VGCA website and redirected download links for two different pieces of software to instead deploy malware-laced versions from at least July 23 to August 16, 2020.63 “Operation SignSight: Supply‑Chain Attack against a Certification Authority in Southeast Asia.” When run, the compromised software opened the legitimate VGCA program, while also writing the malware onto the target’s computer. As the first step in a multi-part intrusion, the program created a backdoor on the victim machine called Smanager or PhantomNet, communicating basic information to a command-and-control server, and enabling the download and execution of additional malicious packages. The uncompromised versions of the installers had faulty signatures, so the malicious versions—whose digital signatures also failed to verify their integrity—appeared as authentic as the originals.
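Because the legitimate installers already failed signature checks, a signature warning could not distinguish the swapped downloads from the originals. One complementary check, sketched below as an assumption and not as anything the VGCA or its users are reported to have done, is to compare a download’s SHA-256 digest against a value published through a separate channel that an intruder on the website does not control. The function names and sample bytes are hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as lowercase hex."""
    return hashlib.sha256(data).hexdigest()


def matches_published_digest(installer: bytes, published_hex: str) -> bool:
    """Compare the installer's digest with one obtained out of band."""
    expected = published_hex.strip().lower()
    return hmac.compare_digest(sha256_hex(installer), expected)


# Hypothetical installer bytes and the digest the vendor published elsewhere.
legitimate_installer = b"legitimate installer bytes"
published = sha256_hex(legitimate_installer)

# What a redirected download link might serve instead.
swapped_installer = legitimate_installer + b" plus backdoor"

print(matches_published_digest(legitimate_installer, published))
print(matches_published_digest(swapped_installer, published))
```

This helps only when the intruder controls the download location but not the published digest; it offers nothing against a compromise of the vendor’s build process, where the published digest would describe the malicious artifact.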

The SignSight incident derived its potency from the trust users placed in the compromised vendor—the VGCA—and the service oligopoly it had. The VGCA is one of only a handful of entities in Vietnam authorized to issue digital certificates, a crucial component in the cryptography of digital signatures that ensures the identity of the signer, meaning the authorities that issue them must be trustworthy. In fact, the VGCA was so trusted that its own incorrectly signed legitimate software failed to raise any red flags.64 Ha Thi Dung, “Digital Signature in Vietnam,” Lexology, August 24, 2020, https://www.lexology.com/library/detail.aspx?g=3ef35305-e1f6-4dcf-82ad-bf1dcd8c0fd1. As the government provider of software for navigating digital signatures, the VGCA, much like the vectors involved in the Sunburst case, distributes to a large number of public- and private-sector clients. This gave the intrusion a wide blast radius, particularly centered on entities interacting directly with the Vietnamese government. SignSight also resembles the Sunburst intrusion in its targeting and speculated intent—espionage.

Where Operation SignSight differs from the Sunburst intrusion is in its sophistication. Though some errant instances of Smanager detected in the Philippines might imply a broader intrusion that utilized more vectors than currently understood, Operation SignSight lacks the global reach of Sunburst. Additionally, although the malicious versions of this software used deceptive names for malware files and communicated through encrypted web connections, SignSight lacks the more elaborate deception efforts seen in Sunburst. Even within the relatively niche slice of software supply-chain intrusions that target government-associated IT administrative software for intelligence gathering, there is a wide spectrum of complexity.

Some researchers attribute the intrusion to a China-backed group, due to artifacts left in the malware and to the similarities between Smanager and another backdoor, Tmanger, which is attributed to TA428, a group linked to China that has targeted East Asian countries like Mongolia, Russia, and Vietnam for intelligence-gathering purposes. Moreover, TA428 tends to target government IT entities, as did the perpetrators of Operation SignSight, with an eye toward extracting intelligence. As with Able Desktop, cooperation and sharing of malicious tools and infrastructure between Chinese groups complicates precise attribution.65 “[RE018-2] Analyzing New Malware of China Panda Hacker Group Used to Attack Supply Chain against Vietnam Government Certification Authority—Part 2,” VinCSS Blog, December 25, 2020, https://blog.vincss.net/2020/12/re018-2-analyzing-new-malware-of-china-panda-hacker-group-used-to-attack-supply-chain-against-vietnam-government-certification-authority.html?m=1; “Operation StealthyTrident: Corporate Software under Attack.”; “Threat Group Cards: A Threat Actor Encyclopedia,” TA428—Threat Group Cards: A Threat Actor Encyclopedia, https://apt.thaicert.or.th/cgi-bin/showcard.cgi?g=TA428&n=1. A Chinese cyber intrusion into Vietnam would provide continued visibility into the decision-making of a burgeoning regional player whose security relationships with Chinese rivals in the Indo-Pacific, including the United States, continue to grow.

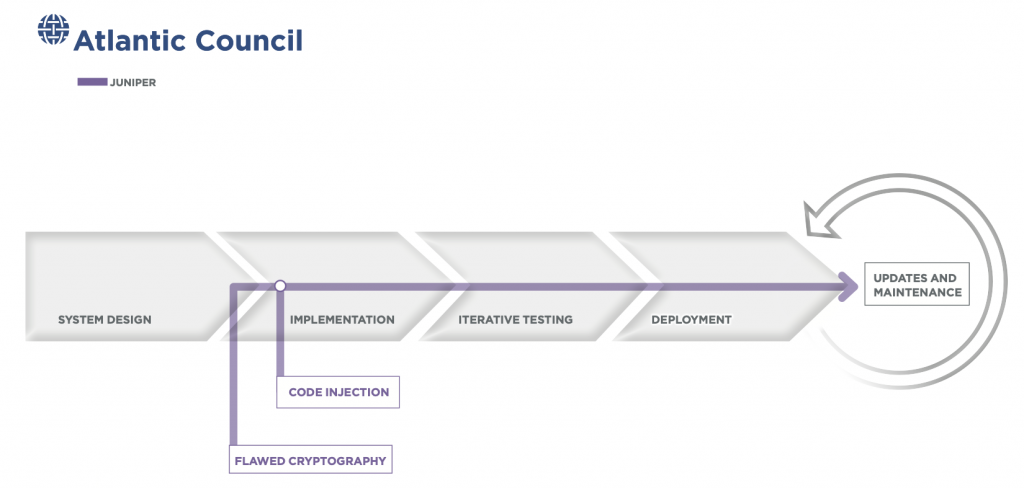

Juniper

Juniper Networks, an industry leader in networking products based in Sunnyvale, California, publicly announced a serious flaw in its NetScreen line of products in December 2015. Hackers infiltrated Juniper’s software-development process and compromised the algorithm used to encrypt classified communications, allowing them to intercept data from multinational corporations and US government agencies.66 Evan Perez and Shimon Prokupecz, “Newly Discovered Hack Has U.S. Fearing Foreign Infiltration,” CNN, December 19, 2015, https://www.cnn.com/2015/12/18/politics/juniper-networks-us-government-security-hack; Emily Price, “Juniper Networks Security Flaw May Have Exposed US Government Data,” Guardian, December 22, 2015, https://www.theguardian.com/technology/2015/dec/22/juniper-networks-flaw-vpn-government-data.

The NetScreen line comprises high-performance, commercial-grade security systems that are designed to provide firewall, virtual private network (VPN), and network traffic-management capabilities to large organizations. ScreenOS is the operating system powering NetScreen devices, through which authorized users transmit classified data using the built-in VPN. In 2006, the National Institute of Standards and Technology (NIST) released standards on a random-number-generation algorithm developed by the NSA, called the Dual Elliptic Curve Deterministic Random Bit Generator (or Dual_EC_DRBG).67 Elaine Barker and John Kelsey, “Recommendation for Random Number Generation Using Deterministic Random Bit Generators (Revised), NIST Special Publication 800-90,” National Institute of Standards and Technology, March 2007, https://projectbullrun.org/dual-ec/documents/SP800-90revised_March2007.pdf. This algorithm relies on a static value, known as “Q,” to generate the random values used to encrypt data. Juniper, under the assumption that Dual_EC_DRBG was safe, began to ship its products using a Juniper-specific “Q” value sometime between 2008 and 2009.68 “‘Backdoor’ Computer Hack May Have Put Government Data at Risk,” CBS News, December 19, 2015, https://www.cbsnews.com/news/juniper-technologies-computer-hack-could-put-government-data-at-risk/; “TechLibrary,” Juniper Networks, https://www.juniper.net/documentation/en_US/release-independent/screenos/information-products/pathway-pages/netscreen-series/product/; Sen. Ron Wyden, et al., “Letter to The Honorable General Paul M. Nakasone,” United States Congress, January 28, 2021, https://www.wyden.senate.gov/imo/media/doc/012921%20Wyden%20Booker%20Letter%20to%20NSA%20RE%20SolarWinds%20Juniper%20Hacks.pdf; Kim Zetter, “Researchers Solve Juniper Backdoor Mystery; Signs Point To NSA,” Wired, December 12, 2015, https://www.wired.com/2015/12/researchers-solve-the-juniper-mystery-and-they-say-its-partially-the-nsas-fault/.

Originally, Juniper planned to use the Dual_EC_DRBG algorithm alongside a second algorithm to ensure product security. For reasons that remain unclear, the second algorithm was not included in the software, so Juniper products relied solely and by default on Dual_EC_DRBG. In 2012, unbeknownst to Juniper, malicious actors infiltrated Juniper’s software-development process and changed the aforementioned “Q” static value to one they knew. This alteration allowed them to intercept and eavesdrop on sensitive communications by organizations using Juniper VPNs shipped after August 2012. At the time, Juniper’s clients for this product included the Department of Defense, the Department of Justice, the Federal Bureau of Investigation, and the Department of the Treasury. It is unclear how much, if any, data was exfiltrated using this exploit. However, honeypots created after the vulnerability’s discovery indicated that the attackers were active. Because the exploit was not discovered until December 2015, the attackers were able to eavesdrop on “VPN-protected” communications for roughly three years.69 Justin Katz, “Lawmakers Press NSA for Answers about Juniper Hack from 2015,” FCW, January 31, 2021, https://fcw.com/articles/2021/01/31/juniper-hack-algo-nsa-letter.aspx; Evan Kovacs, “Backdoors Not Patched in Many Juniper Firewalls,” Security Week, January 6, 2016, https://www.securityweek.com/backdoors-not-patched-many-juniper-firewalls; Perez and Prokupecz, “Newly Discovered Hack Has U.S. Fearing Foreign Infiltration”; Zetter, “Researchers Solve Juniper Backdoor Mystery; Signs Point To NSA.”
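Why control of a single constant matters so much can be illustrated with a deliberately simplified sketch. The code below is not Dual_EC_DRBG and does not reflect Juniper's implementation: it swaps elliptic-curve points for modular exponentiation, omits the output truncation the real algorithm performs, and every name and value in it (`P_MOD`, `G`, `make_backdoored_h`, `ToyGenerator`, `recover_next_state`, the exponent `65537`, the seed) is a hypothetical chosen for illustration. It only shows the general idea that whoever picks the public constant together with a secret related value may be able to turn one observed output into the generator's next hidden state.

```python
# Toy analogue of a Dual_EC-style generator. NOT the real algorithm:
# modular exponentiation stands in for elliptic-curve arithmetic.
P_MOD = 2**61 - 1  # a Mersenne prime used as the toy modulus (assumption)
G = 3              # plays the role of the fixed public constant "P"


def make_backdoored_h(e):
    """Pick the replaceable constant h (the analogue of "Q") with a trapdoor.

    Returns (h, d) where h = G^e mod P_MOD and d is the secret value
    satisfying h^d == G (mod P_MOD). Needs Python 3.8+ for pow(e, -1, m).
    """
    d = pow(e, -1, P_MOD - 1)  # inverse of e modulo the group exponent
    return pow(G, e, P_MOD), d


class ToyGenerator:
    """Emits h^state as output, then advances state to G^state."""

    def __init__(self, seed, h):
        self.state = seed
        self.h = h

    def next_output(self):
        out = pow(self.h, self.state, P_MOD)    # value visible to observers
        self.state = pow(G, self.state, P_MOD)  # hidden state update
        return out


def recover_next_state(observed_output, d):
    """Trapdoor step: out^d = (h^d)^s = G^s, which is the next hidden state."""
    return pow(observed_output, d, P_MOD)


# Whoever chose h also knows d.
h, d = make_backdoored_h(65537)

victim = ToyGenerator(seed=123456789, h=h)
leaked = victim.next_output()  # a single output seen on the wire

# The eavesdropper rebuilds the victim's state and can now predict
# every later "random" value the victim will produce.
eavesdropper = ToyGenerator(seed=recover_next_state(leaked, d), h=h)
victim_future = [victim.next_output() for _ in range(5)]
predicted = [eavesdropper.next_output() for _ in range(5)]
print(victim_future == predicted)
```

In this toy, nothing about the generator looks broken to anyone who does not hold `d`, which is consistent with how a changed constant could go unnoticed for years.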

According to leaked materials, the NSA was well aware of this “Q” static value backdoor in the Dual_EC_DRBG algorithm and may have intentionally left it there for its own signals-intelligence purposes. Juniper networking equipment is used in many target countries, such as Pakistan, Yemen, and China, making the backdoor highly valuable to Western intelligence agencies.70 “Assessment of Intelligence Opportunity—Juniper,” Intercept DocumentCloud, February 3, 2011, https://beta.documentcloud.org/documents/2653542-Juniper-Opportunity-Assessment-03FEB11-Redacted; Ryan Gallagher and Glenn Greenwald, “NSA Helped British Spies Find Security Holes In Juniper Firewalls,” Intercept, December 23, 2015, https://theintercept.com/2015/12/23/juniper-firewalls-successfully-targeted-by-nsa-and-gchq; Nicole Perlroth, Jeff Larson, and Scott Shane, “N.S.A. Able to Foil Basic Safeguards of Privacy on Web,” New York Times, September 5, 2013, https://www.nytimes.com/2013/09/06/us/nsa-foils-much-internet-encryption.html?pagewanted=all&_r=1. The Juniper hack demonstrates both the benefits and the risks of purposefully creating backdoors: the same weakness that serves intelligence collection abroad can be leveraged by adversaries against the US government agencies that use the software. In the aftermath of the Sunburst campaign, senators sent a letter in January 2021 to the NSA questioning why extra software supply-chain safeguards were not put into place after the 2015 Juniper hack. Attribution for this attack is still unknown.71 Wyden, et al., “Letter to The Honorable General Paul M. Nakasone.”

Trendlines leading to Sunburst

Taken together, these cases convey trends in state-backed software supply-chain compromises: the identification and extraction of valuable information from adversaries; the undermining of key technical mechanisms of trust to gain access and preserve operational secrecy; and the targeting of deeply privileged programs or those deployed widely across governments and industry, usually at the seams of assurance, with less-than-adequate protection. Far from an unprecedented bolt-from-the-blue attack, Sunburst echoed many of these trends as a supply-chain compromise while adding a highly effective focus on the seams of security in cloud deployments and on-premises identity systems. Unfortunately for the organizations targeted, these supply-chain lessons went largely unheeded, and much of what might have been done to limit the harm of these cloud-focused techniques happened late, or not at all.

Figure 10. Mapping of Section II cases through the software supply chain

The contest for information

Over the past decade, there have been at least one hundred and thirty-eight software supply-chain attacks or vulnerability disclosures. Thirty of these are tied to states—four, including Sunburst, are linked to Russia, versus eight to China.72 Herr, et al., Breaking Trust, https://www.atlanticcouncil.org/resources/breaking-trust-the-dataset.

The Flame, VeraPort, CCleaner, and Able Desktop operations all provided their operators with stealthy means to infiltrate sensitive networks and access systems without detection for months, if not years.73 Zetter, “Meet ‘Flame,’ The Massive Spy Malware Infiltrating Iranian Computers”; Ravie Lakshmanan, “Trojanized Security Software Hits South Korea Users in Supply-Chain Attack,” Hacker News, November 16, 2020, https://thehackernews.com/2020/11/trojanized-security-software-hits-south.html; Martin Brinkmann, “CCleaner Malware Second Payload Discovered,” GHacks Technology News, September 21, 2017, https://www.ghacks.net/2017/09/21/ccleaner-malware-second-payload-discovered; Cimpanu, “Chinese APT Suspected of Supply Chain Attack on Mongolian Government Agencies.” The Flame malware was able to control infected machines’ internal microphones to record conversations, siphon contact information via nearby Bluetooth devices, record screenshots, and manipulate network devices to collect usernames and passwords.

The Sunburst designers included some similar capabilities, such as transferring and executing files on affected systems, which gave them the ability to exfiltrate large amounts of information from targets over the course of months.74 “Highly Evasive Attacker Leverages SolarWinds Supply Chain to Compromise Multiple Global Victims with SUNBURST Backdoor,” FireEye, December 13, 2020, https://www.fireeye.com/blog/threat-research/2020/12/evasive-attacker-leverages-solarwinds-supply-chain-compromises-with-sunburst-backdoor.html. Intruders appear to have accessed SolarWinds’ corporate networks and infiltrated the company’s build infrastructure as early as September 2019, through still-unconfirmed means.

The intelligence contest involves more than the acquisition of information. It combines data collection, data denial, and securing access to target networks, which together allow actors to maneuver more effectively around adversary defenses, in and outside of cyberspace, and to preposition capabilities for future use. Triton, not mentioned above, serves as a good example of prepositioning. Intruders in the Triton case first gained access to the plant’s networks through a poorly secured engineering workstation, before moving to a computer that controlled a number of physical safety systems using a previously unknown software vulnerability, or zero-day.75 “Triton Malware Is Spreading,” Cyber Security Intelligence, March 19, 2019, https://www.cybersecurityintelligence.com/blog/triton-malware-is-spreading-4177.html; Nimrod Stoler, “Anatomy of the Triton Malware Attack,” CyberArk, February 8, 2018, https://www.cyberark.com/resources/threat-research-blog/anatomy-of-the-triton-malware-attack; Martin Giles, “Triton Is the World’s Most Murderous Malware, and It’s Spreading,” MIT Technology Review, March 5, 2019, https://www.technologyreview.com/2019/03/05/103328/cybersecurity-critical-infrastructure-triton-malware. This vulnerability enabled the malware to alter the system design by accessing supervisor privileges to inject the malware payload into the firmware, as well as modify a piece of administrative software running on the computer. This software, called Triconex, linked the computer to a number of physical control and safety systems. The malware enabled intruders to read, write, and execute commands inside the safety-control network, which triggered two shutdowns in June and August 2017, leading to the operation’s unmasking and obscuring its final stages from discovery.76 Stoler, “Anatomy of the Triton Malware Attack”; “Triton Malware Is Spreading,” Cyber Security Intelligence.

Although the final stage never executed, the privileged information the intruders accessed about the plant gave them the ability to cause real physical harm. In a worst-case scenario, the access the intruders had before their discovery would have allowed them to trigger explosions causing casualties both at the plant and in surrounding areas.77 Giles, “Triton Is the World’s Most Murderous Malware, and It’s Spreading.” Safety instrumented systems are not unique to petrochemical plants in Saudi Arabia; they secure everything from transportation systems to nuclear power plants. The execution of such an action would fall outside the realm of espionage operations, but the preparation for it demands exquisite information about a target system. These intrusions, once discovered, no longer provided tangible espionage capabilities, but the preparation for such an eventuality constitutes prepositioning. If the intent of Triton was to prove a potential destructive capability, it succeeded.

Tactics: Run silent, run deep

For most of the studied cases, intruders’ key point of entry to their targets was the circumvention of code-signing protections to compromise software. From there, intruders moved laterally throughout the networks they could access via the initial infection. Among these eight total cases (including Sunburst), a diversity of techniques was employed to break, evade, or abuse the protections of these signatures. Flame’s operators managed to solve the significant mathematical problem of breaking the cryptographic protections of a certificate that granted excessive permissions in order to target individual users. In the Juniper case, intruders exploited a backdoor in a cryptographic standard used on private networks meant to protect classified government communications. Others opted for more cost-efficient, less demanding techniques, such as stealing legitimate code-signing certificates (VeraPort)78 Osborne, “Lazarus Malware Strikes South Korean Supply Chains.” or compromising build servers to inject code prior to signing (CCleaner).79 Lily Hay Newman, “Inside the Unnerving CCleaner Supply Chain Attack,” Wired, April 17, 2018, https://www.wired.com/story/inside-the-unnerving-supply-chain-attack-that-corrupted-ccleaner. At the other end of the spectrum, some compromises relied on shortcomings already present in code-integrity protections by targeting unsigned software, programs with signatures that had already failed verification, or systems that did not fully authenticate certificates.
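The difference between tampering before and after signing can be made concrete with a minimal sketch. It is an illustration under stated assumptions, not a description of any vendor's pipeline: a keyed hash (HMAC) from the Python standard library stands in for real asymmetric code signing, and the key, the `build`, `sign`, and `verify` functions, and the sample "source" are all hypothetical. The sketch shows that a signature only attests to what the signer was handed; if code is injected on the build server first, the malicious artifact verifies cleanly.

```python
import hashlib
import hmac

# Hypothetical vendor signing key. Real code signing uses asymmetric keys
# and certificates; HMAC is only a simple stand-in for this illustration.
SIGNING_KEY = b"hypothetical-vendor-signing-key"


def sign(artifact: bytes) -> bytes:
    """Produce a 'signature' over the exact bytes that are about to ship."""
    return hmac.new(SIGNING_KEY, artifact, hashlib.sha256).digest()


def verify(artifact: bytes, signature: bytes) -> bool:
    """Check that the artifact is byte-for-byte what was signed."""
    return hmac.compare_digest(sign(artifact), signature)


def build(source: bytes, build_server_compromised: bool) -> bytes:
    """Toy build step; a compromised server appends an implant before signing."""
    if build_server_compromised:
        source += b"\n# implant added during build"
    return b"BINARY:" + source


clean_source = b"print('hello')"

# Case 1: injection happens BEFORE signing, on the build server.
shipped = build(clean_source, build_server_compromised=True)
signature = sign(shipped)
print(verify(shipped, signature))            # the malicious build verifies

# Case 2: tampering happens AFTER signing, for example in transit.
tampered_in_transit = shipped + b"!"
print(verify(tampered_in_transit, signature))  # verification fails
```

Signature checks catch the second case but are blind to the first, which is why the integrity of the build environment matters as much as the protection of the signing key itself.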