How modern militaries are leveraging AI

Table of contents

- The importance of human-machine teaming and key insights

- Applications of human-machine teaming

- Demonstrating advantage through use cases

- Pathways to HMT adoption at scale and pace

- Summary of recommendations and conclusion

- Acknowledgements

- About the authors

The importance of human-machine teaming and key insights

Machines are becoming ubiquitous across the twenty-first-century battlespace, and modern militaries must embrace human-machine teaming (HMT) or risk conceding military edge to competitors effectively leveraging artificial intelligence (AI) and autonomy.[1] This report investigates the implications of the increasing incorporation of AI into military operations, with a particular focus on understanding the parameters, advantages, and challenges to the US Department of Defense’s (DOD’s) adoption of the concept of HMT.[2]

[1] This paper uses the term human-machine teaming (HMT) to signify both the teaming of human operators with semiautonomous machines (sometimes referred to as manned-unmanned teaming) and interactions between humans and AI agents that do not take physical form.

[2] From November 2022 to June 2023, the authors held workshops and interviews with defense and national security experts and practitioners to help inform their research.

Key takeaways

Looking through the lens of three military applications for HMT, the authors arrive at the following conclusions.

- HMT has the potential to change warfare and solve key operational challenges: AI and HMT could transform conflict and noncombat operations by increasing situational awareness, improving decision-making, extending the range and lethality of human operators, and helping forces gain and maintain advantage across the multi-domain fight. HMT can also drive efficiencies in many supporting functions such as logistics, sustainment, and back-office administration, reducing the costs and timelines of these processes and freeing humans to carry out higher-value tasks within these mission areas.

- DOD must expand its definitions for HMT: Definitions of HMT should be expanded to include the breadth of human interactions with autonomous uncrewed systems and AI agents, including those that have no physical form (e.g., decision support software). Extending the definition beyond interactions between humans and robots allows DOD to realize the full range of HMT use cases, from the use of lethal weapon systems and drone swarms in high-intensity warfare to leveraging algorithms to fuse data and realize virtual connections in the information domain.

- HMT development and employment must prioritize human-centric teaming: AI development is moving at an impressive pace, driving potential leaps ahead in machine capability and placing a premium on ensuring the safety, reliability, and trustworthiness of AI agents. As much attention must be dedicated to developing the competencies, comfort levels, and trust of human operators to effectively exploit the value of HMT and ensure humans stay at the center of human-machine teams.

- DOD must move from the conceptual to the practical: HMT as a concept is gaining momentum within some elements of the DOD enterprise. Still, advocates for increased AI and HMT adoption stress the need to move the conversation from the conceptual to the practical, transitioning capability development into the real-time testing and employment of HMT capabilities (as the Navy has demonstrated through its AI experimentation with Task Force 59) to better articulate and demonstrate the operational advantages HMT can deliver.

- Experimentation is crucial to building trust: Iterative, real-world experimentation in which humans develop new operational concepts, test the limits of their machine teammates, and better understand those machines’ breaking points, strengths, and weaknesses in a range of environments will play a key role in speeding HMT adoption. This awareness is also essential as human operators develop the trust in their AI teammates required to effectively capitalize on the potential of HMT.

- DOD must address bureaucratic challenges to AI adoption: DOD’s risk-averse culture and siloed bureaucracy are slowing acquisition, experimentation, and adoption of HMT concepts and capabilities. A more agile and flexible acquisition process for HMT capabilities, iterative experimentation, incentives to take on risk, and greater digital literacy across the force are necessary to overcome these adoption challenges.

Definitions and components of HMT

HMT refers to the employment of AI and autonomous systems alongside human decision-makers, analysts, operators, and watchkeepers. HMT combines the capabilities of intelligent humans and machines in concert to achieve a military outcome. At its core, HMT is a relationship with four equally important elements:

- Human(s): An operator (or operators) who provides inputs to and tests machines and leverages their outputs;

- Machine(s): Ranging from an AI and machine learning (ML) algorithm to a drone swarm, the machine holds a degree of agency to make determinations and supports a specified mission;

- Interaction(s): The way in which the human(s) and machine(s) interface to meet a shared mission; and

- Interface(s): The mechanisms and displays through which humans interact with machines.

Applications of human-machine teaming

HMT is most frequently envisioned narrowly as the process of humans interacting with anywhere from one to several hundred or more autonomous uncrewed systems. In its most basic form, this vision of HMT is not new: Humans have collaborated with intelligent machines for decades (early machine talents were epitomized in 1997, when the supercomputer Deep Blue defeated world chess champion Garry Kasparov in a six-game match), and militaries have long tested concepts to advance this critical capability. The recent impressive pace of development in AI and robotics, however, is driving increased consideration of the new capabilities, efficiencies, and advantages these technologies can enable.

The “loyal wingman” concept is a frequently cited example of this manifestation of HMT, in which a human pilot controls the tasking and operations of a handful of relatively inexpensive, modular, attritable autonomous uncrewed aerial systems (UAS). These wingman aircraft can fly ahead of the crewed aircraft to carry out a range of missions, including electronic attack or defense; intelligence, surveillance, and reconnaissance (ISR); and strike. They can also act as decoys that draw fire away from other assets and “light up” enemy air defenses.

Interest in this manifestation of HMT has increased not just in the United States but in most modern militaries. In addition to the United States, Australia, China, Russia, the United Kingdom, Turkey, and India all have at least one active loyal wingman development program. Meanwhile, the sixth-generation fighter efforts, including the Global Combat Air Programme (United Kingdom, Italy, Japan), the Next Generation Air Dominance programs (US Air Force and Navy), and the Future Combat Air System (Germany, France, Spain), involve system-of-systems concepts of airpower that stress both HMT and machine-machine teaming.

As important as this category of HMT is and will continue to be to emerging military capabilities, discussion of HMT should include the full breadth of human interaction with AI agents (which learn from and make determinations based on their environments, experiences, and inputs), including the overwhelming majority of interactions that occur with algorithms that possess no physical form. Project Maven is one example of how DOD and now the National Geospatial-Intelligence Agency use this category of HMT to autonomously detect, tag, and trace objects or humans of interest from various forms of media and collected intelligence, enabling human analysts and operators to prioritize their areas of focus.

Beyond imagery analysis and target identification, nonphysical manifestations of HMT can support a range of important tasks such as threat detection and data processing and analysis. This support is essential to military efficacy in operational environments marked by significant increases in speed, complexity, and available data. Nonphysical HMT can also generate efficiencies in logistics and sustainment, training, and back-office administrative tasks, reducing costs and timelines for execution.

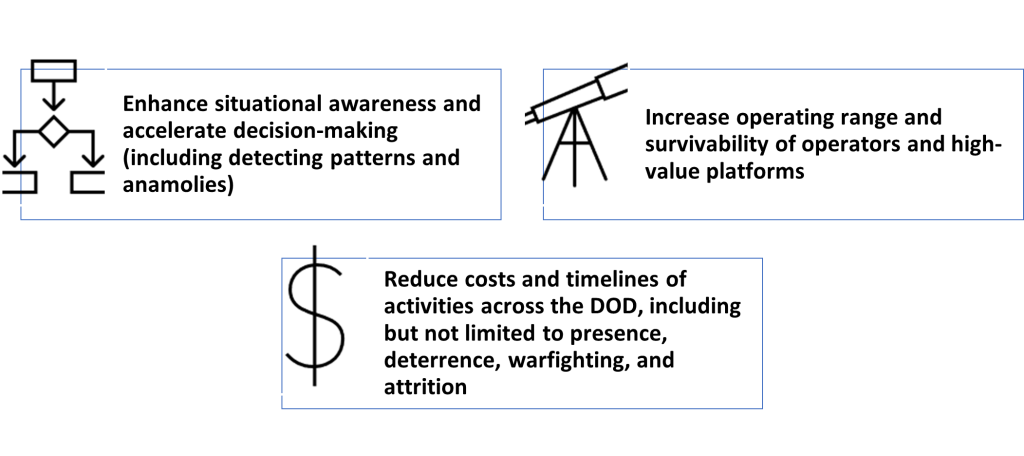

By combining the processing power and decision support capabilities of AI with the social intelligence and judgment of humans and, in some cases, the force-multiplying effects of uncrewed systems with different degrees of autonomy, HMT can provide multiple layers of overlapping advantages to the United States and its allies and partners, including those high-level advantages listed in Figure 1.

Figure 1: High-level description of three layers of HMT value.

DOD recognition of the current and future multilayered value of HMT as part of broader efforts to “accelerate the adoption of AI and the creation of a force fit for our time” has increased. Still, several persistent challenges to adoption of AI and HMT endure throughout the Pentagon. To accelerate and deepen HMT adoption, the DOD must commit to an approach that aligns development efforts and private sector engagement, creating flexibility for acquisition officials to scale HMT solutions across the defense enterprise. This approach must be complemented by:

- Continued and increased focus on building trust between humans and machine partners;

- Leadership in establishing best practices and norms around ethics and safety;

- Aggressive and iterative experimentation; and

- Clear and consistent messaging.

These elements are crucial to realizing the value and advantages HMT can deliver across the multi-domain future fight.

Demonstrating advantage through use cases

Use cases serve as a means of better understanding how HMT can provide value and lead to advantage in and across several missions and environments. Practitioners have certainly seen or experienced use cases in various settings, including war games and analysis of the ongoing war in Ukraine, and the slow pace of HMT adoption may call into question the utility of use cases. Still, highlighting the varied and, in certain instances, underappreciated applications of HMT can help demonstrate the different contexts in which HMT acts as a force multiplier and how this capability can support the US military in meeting the demands of the modern battlefield.

However, changing perceptions of HMT across a large organization such as DOD is an iterative task that requires frequent reinforcement of its value, especially as the technologies and concepts supporting HMT create new or enhanced opportunities. The three use cases discussed below are far from exhaustive (our workshops and research explored several other compelling use cases), but the authors chose them because they reflect the layered value of HMT in serving missions and meeting operational threats and challenges that defense planners are urgently considering, as described in Table 1.[3]

[3] HMT’s relevance to the future of cognitive and information warfare, anticipatory logistics and resilient sustainment, training, and military medicine was raised in project interviews and workshops.

Table 1: A high-level review of the advantages of each of the case studies discussed in this briefing.

| Use cases of HMT | Key advantages |
| --- | --- |
| A2/AD conflict | The use of masses of expendable and attritable systems to penetrate A2/AD environments, sustain forces, and extend the range of effects from crewed platforms operating outside A2/AD cordons. Finding, fixing, and tracking key nodes and threats in a data-rich environment. Providing situational awareness at the edge. |
| Sense-making and targeting | Enhancing situational awareness and threat detection through data processing and fusion, ensuring decisions are made at the speed of relevance. Finding connections that human analysts and operators did not know were present. Supporting humans in target identification and prioritization across domains. Improving precision of effects by recommending to human decision-makers the most applicable kinetic or non-kinetic weapon to engage targets. |
| Presence, prioritization, and deterrence | Extending the range of high-value crewed assets through the use of ISR meshes that incorporate several uncrewed systems. Identifying anomalies and disruptions in activity patterns across geographically dispersed theaters. Prioritizing threats and ensuring efficient resource allocation based on AI-driven assessments. Aggressive and iterative experimentation in real-world environments builds trust between operators and their machine partners. |

Anti-access and area-denial (A2/AD): Coping with mass, range, and attrition

Determining how to conduct operations in an A2/AD environment is a clear priority for defense planners. This is especially the case in the Indo-Pacific, where the military modernization effort of the People’s Republic of China has emphasized utilizing pervasive multi-domain sensors and a surfeit of kinetic and non-kinetic strike assets to establish cordons in which US and allied forces are highly vulnerable to enemy fires and, in the worst-case scenario, unable to operate effectively.

HMT will not mitigate all risks of operating against robust A2/AD systems, though it can help US and allied forces better manage these risks in several ways, including by processing and analyzing large and complex datasets to support better and faster human decision-making.

Teaming uncrewed systems, crewed assets, and human operators

The use of attritable and expendable uncrewed systems, in conjunction with crewed assets and human controllers and decision-makers, can achieve several important objectives in the A2/AD context. Most notably, these smaller, less expensive, generally modular systems can be used to saturate A2/AD systems, identify enemy defenses, and force adversaries to expend their deep stores of munitions—all while extending the operating range of higher-value crewed and uncrewed assets and reducing the risks to crewed assets and their human operators.

Attritable or reusable systems are those that cost considerably less than higher-end uncrewed systems such as the MQ-9 Reaper.[4] Although they are designed to be reused, these systems cost significantly less than high-value uncrewed systems and advanced crewed systems. As a result, they can be placed into contested environments ahead of more-expensive platforms with considerably reduced risk to force structure cost, especially if paired with in-theater means of reconstituting them. Expendable systems cost even less than attritable systems and are most often designed for a single use. Loitering munitions are one category of expendable uncrewed systems.

[4] The generally acknowledged cost range for attritable or reusable aircraft is $2 million to $20 million, though there is no consensus as to whether this cost range includes only the aircraft or also includes mission systems.

That said, reducing risk to human operators does not mean eliminating it, and the use of attritable uncrewed aerial systems (UAS) and uncrewed surface vehicles (USVs) will still incur costs. Moreover, even attritable systems and their payloads can be worth several million dollars. New calculations of value, as well as increased capacity to reconstitute systems, must keep pace with expected levels of attrition to ensure that HMT-enabling force structures are sized correctly.

Sense-making and decision support: Enhanced situational awareness and improved decision-making at the pace of relevance

The A2/AD environment also serves as an example of how machines can support humans in the crucial and increasingly demanding task of sense-making—the art of interpreting and fusing data for enhanced decision-making.

Sense-making in A2/AD environments

A2/AD environments will be characterized by an abundance of complex datasets and by both signal and noise across domains, including the electromagnetic spectrum. The amount of data available to operators will be overwhelming. Multi-domain sensors and surveillance and strike assets will be actively operating and engaging with both friendly and adversary forces, creating a need for AI agents to help process and filter data and feed relevant information back to the warfighter. The result will be to improve the quality and speed with which human operators filter data and then fix and track critical nodes in adversary A2/AD systems. This high-level A2/AD example reveals one context in which AI-enabled fusion and processing of complex datasets can increase situational awareness and speed up decision-making. But the applications of this sense-making are impressively broad, including increasing the speed and precision of target identification, determining the appropriate kinetic or non-kinetic weapon to strike a target, and ensuring precision of effects.

Targeting: Joint all-domain command and control (JADC2)

DOD’s “connect everything” JADC2 effort offers another example of how HMT can be applied to improve targeting and speed up sensor-to-shooter processing. As a January 2022 Congressional Research Service report observed, “JADC2 intends to enable commanders to make better decisions by collecting data from numerous sensors, processing the data using AI algorithms to identify targets, then recommending the optimal weapon—both kinetic and non-kinetic—to engage the target.” While JADC2 is still largely a concept rather than an architecture for future military operations, the US military is already using AI to help find and track possible targets or entities of interest on the battlefield. In September 2021, Secretary of the US Air Force Frank Kendall acknowledged that the Air Force had “deployed AI algorithms for the first time to a live operational kill chain” to provide “automated target recognition.” Kendall noted that by doing so, the Air Force hoped to “significantly reduce the manpower-intensive tasks of manually identifying targets—shortening the kill chain and accelerating the speed of decision-making.”

AI in the intelligence field

Sense-making through human-machine teams is also shaping the future of disciplines such as intelligence analysis and mission planning (see sidebar below), in which AI-enabled data fusion, pattern and anomaly detection, and research and analysis support are helping analysts manage and exploit the explosion in available sources and data. Tasks that would take humans days to perform can now be completed in hours, allowing humans to concentrate on the most relevant information derived from large datasets. For example, during the war in Ukraine, the Ukrainian Armed Forces are already using natural language processing tools that leverage AI to translate and analyze intercepted Russian communications, saving analysts time and allowing them to focus on key messages and intelligence. AI is not only speeding up analysis but also demonstrating value by bringing “unknown knowns” (observed but ignored or forgotten connections, insights, and information) to an analyst’s attention and by articulating the value or quality of information to the human decision-maker.[5]

[5] Phone interview with Harry Kemsley, president of Government and National Security, Janes, January 31, 2023.

Large language models for intelligence and planning activities

The release of OpenAI’s GPT-4 in March 2023 has prompted discussion of how DOD can leverage similar large language model (LLM) tools to support intelligence activities. There is understandable concern that the current level of sophistication of LLMs and their tendency to “hallucinate” (make up incorrect information) would make extensive use of, or reliance on, these tools premature or even counterproductive.

However, experimentation with LLMs would be useful in better understanding where and how these tools can add value, especially as they become more reliable. An April 2023 War on the Rocks article detailed how the US Marine Corps’ School of Advanced Warfighting is using war games to explore how LLMs can assist humans in military planning. These systems were used to provide, connect, and visualize different layers of information and levels of analysis—such as strategic-level understanding of regional economic relationships and more-focused analysis of dynamics in a specific country—which planners then used to refine possible courses of action and better understand the adversary’s system.

In January 2023, the Intelligence Advanced Research Projects Activity (IARPA), situated within the Office of the Director of National Intelligence, announced a program known as REASON (Rapid Explanation, Analysis, and Sourcing Online). The program will use AI-enabled software to improve human-authored intelligence assessment products. The software reviews human-authored reporting, automatically recommends additional sources the author may not be aware of or did not use, and suggests how to improve the analytical quality of the report, performing the role of an autonomous red-team reviewer. This program is a step in the right direction, demonstrating one of many ways in which AI can help humans make connections between data and sources and improve analysis and decision-making.[6]

[6] Phone interview with Harry Kemsley, president of Government and National Security, Janes, January 31, 2023.

Presence, prioritization, and deterrence: Coping with distance and complexity

The US military faces significant, varied, and frequently dispersed challenges across its geographic combatant commands. Take, for example, US Indo-Pacific Command (INDOPACOM). INDOPACOM covers more of the globe than any other combatant command and is home to the DOD’s “pacing challenge” of China, a range of potential military and geopolitical hot spots, several US allies and partners, and a growing list of security challenges. While differing in its prioritization, US Central Command (CENTCOM) is similarly complex in nature. CENTCOM covers nearly 4 million square miles, an area that includes key waterways and shipping lanes, active conflicts, ethnic and sectarian violence, and a diverse set of threats to regional security and US national interests.

While each command faces unique problem sets, the size and complexity of today’s security challenges are straining the limited number of forces available to deter, identify, assess, or respond to a fast-moving threat or crisis in a timely manner. HMT offers a solution to meet an increasingly sophisticated threat landscape while operating with insufficient resources.

Exporting Task Force 59: A sandbox for AI experimentation and integration

In September 2021, CENTCOM’s 5th Fleet established Task Force 59—the Navy’s testing ground for unmanned systems and AI—to experiment with teaming human operators and both smart robots and AI agents to increase presence across the region, provide persistent and expanded maritime domain awareness (MDA), and prioritize threats for in-demand crewed and high-value assets.

Task Force 59’s experimentation efforts combine AI and uncrewed systems (particularly USVs but also vertical takeoff and landing UAS, which are valuable in expanding the coverage of the Navy’s ISR networks in the region) and are further amplified by cooperation with partners and allies. In comments at the February 2023 International Defence Conference in Abu Dhabi, 5th Fleet Commander Vice Admiral Brad Cooper explained that each of the small USVs with which Task Force 59 is experimenting can extend the range of ISR networks by thirty kilometers, meaning that even a modest investment in smart uncrewed systems can deliver a significant increase in MDA.[7]

[7] One of the authors, Tate Nurkin, attended the conference on February 19, 2023, and moderated the panel in which these comments were made.

AI agents then process the millions of data points collected by the uncrewed systems to build an understanding of normal patterns of life, which, in turn, serves as a baseline for AI agents to identify anomalies that require further investigation by human operators and watchkeepers. In doing so, human-machine teams can cultivate a deeper understanding of the operational environment, moving toward a predictive model in which DOD may be able to anticipate and prevent future threats and prioritize allocation of limited resources to the areas that AI agents determine are most vulnerable to disruption.
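A deliberately simple sketch can illustrate the pattern-of-life approach described above. It assumes, purely for illustration, that each observation is a count of vessels seen in a named zone during a given hour of the day. "Normal" is taken to be the mean and standard deviation of historical counts for that zone and hour. Anything more than three standard deviations from that baseline, or any zone and hour with no baseline at all, is flagged for a human watchkeeper. The function names, zones, and threshold are hypothetical. The source does not describe the algorithms Task Force 59 actually uses, and real systems would fuse far richer data than a single count.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical observation format (an assumption, not Task Force 59's schema):
# (zone, hour_of_day, vessel_count)


def build_baseline(history):
    """Learn a simple 'pattern of life': the mean and population standard
    deviation of vessel counts for each (zone, hour) pair."""
    buckets = defaultdict(list)
    for zone, hour, count in history:
        buckets[(zone, hour)].append(count)
    return {key: (mean(counts), pstdev(counts)) for key, counts in buckets.items()}


def flag_anomalies(baseline, observations, threshold=3.0, min_std=1.0):
    """Return observations that a human watchkeeper should review.

    Each flagged item is (zone, hour, count, z_score). z_score is None when
    the (zone, hour) pair has no baseline. min_std prevents a perfectly
    regular history from producing a zero denominator or hair-trigger alerts.
    """
    flagged = []
    for zone, hour, count in observations:
        if (zone, hour) not in baseline:
            # Unfamiliar zone/hour: surface it rather than silently ignore it.
            flagged.append((zone, hour, count, None))
            continue
        mu, sigma = baseline[(zone, hour)]
        z = (count - mu) / max(sigma, min_std)
        if abs(z) > threshold:
            flagged.append((zone, hour, count, round(z, 2)))
    return flagged


if __name__ == "__main__":
    history = [("strait", 2, c) for c in (4, 5, 6, 5, 4, 6)] + [
        ("harbor", 14, c) for c in (20, 22, 21, 19, 18, 20)
    ]
    baseline = build_baseline(history)
    new = [("strait", 2, 5), ("strait", 2, 15), ("harbor", 14, 21), ("anchorage", 3, 2)]
    print(flag_anomalies(baseline, new))
    # → [('strait', 2, 15, 10.0), ('anchorage', 3, 2, None)]
```

In this toy run, the surge of fifteen vessels in the strait and the previously unseen anchorage activity are surfaced for review, while ordinary variation in the harbor is not. The division of labor mirrors the teaming described above: the machine narrows the field, and the human decides what an anomaly means.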

Building on the success of the Navy’s Task Force 59, both the Army and Air Force components of CENTCOM have stood up task forces to experiment with emerging technology and HMT concepts. The Army component, Task Force 39, was established in November 2022 to advance experimentation in counter-small UAS solutions that can be scaled not just within CENTCOM environments but across the DOD. The Air Force’s Task Force 99 was stood up in October 2022 as an operational task force to pair unmanned and digital technologies to improve air domain awareness in much the same way that Task Force 59 seeks to improve MDA by expanding presence and anticipating emergent threats. Lieutenant General Alexus Grynkewich, commander of CENTCOM’s Air Force component, stated in February 2023 that the objective is “not just tracking objects in the air, but maybe finding things that could be on the ground about to be launched into the air and how those could be a threat to us.”

The applicability of this HMT approach is not limited to CENTCOM; indeed, it is now being adopted in other commands. In April 2023, the US Navy announced the expansion of its experimentation with unmanned and AI tools into US Southern Command’s 4th Fleet to increase MDA in a region with its own particular dynamics, threats, and MDA requirements. Notably, 4th Fleet is taking a different approach to adopting HMT lessons and new HMT capabilities, integrating them into its command and staff structure rather than standing up a discrete task force. While these task forces provide valuable models for quickly bringing off-the-shelf technologies to the warfighter, the challenge lies in scaling these solutions across services and domains (and the defense enterprise at large).

Pathways to HMT adoption at scale and pace

The pace of AI and HMT technology development has exceeded the capacity of the DOD to adopt these technologies at scale. To more fully realize the advantages of HMT, DOD must:

- Address several cultural, bureaucratic, and organizational challenges;

- Ensure continued focus on HMT ethics, safety, and agency;

- Embrace rapid and realistic experimentation of HMT; and

- Develop the human component of HMT and enhance operators’ trust in their machine teammates.

Culture, acquisition, and melting the “frozen middle”

Organizational and cultural constraints radiate across DOD, affecting the way the enterprise acquires and adopts the technologies, concepts, and capabilities necessary to enable HMT. Innovation and adoption are hindered by DOD’s “frozen middle”: layers of relatively senior military and civilian personnel bound by an underlying set of inherited assumptions, incentives, and instincts that resist the adaptive, collaborative practices necessary for adopting HMT concepts and capabilities at pace and scale. These organizational layers and bureaucratic proclivities have a cascading effect as they become embedded in DOD’s acquisition system. According to former Secretary of Defense Mark Esper and former Secretary of the Air Force Deborah Lee James, co-chairs of the Atlantic Council’s Commission on Defense Innovation Adoption, the effect is to slow adoption by requiring multiple levels of review “because incentives do not exist to be bold and to move fast.”

Organizational “tribalism” also has an enervating effect on DOD’s acquisition of key technologies and adoption of HMT-relevant capabilities. Examples of cross-service and cross-command collaboration do exist: workshop participants highlighted joint projects on AI transparency between service laboratories and the ability of US Special Operations Command, as a functional command, to work across regions and services. Far more frequently, though, disjointed development, vendor engagement, and procurement efforts have been the norm. Achieving the necessary momentum for adoption across DOD, and, most importantly, the salutary outcomes of this adoption, requires an increased willingness to share and subsequently align research, information, development efforts, acquisition models, and best practices across the department and particularly across the services.

In June 2022, David Tremper, director for electronic warfare in the Office of the Under Secretary of Defense for Acquisition and Sustainment, provided an example of how DOD silos slow adoption of HMT-enabling capabilities. Tremper highlighted an incident in which the Navy developed an electronic warfare algorithm that was of interest to the Army, but the algorithm could not be transferred without a memorandum of understanding (MoU) between the two services’ labs. It took nine months for the lab that developed the algorithm to approve the transfer, and, after eighteen months, the MoU was determined to be inadequate to support the algorithm migration. According to Tremper, “one service just lawyered up against the other . . . and prevented a critical capability . . . from going from one service to another service.” This example highlights the hurdles that prevent the services from learning from and building on one another’s strides in HMT. Sharing HMT solutions across services is critical to keep one service from unnecessarily spending its resources in a field where a sister service has already paved the way.

To melt this frozen middle and reduce cultural and organizational obstacles to HMT adoption, the DOD must center its efforts around three high-level aims. First, acquisition reform is a necessary and foundational step. The ability to develop, build, and acquire advanced platforms and systems with longer development times remains important to achieving DOD missions. There is also growing and urgent demand for the technologies and capabilities enabling HMT, such as software and lower-cost uncrewed systems, across the Pentagon. The program-centric system used to acquire and develop many advanced platforms—with the development of requirements, budgets, and capabilities completed individually for hundreds of programs—is not optimized for the rapid acquisition of the innovative technologies and capabilities developed by traditional defense suppliers and the commercial sector.

While this report does not aim to provide a comprehensive set of recommendations for acquisition reform, two principles are useful in guiding this reform: 1) increased flexibility and agility within the acquisition process, enabling it to respond to changing technologies and requirements; and 2) new incentives that encourage greater risk tolerance.8 [Note 8: In April 2023, the Atlantic Council’s Commission on Defense Innovation Adoption released its interim report, which offers actionable recommendations to engender these changes. Lofgren et al., Atlantic Council Commission on Defense Innovation Adoption Interim Report.]

Second, a lack of understanding of HMT and its applications among the workforce that sets requirements for, and works alongside, machine teammates is slowing adoption. The human element of this equation must be adequately prioritized through recruitment, retention, and training programs. Specifically, DOD must give its personnel opportunities to enhance their digital literacy and their understanding of the technologies, concepts, and applications of HMT capabilities, not just within the acquisition force and among those who set requirements but also among many senior decision-makers.

Third, DOD should push the limits of existing authorities to secure “quick wins” in support of rapid acquisition and experimentation, similar to the Task Force 59 model. According to Schuyler Moore, CENTCOM’s chief technology officer, one of the key lessons of the command’s innovation efforts is “that [CENTCOM] can actually work with the existing [authorities] fairly flexibly,” and officers must consider “whether or not the authority exists or whether it’s simply never been tried before.”9 [Note 9: “US Central Command Chief Technology Officer Schuyler Moore and Innovation Oasis Winner Sgt. Mickey Reeve Press Briefing,” US Central Command.] Officers must be incentivized to use their own ingenuity to test new HMT capabilities and demonstrate operational successes.

The human component: Trust and training

Building and calibrating appropriate levels of human trust in AI teammates is essential to HMT adoption across the DOD. This discussion typically begins with actions designed to ensure the reliability (that is, the trustworthiness) of AI agents, such as establishing and implementing effective best practices for the design, rigorous testing, iterative experimentation, and deployment of AI-enabled machines. Enhancing data security is another frequently cited component of building trust: humans cannot trust algorithms if they lack faith in the integrity of the data those algorithms process or are trained on.

Limitations in AI transparency (i.e., how machines perceive the environments they are in) and explainability (i.e., how machines come to decisions) pose another challenge. While not prohibitive to the employment of HMT in many missions and contexts, reducing the “black box” nature of AI outputs will have a positive impact on the capacity for humans to trust their machine teammates and, in turn, on the pace of adoption for the range of HMT applications.

Increased experimentation—in terms of frequency, rigor, and realism—can enhance the human operator’s understanding of how an AI agent reached a certain conclusion. The DOD must embrace accelerated experimentation across a diverse set of scenarios and use cases. By asking humans to “try to break the technology,” operators and analysts can develop a better understanding of the limits, strengths, and weaknesses of their AI-enabled teammates as well as any possible unexpected behaviors. Rapid and aggressive experimentation in conditions that replicate real-world operational conditions has been a regularly highlighted feature of Task Force 59’s success to date, though there is concern that this practice has not been adopted broadly across DOD.

Human trust in machines, however, is only one part of the human component of HMT. At least as much attention should go to building the competencies and confidence humans need to engage with and employ their machine partners effectively. Humans will require just as much preparation and training as their machine teammates to become effective team leaders and to ensure that the value of human-machine teams is realized. At a minimum, adequate training will help avoid a worst-case scenario in which ineffectual human engagement with machines (the inability to recognize a hallucinating machine, for example) or unnecessary interference with machine operations leads to mistakes with deleterious tactical, operational, or strategic consequences. One salient example comes from the Marine Corps’ experimentation with large language models (LLMs). Experiment organizers noted that the tendency of these models to hallucinate means “falsification is still a human responsibility” and that “absent a trained user, relying on model-produced outputs risks confirmation bias,” leaving individuals “prone to [acting] off the hallucinations of machines.”

Such training is especially important when a general-purpose machine or set of machines interacts with multiple humans rather than a single human controller. In those situations, protocols for determining who is in control, whose priorities are executed, and how to manage contradictory instructions become essential.

Ethics, agency, judgment, and reliability: Maintaining leadership and retaining relevance

Pathways to adopting HMT at speed and scale do not rely solely on the DOD doing things radically differently or applying new incentives and structures. Rather, they can build on the momentum of ongoing initiatives.

The DOD has already expended commendable energy in understanding and addressing questions of ethics, safety, alignment, and agency—all of which emerged as acute areas of interest and concern during the project workshops. The DOD, in collaboration with other parts of the US government, has been proactive in articulating its positions on key questions of ethics and safety around AI development and use for military purposes since first adopting principles for AI ethics in February 2020. In February 2023, at the conclusion of the Responsible AI in the Military Domain conference, the US Department of State released a “Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy” as the foundation for the establishment of international norms and standards for the development and use of military AI. The document includes twelve best practices that emphasize concepts such as safe and secure development, extensive testing, and human control and oversight, among others, that endorsing states should implement.

These principles and best practices as well as current laws, policies, and regulations provide a useful foundation for resolving critical questions about agency, such as:

- Which component of the human-machine teams makes decisions under what circumstances?

- When might the machine’s judgment supersede that of the commander?

- What implications will these answers have for recruitment, reskilling, and retention of talent?

- How does the United States avoid or mitigate tactical events and AI/HMT mistakes that have significant strategic consequences?

- How do we judge the possible risk of AI-enabled decision-making in kill chains relative to the current and demonstrated risk of human error in these decisions?

Still, as technologies, concepts, applications, and competitions evolve, new questions are likely to be raised. Old practices may need to be iteratively revisited to ensure that adoption efforts remain relevant, current, and appropriate. DOD efforts to maintain leadership at home and internationally in establishing AI and HMT ethics and safety guidelines should be viewed as a process to follow rather than an end state to be achieved.

Understanding knock-on effects of HMT incorporation

DOD adoption of HMT into military operations will elicit responses from competitors and lead to changing risks, competitions, opportunities, and doctrine. For example, the use of HMT-enabled UAS swarms could shift targeting priorities from forward-deployed machines to the human controllers and decision-makers situated well behind contested environments. Another example can be found in the use of an AI-enabled machine to patrol a contested border, which could reduce immediate risk to human life and, as a result, reduce risks of rapid escalation. However, immediate loss of life may not be the only escalation pathway perceived by an adversary. If destroying autonomous systems is lower stakes than firing on humans, how many downed UASs would the United States tolerate? Alternatively, what if the AI-enabled machine recommends or makes a bad decision that leads to an unprovoked loss of life in the adversary state?

The United States and its allies should intensify their examination of these types of what-if scenarios associated with HMT adoption, first to preempt arguments that DOD has not thought through the long-term implications of HMT employment, and then to determine the most efficacious concepts of use and prepare for possible future risks. A combination of iterative tabletop, seminar-style, and live war games and model-based simulations would be especially useful in identifying and preparing for the longer-term implications of HMT. These exercises could then inform live testing of HMT concepts and capabilities.

Shaping the narrative around military applications of HMT

Mixed feelings and misperceptions about the DOD’s use of AI and uncrewed systems persist within the defense enterprise and the US polity and society more broadly. Despite an increase in the number of young personnel who are considered to be more intuitively inclined to technological adoption, pockets of stubbornness and contrarianism, and efforts to protect institutional equities, remain throughout the force. In addition, some social and political perspectives on HMT may be skewed by fears of fully autonomous weapons systems and singularities in which AI overcomes human control with devastating consequences. Identifying and managing the variety of strategic, operational, social, and philosophical concerns about accelerating HMT adoption will necessitate a coherent and consistent campaign to shape the narrative around military AI use and HMT. This narrative should stress:

- The demonstrated and prospective advantages HMT will provide to warfighters and decision-makers, and the budgetary efficiencies that could be gained through deeper adoption of HMT;

- The measures DOD is taking to ensure the safe and ethical development and use of AI and to build humans’ trust in machines; and

- The centrality of human agency to human-machine teams.

Summary of recommendations and conclusion

HMT offers several advantages to twenty-first-century militaries. As such, the DOD must invest sufficient time and resources to address the challenges to adoption discussed above. Catalyzing HMT adoption will require a combination of new ideas, procedures, and incentives, as well as an intensification of promising ongoing efforts to accelerate adoption, especially in the following areas:

- Developing an enterprise-wide approach to HMT adoption that builds on centralized structures such as the Chief Digital and Artificial Intelligence Office and provides them with sufficient money and authority to ensure that requirements, capability, infrastructure, and strategy development, as well as procurement and vendor engagement, are mutually reinforcing across the DOD.

- Conducting rapid, iterative, and aggressive experimentation in settings that replicate the challenges of a real-world operational environment, which will facilitate adoption by helping humans test and understand the breaking points of HMT technologies. Different levels of experimentation can also build the human trust in AI teammates that is necessary to optimize HMT value.

- Melting the “frozen middle” through reforms that increase incentives for moving quickly, align DOD and congressional reform priorities, and reinforce efforts to ensure an enterprise-wide (rather than service-by-service or command-by-command) approach to HMT adoption. The Atlantic Council Commission on Defense Innovation Adoption has developed several specific recommendations that apply to the acquisition and adoption of HMT capabilities.10 [Note 10: Lofgren et al., Atlantic Council Commission on Defense Innovation Adoption Interim Report.]

- Articulating and demonstrating the multilayered value of HMT in gaining advantage over competitors and potential adversaries in a future operational environment characterized by significantly increased pace of operations, amount of available data, and complexity of threats.

- Continuing to lead on issues of agency and ethics by prioritizing the ethical and responsible development and use of trustworthy AI and keeping humans—and human judgment—at the center of human-machine teams. The US government and private sector should revisit and update guidelines around agency and ethics to reflect contemporaneous technology development trends and capabilities.

- Developing strategic messages that highlight the value and safety of HMT for DOD and congressional stakeholders as well as for American society more broadly.

The United States’ AI strides are not occurring in a vacuum; if the Pentagon is slow to adopt HMT at scale, it risks conceding military edge to strategic competitors like China that view AI as a security imperative. Machines and AI agents are becoming ubiquitous across the twenty-first-century battlespace, and the onus is on DOD to demonstrate, communicate, and realize HMT’s value to achieving future missions and national objectives.

Acknowledgements

To produce this report, the authors conducted a number of interviews and workshops. Listed below are some of the individuals consulted and whose insights informed this report. The analysis and recommendations presented here are those of the authors alone and do not necessarily represent the views of the individuals consulted. Moreover, the named individuals participated in a personal, not institutional, capacity.

- Maj Ezra Akin, USMC, technical PhD analyst, Commandant’s Office of Net Assessment

- Samuel Bendett, adjunct senior fellow, Technology and National Security Program, Center for a New American Security

- August Cole, nonresident senior fellow, Forward Defense, Scowcroft Center for Strategy and Security, Atlantic Council

- Owen J. Daniels, Andrew W. Marshall fellow, Center for Security and Emerging Technology, Georgetown University

- Phil Freidhoff, vice president for human centered design projects, 2Mi

- Dr. Gerald F. Goodwin, senior research scientist, personnel sciences, US Army Research Institute for the Behavioral and Social Sciences

- Dr. Neera Jain, associate professor, School of Mechanical Engineering, Purdue University

- LCDR Marek Jestrab, USN, senior US Navy fellow, Forward Defense, Scowcroft Center for Strategy and Security, Atlantic Council

- Zak Kallenborn, policy fellow, Schar School of Policy and Government, George Mason University

- Harry Kemsley OBE, president, government and national security, Janes Group

- Dr. Margarita Konaev, nonresident senior fellow, Forward Defense, Scowcroft Center for Strategy and Security, Atlantic Council

- Justin Lynch, nonresident senior fellow, Forward Defense, Scowcroft Center for Strategy and Security, Atlantic Council

- Dr. Joseph Lyons, principal research psychologist, Air Force Research Laboratory

- Brig Gen Patrick Malackowski, USAF (ret.), director, F-16, conventional weapons, and total force, Lockheed Martin Corporation

- Col Michelle Melendez, USMC, senior US Marine Corps fellow, Forward Defense, Scowcroft Center for Strategy and Security, Atlantic Council

- Rob Murray, nonresident senior fellow, Forward Defense, Scowcroft Center for Strategy and Security, Atlantic Council

- Dr. Julie Obenauer-Motley, senior national security analyst, Johns Hopkins University Applied Physics Lab

- John T. Quinn II, head, futures branch, concepts and plans division, Marine Corps Warfighting Lab

- Dr. Laura Steckman, program officer, trust and influence, Air Force Office of Scientific Research

Sponsored By

This report was generously sponsored by Lockheed Martin Corporation. The report is written and published in accordance with the Atlantic Council Policy on Intellectual Independence. The authors are solely responsible for its analysis and recommendations. The Atlantic Council and its donors do not determine, nor do they necessarily endorse or advocate for, any of this report’s conclusions.

About the authors

Forward Defense, housed within the Scowcroft Center for Strategy and Security, generates ideas and connects stakeholders in the defense ecosystem to promote an enduring military advantage for the United States, its allies, and partners. Our work identifies the defense strategies, capabilities, and resources the United States needs to deter and, if necessary, prevail in future conflict.