Event recap | Data salon episode 2: Could better technology protect privacy when a crisis requires enhanced knowledge?

On Wednesday, 17 June 2020, the Atlantic Council’s GeoTech Center and Accenture held the second episode of their jointly presented Data Salon Series. The episode featured a presentation by Mr. Davi Ottenheimer, Vice President of Trust and Digital Ethics at Inrupt, which prompted animated discussion among participants about the nature of privacy, consent, and responsibility. The event focused on how our understanding of privacy, and of how to preserve it, shapes our ability to compromise it temporarily in order to address crises. These issues are particularly relevant to the ongoing pandemic, and their intersections with other topics are especially timely: integrating different cultural priorities and expectations of privacy, ensuring that data truly represents a diverse population, and examining the nuanced relationships between privacy, knowledge, and power.

Presentation

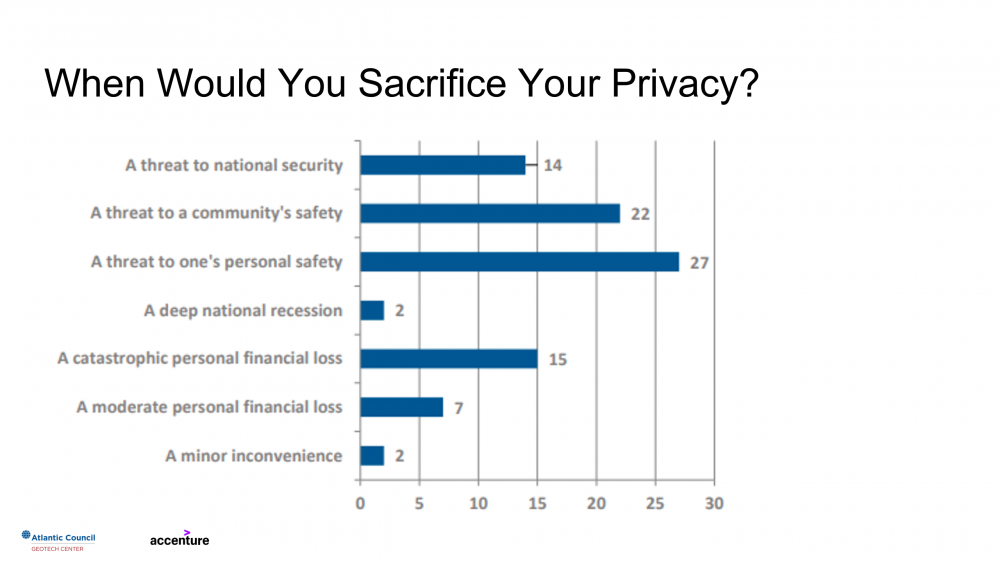

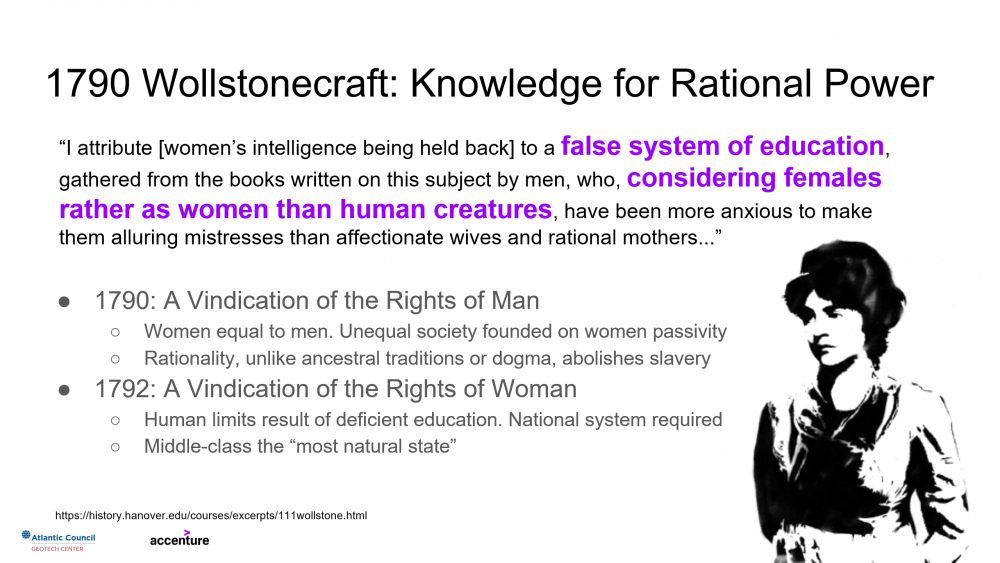

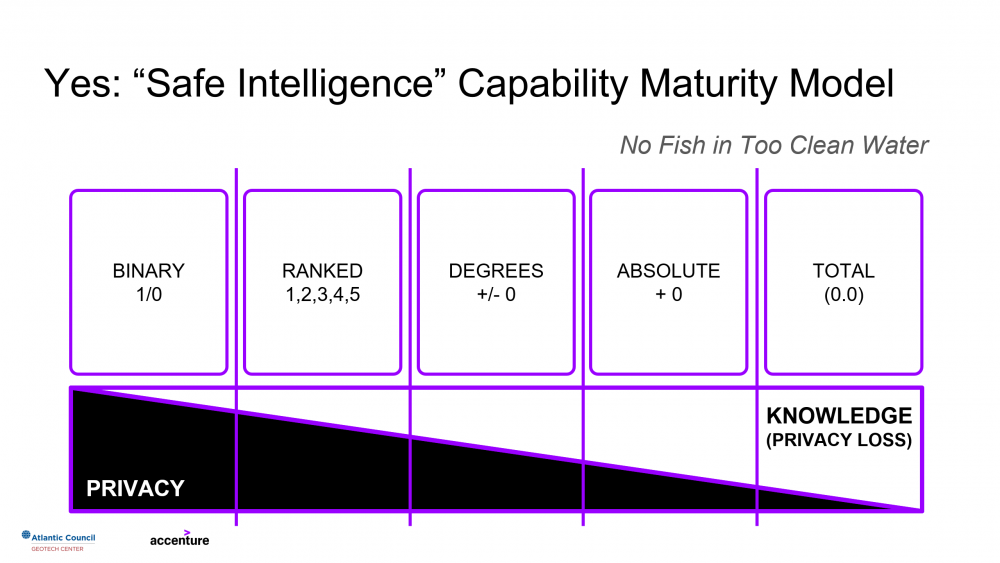

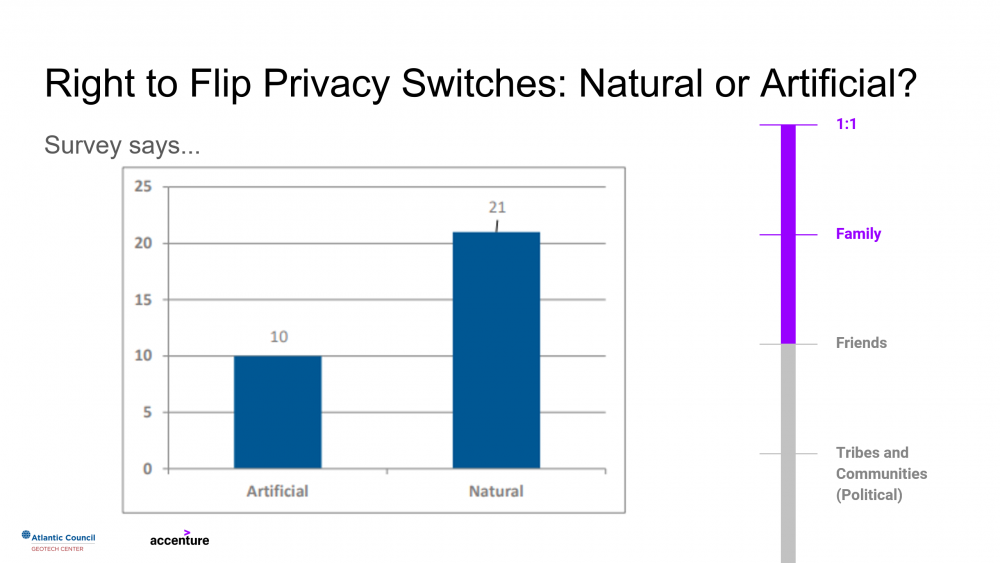

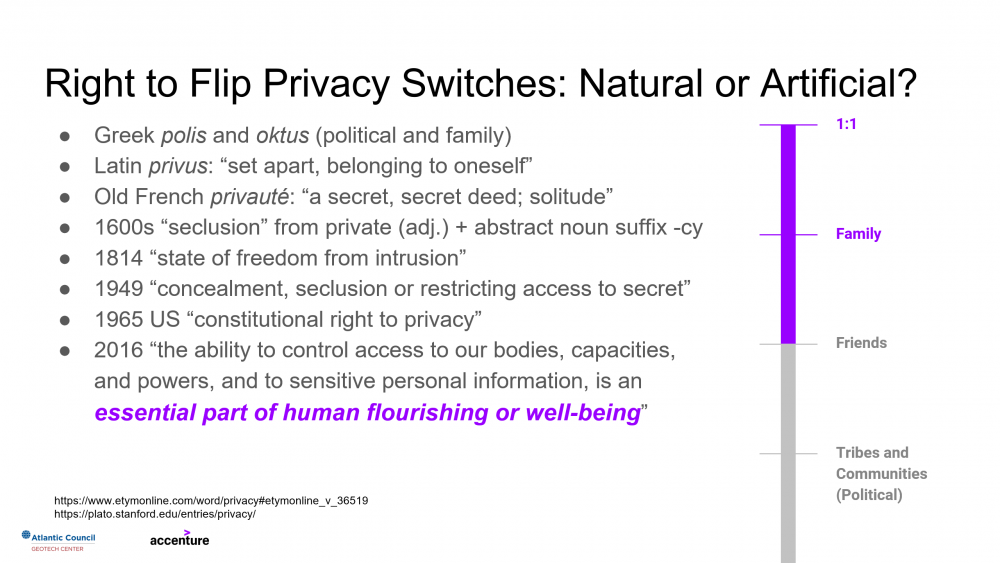

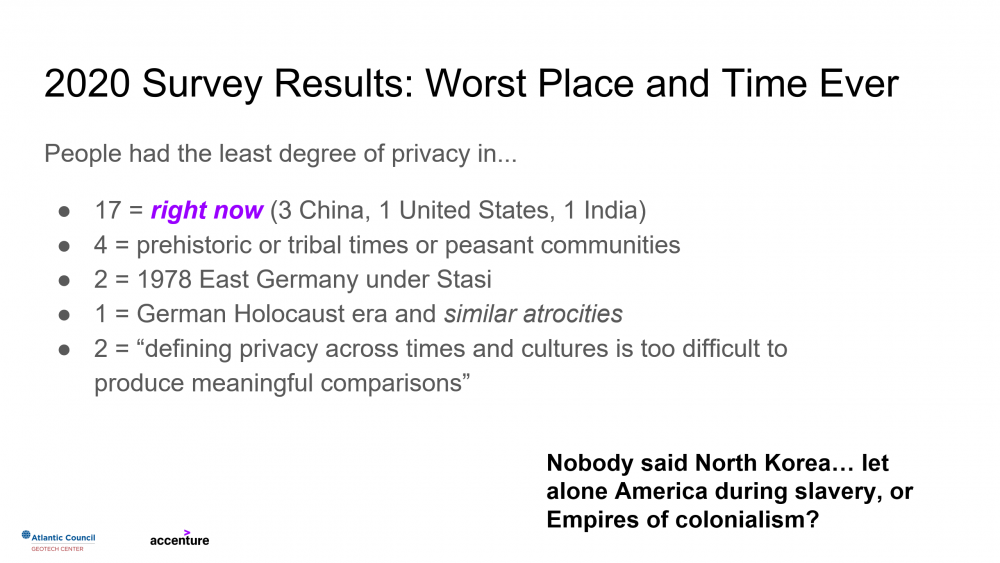

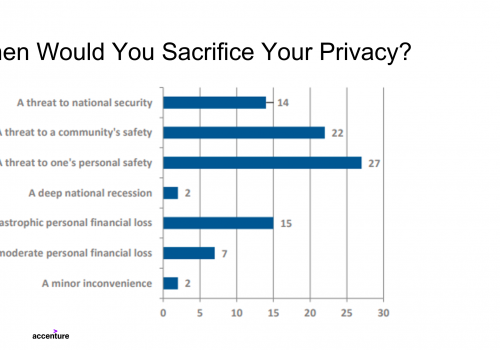

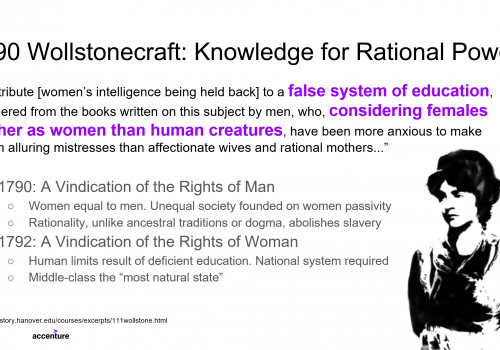

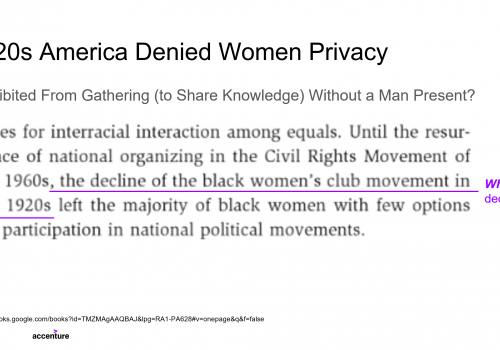

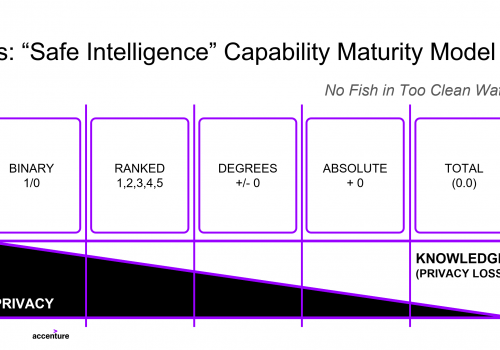

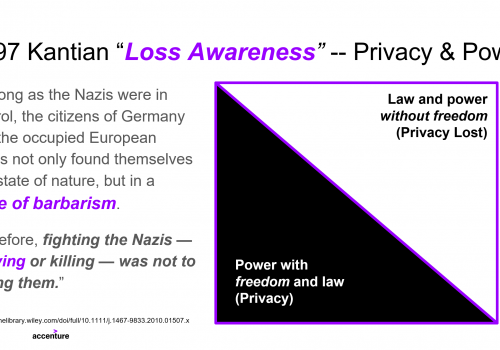

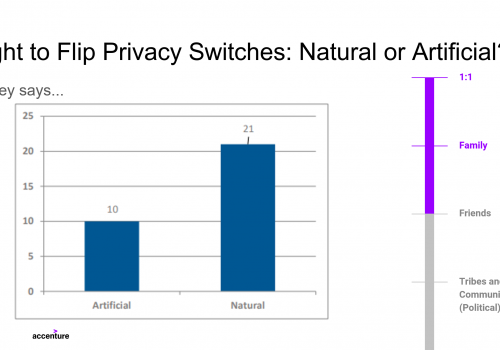

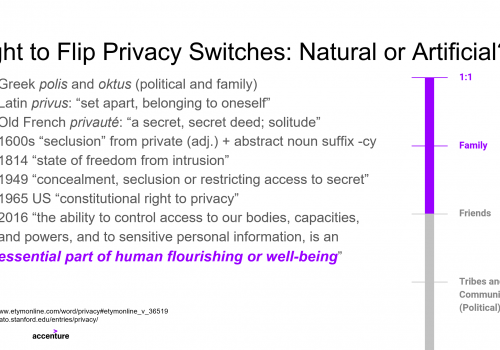

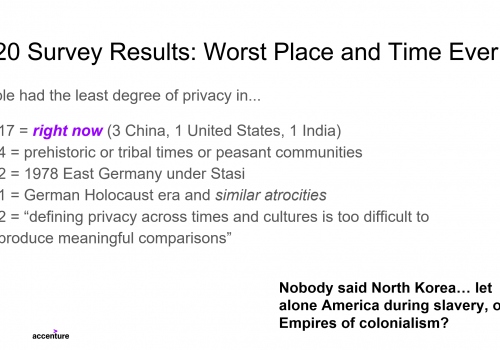

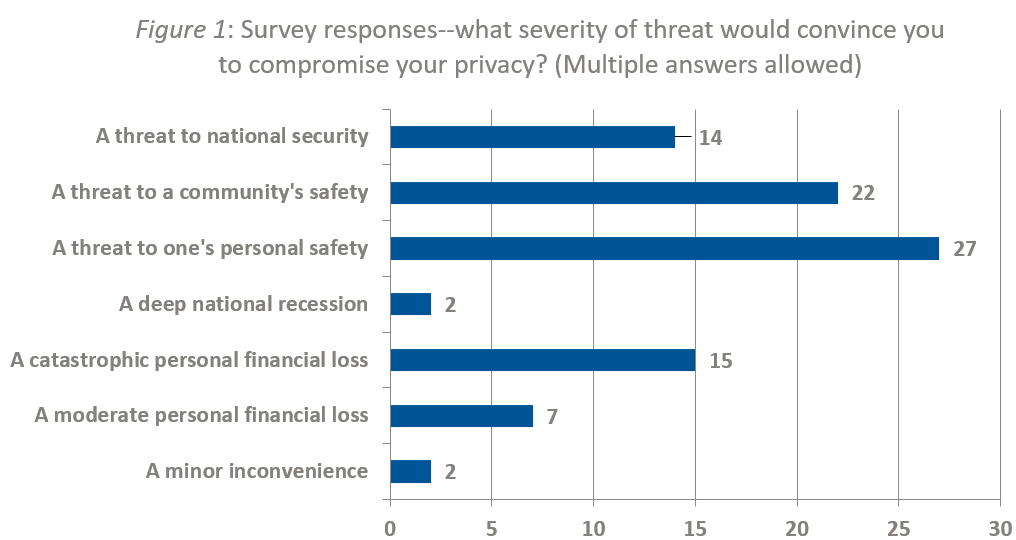

Mr. Ottenheimer’s presentation focused on the history of privacy, how other cultures understand the concept, and how expectations of privacy vary during crises and across technologies. He began by noting that some societies are willing to sacrifice a degree of privacy when there is a threat to an individual, while others are more focused on threats to the community. A brief survey administered before the presentation illustrated this distinction, showing that the audience’s priorities aligned more closely with individual interests (see figure 1). Mr. Ottenheimer also introduced a framework that treats privacy as a concept in tension with knowledge: losing privacy results in gained knowledge. The relationship is nuanced, though. In some contexts, marginalized groups are denied the privacy in which they could pursue knowledge, and in others the knowledge gained from degraded privacy benefits only some groups.

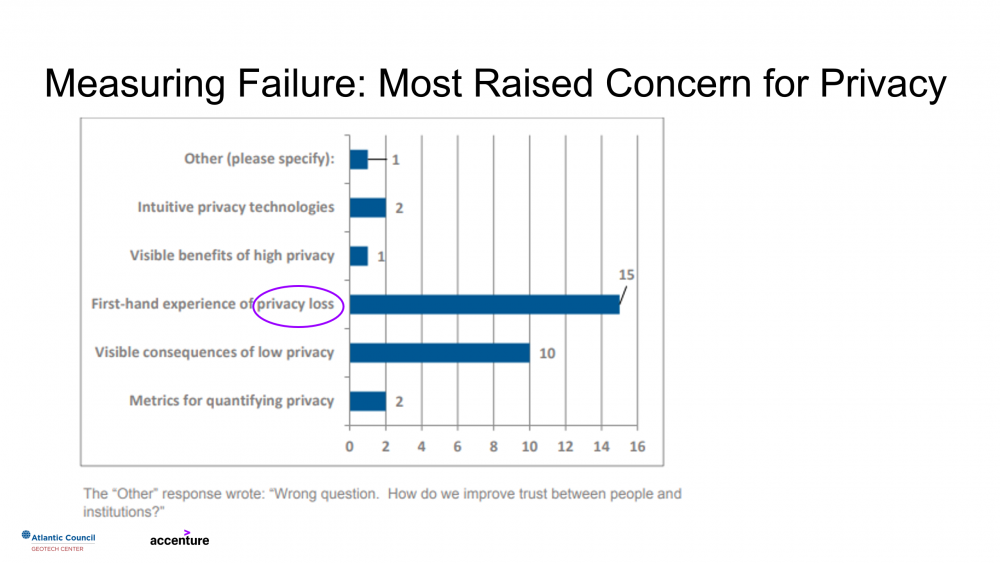

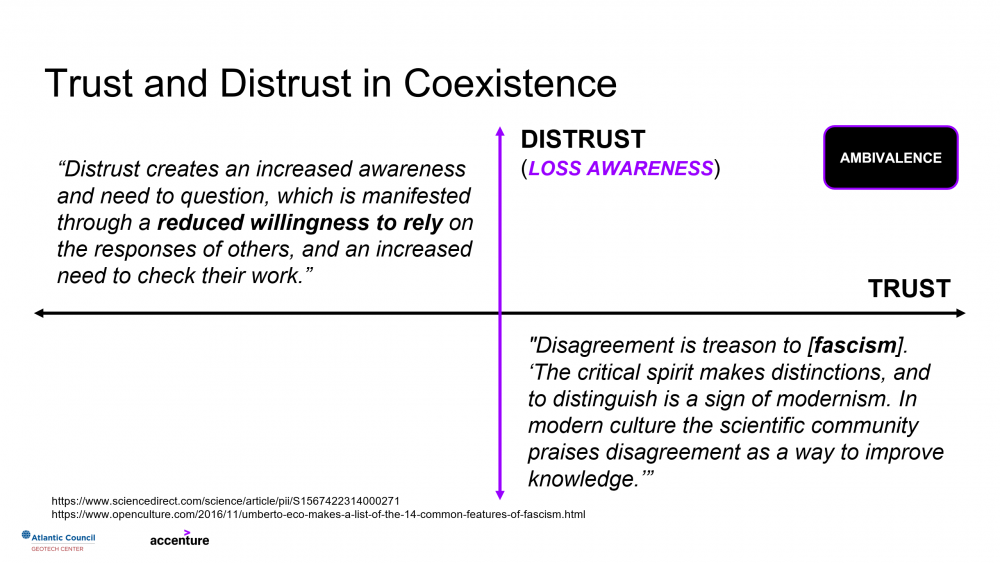

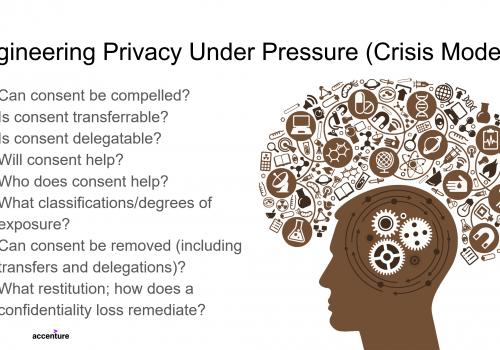

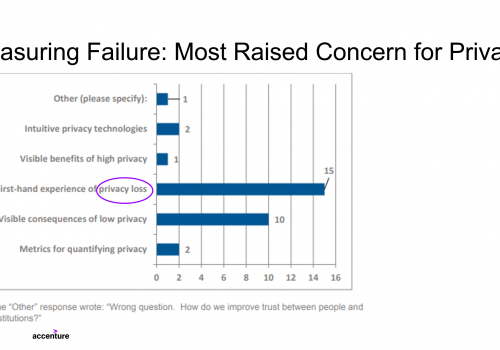

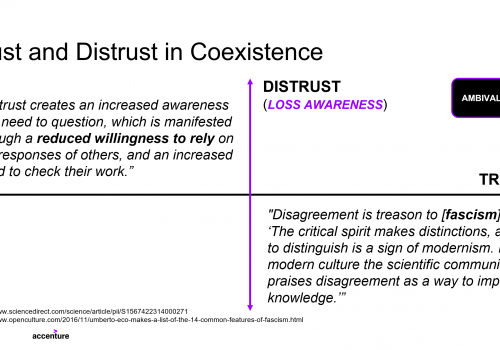

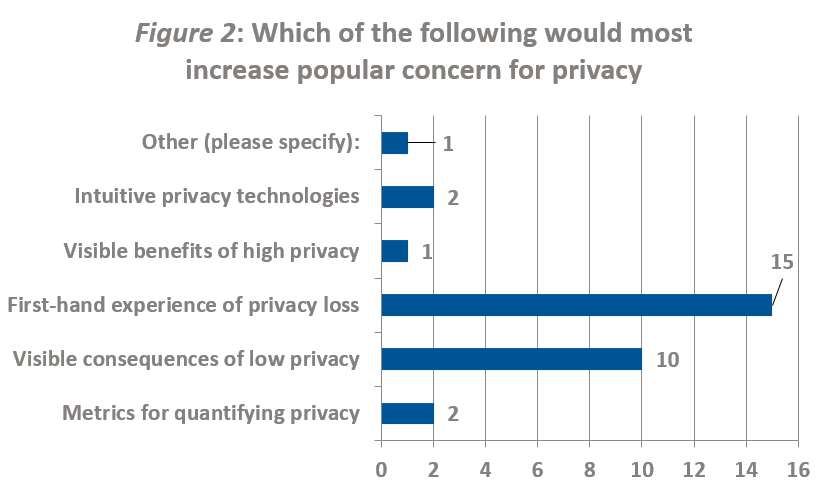

Next, trust and distrust were presented as separate axes rather than opposite ends of a single continuum. Mr. Ottenheimer described a lack of trust as ambivalence rather than suspicion, whereas the presence of distrust creates a need to question, to develop independence, and to monitor. Survey results also helped motivate this distinction: audience members indicated that the experience of losing privacy would do the most to increase popular concern for privacy (see figure 2). Applying these principles to technology use during a crisis, Mr. Ottenheimer proposed two ways to keep our treatment of privacy consent-based when a disruption requires compromise. First, crisis-optimized privacy must include a disabling function: some way for users to turn off, at their discretion, the increased access granted to institutions addressing the crisis. Second, a reset must be possible, allowing reversion to a prior information state in order to correct false information or undo a compromise in privacy while still preventing manipulation by malicious actors.

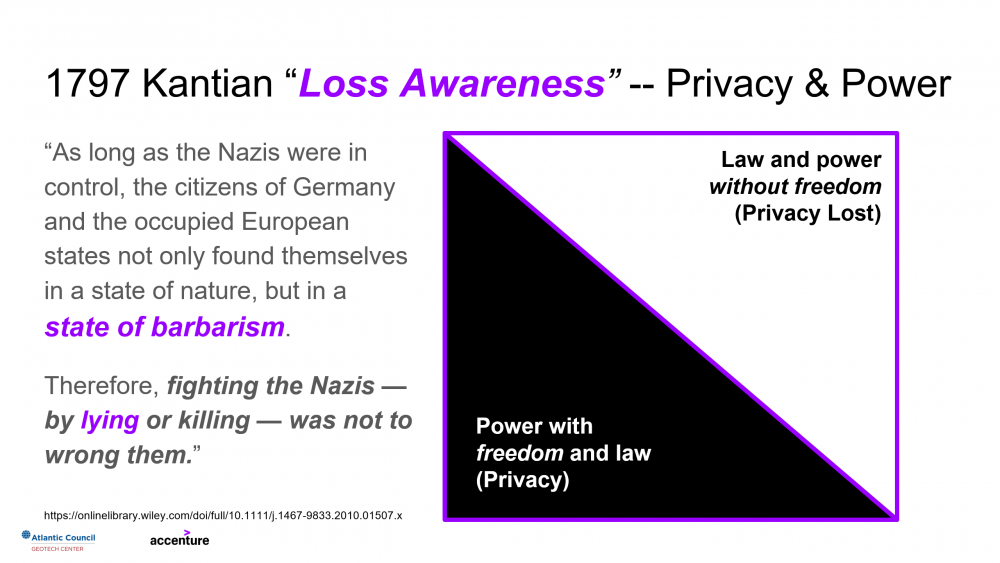

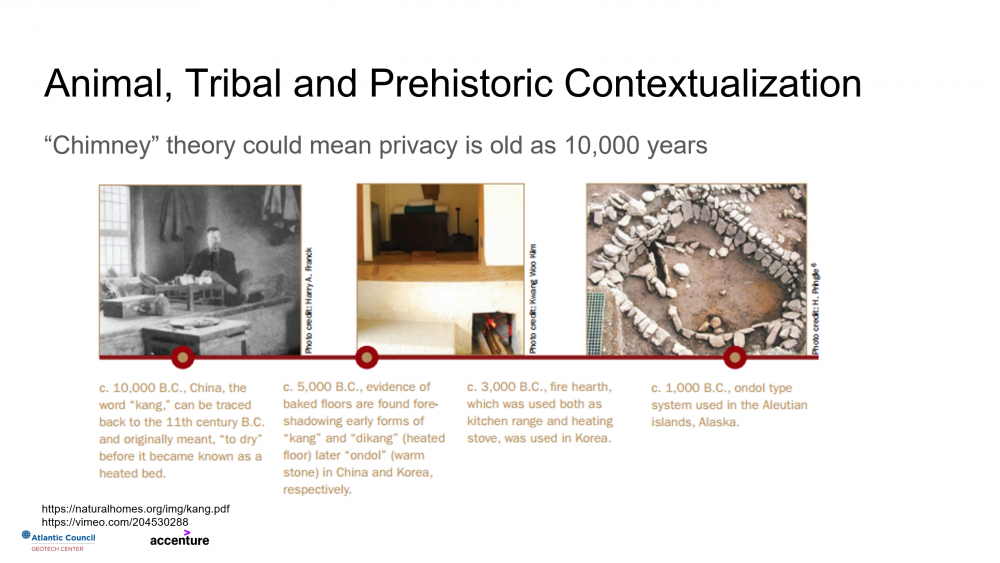

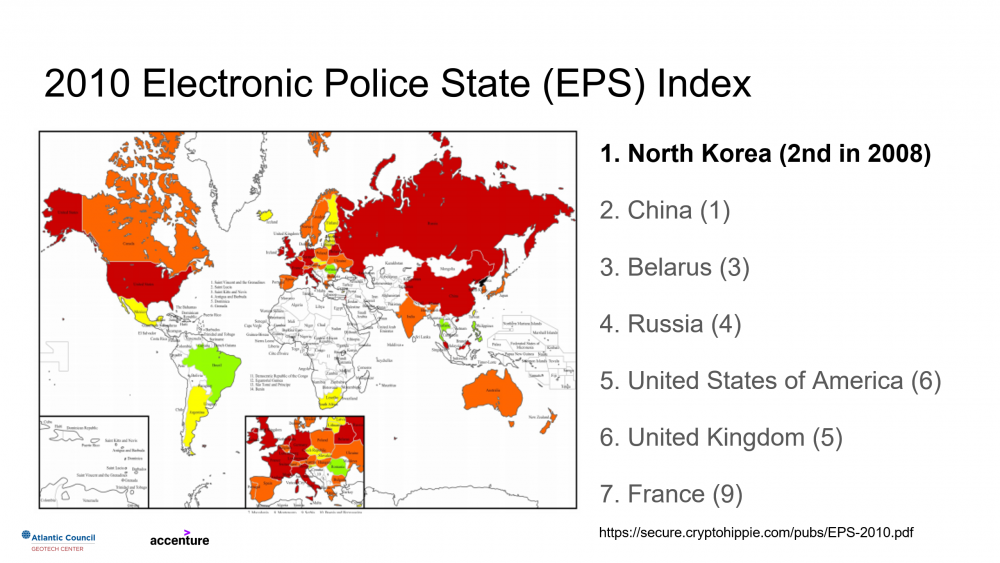

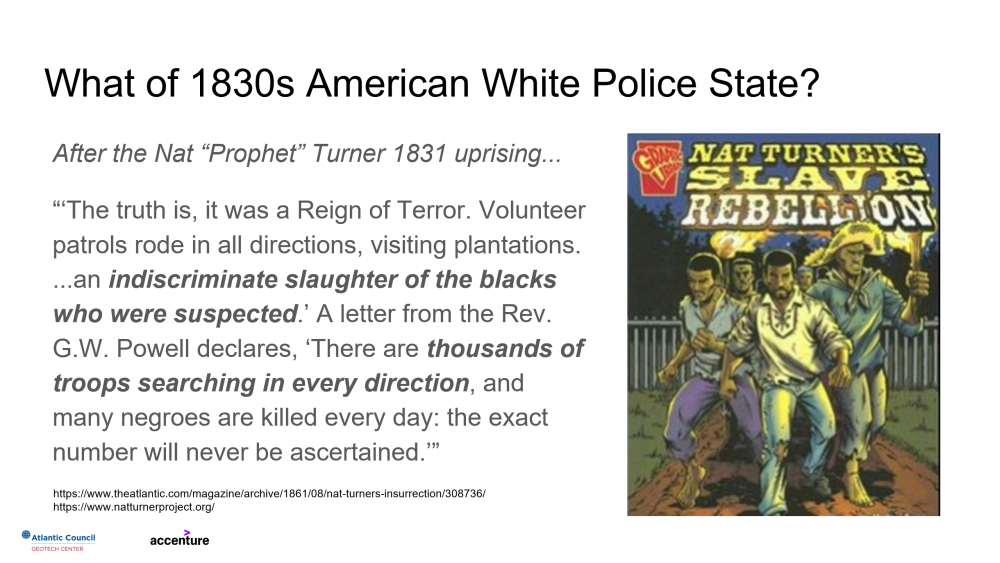

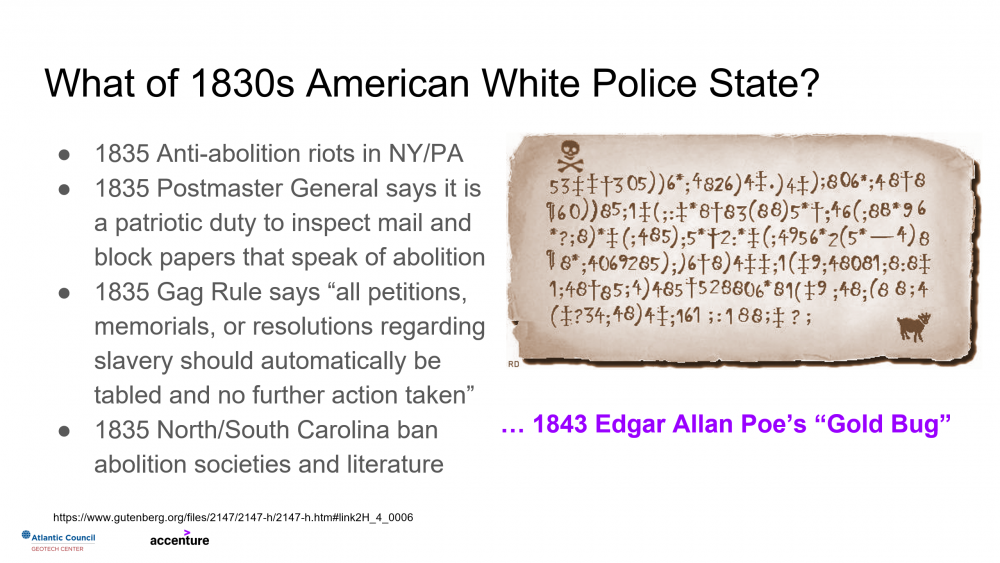

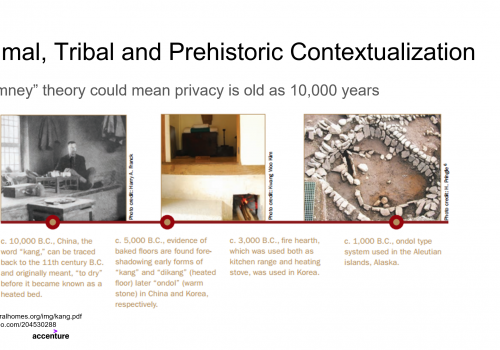

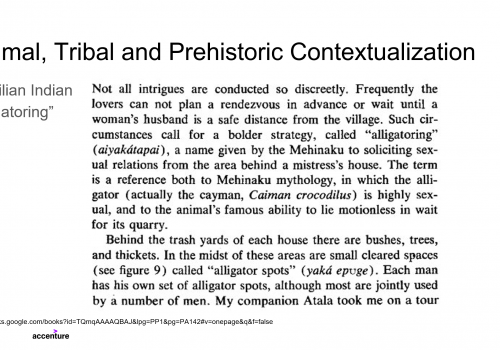

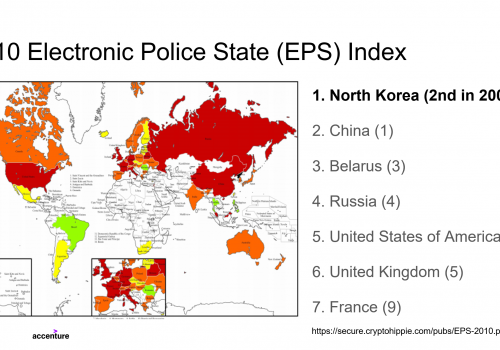

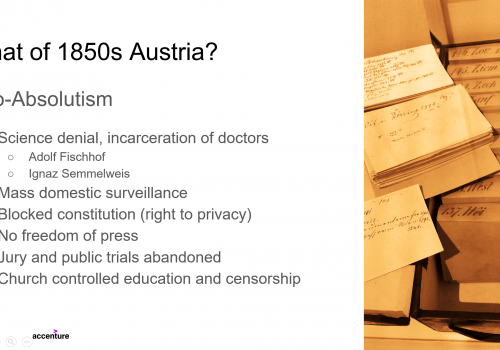

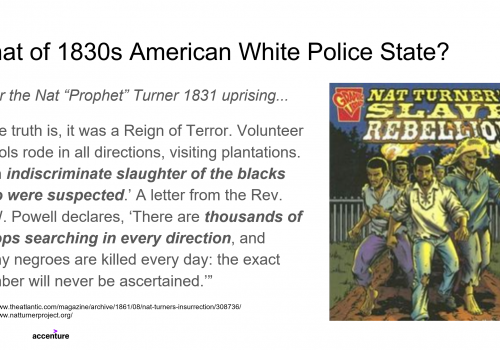

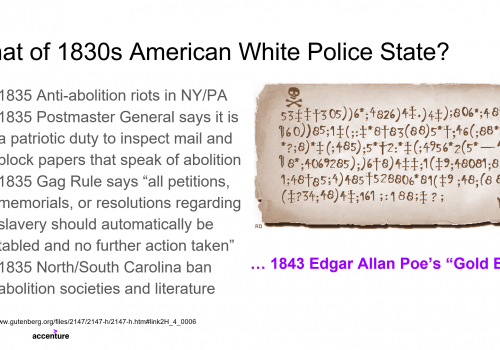

Throughout his presentation, Mr. Ottenheimer drew on historical examples to ground abstract theories and past beliefs about privacy: the development of chimneys, the abuses of dictatorships past and present, the plight of enslaved Black people in the slave-owning South, police states at home and abroad, colonial plantations, 1850s Austria, and more. Tying them together was a deep examination of the relationship between privacy and power, which led Mr. Ottenheimer to end on a somber observation: some surveys report that 50% of cryptographers are uncomfortable answering questions about ethics. There may therefore be a troubling disconnect between engineers’ experience and comfort on the one hand and the ethical ramifications of what they create on the other.


Discussion

Many in the audience used Mr. Ottenheimer’s presentation as a prompt for addressing the gaps between existing laws and broader notions of privacy. One discussant asked about the lack of legal frameworks establishing any responsibility for industry to preserve privacy, a gap Mr. Ottenheimer hoped technology companies could address through their own design choices. Ideally, privacy-minded engineers would build consent and transparency into software, giving customers control over when companies are granted full access to their data, for example for service needs. Another audience member raised the challenges of “informed” consent, which presumes an often unrealistic level of understanding on the part of consumers, particularly as more of the world comes online. Others noted the opportunities that a clean slate for technology and policy development presents in some parts of the world, and some were optimistic about the market growth and innovation that could follow.

Towards the event’s end, several discussants raised a concern that echoed a parallel conversation in the Zoom chat: focusing on privacy and informed consent through a technological lens misses critical ideas about ethical norms. They worried about placing undue focus on problems that have purely technical solutions. Some wanted to refocus on the fiduciary duties of care that could apply to information providers, on preserving trust between users and industry, and on incentivizing or enforcing responsible corporate citizenship. The discussion ended on the note that technological solutions may not be so easily untangled from broader ones, since many of the frameworks that could empower people who provide data to own its use and their own consent have both technical and policy elements.

We look forward to the next episode in the Data Salon Series on Wednesday, 29 July 2020, at 11:30 a.m. EDT, in which Ms. Joy Bonaguro, Chief Data Officer for the State of California, will present on her state’s response to COVID-19. We hope to see you there.


The GeoTech Center champions positive paths forward that societies can pursue to ensure new technologies and data empower people, prosperity, and peace.